Since Microsoft’s Build 2015 conference, and increasingly since Microsoft’s showing at E3, everybody (including me) has been talking about HoloLens, and its limited field of view (FoV) has been a contentious topic. The main points being argued (fought) about are:

- What exactly is the HoloLens’ FoV?

- Why is it as big (or small) as it is, and will it improve for the released product?

- How does the size of the FoV affect the HoloLens’ usability and effectiveness?

- Were Microsoft’s released videos and live footage of stage demos misleading?

- How can one visualize the HoloLens’ FoV in order to give people who have not tried it an idea of what it’s like?

Measuring Field of View

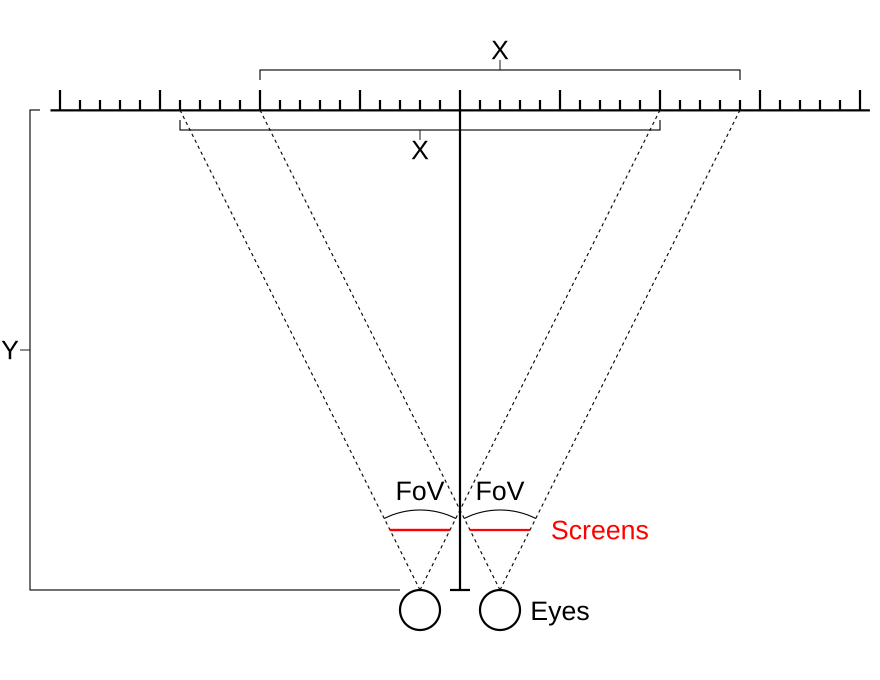

Initially, there was little agreement among those who experienced HoloLens regarding its field of view. That’s probably due to two reasons: one, it’s actually quite difficult to measure the FoV of a head-mounted display; and two, nobody was allowed to bring any tools or devices into the demonstration rooms. In principle, to measure see-through FoV, one has to hold some object, say a ruler, at a known distance from one’s eyes, and then mark down where the apparent left and right edges of the display area fall on the object. Knowing the distance X between the left/right markers and the distance Y between the eyes and the object, FoV is calculated via simple trigonometry: FoV = 2×tan⁻¹(X / (2×Y)) (see Figure 1).
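To make the trigonometry concrete, here is a minimal Python sketch of that calculation. The function name `fov_degrees` and the sample numbers are made up for illustration; they are not measurements of the HoloLens.

```python
import math


def fov_degrees(marker_separation, eye_distance):
    """Return the angular field of view in degrees.

    marker_separation: distance X between the left and right edge markers.
    eye_distance: distance Y from the eyes to the object carrying the markers.
    Both values must use the same unit (centimeters, inches, ...).
    """
    if marker_separation <= 0 or eye_distance <= 0:
        raise ValueError("both distances must be positive")
    # Half of X and the distance Y form a right triangle whose angle at
    # the eye is half the field of view, hence the factor of two.
    half_angle = math.atan(marker_separation / (2.0 * eye_distance))
    return math.degrees(2.0 * half_angle)


# Hypothetical example: markers 30 cm apart on a ruler held 60 cm away.
print(f"{fov_degrees(30.0, 60.0):.1f} degrees")
```

With these made-up values the result is roughly 28 degrees. Because only the ratio X/Y enters the formula, the unit does not matter as long as both distances use the same one.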