Note: This is part 2 of a four-part series. [Part 1] [Part 3] [Part 4]

The final update/edit to my previous post was to report that I had managed to synchronize the DK2’s tracking LEDs to its camera’s video stream by following pH5’s ouvrt code, and that I was able to extract 5-bit IDs for each LED by observing changes in that LED’s brightness over time. Unfortunately I’ll have to start off right away by admitting that I made a bad mistake.

Understanding the DK2’s camera

Once I started looking more closely, I realized that the camera was only capturing 30 frames per second when locked to the DK2’s synchronization cable, instead of the expected 60. After downloading the data sheet for the camera’s imaging sensor, the Aptina MT9V034, and poring over the documentation, I realized that I had set the wrong vertical blanking interval. Instead of using a value of 5, as the official run-time and pH5’s code do, I was using a value of 57, because that was the original value I found in the vertical blanking register before I started messing with the sensor.

As it turns out, a camera (or at least this camera) captures video in the same way a monitor displays it: padded with a horizontal and a vertical blanking period. By leaving the vertical blanking period too large, I had extended the time it takes the camera to capture a frame and send it across its host interface. Extended by how much? Well, the camera has a usable frame size of 752×480 pixels, a horizontal blanking interval of 94 pixels, and a (fixed) pixel clock of 26.66MHz. Using a vertical blanking interval of 5 lines, the total frame time is ((752+94)*(480+5)+4)/26.66MHz = 15.391ms (in case you’re wondering where the “+4” comes from, so am I. It’s part of the formula in the data sheet). Using 57 as the vertical blanking interval, the total frame time becomes ((752+94)*(480+57)+4)/26.66MHz = 17.041ms.

Notice something? 17.041ms is longer than the synchronization pulse interval of 16.666ms. Oops. The exposure trigger for an odd frame arrives while the camera is still busy processing the preceding even frame, and is therefore ignored, so the camera skips every odd frame and captures at 30Hz. Lesson learned.
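For anyone who wants to play with the numbers, here is a minimal Python sketch of the frame-time arithmetic. The constants are the ones quoted above; the function names are made up for illustration, and the formula is simply the one from the data sheet as I read it.

```python
# Frame-time arithmetic for the DK2 camera, using the values quoted in the text.
PIXEL_CLOCK_HZ = 26.66e6   # fixed pixel clock
ACTIVE_WIDTH = 752         # usable frame width in pixels
ACTIVE_HEIGHT = 480        # usable frame height in rows
H_BLANK = 94               # horizontal blanking interval in pixels
SYNC_INTERVAL_MS = 16.666  # interval between synchronization pulses


def frame_time_ms(v_blank):
    """Total frame time in milliseconds for a vertical blanking interval in rows."""
    clocks = (ACTIVE_WIDTH + H_BLANK) * (ACTIVE_HEIGHT + v_blank) + 4
    return clocks / PIXEL_CLOCK_HZ * 1e3


def keeps_up_with_sync(v_blank):
    """True if a frame finishes before the next synchronization pulse arrives."""
    return frame_time_ms(v_blank) < SYNC_INTERVAL_MS


if __name__ == "__main__":
    for v_blank in (5, 57):
        print(v_blank, round(frame_time_ms(v_blank), 3), keeps_up_with_sync(v_blank))
```

Any vertical blanking value for which `keeps_up_with_sync` returns False should lead to the every-other-frame behavior described above.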

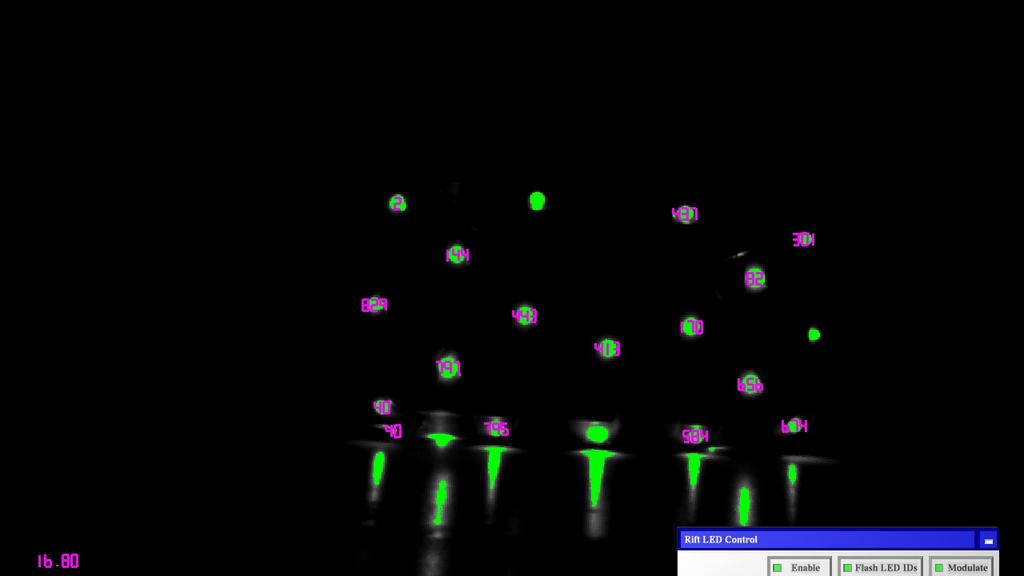

Figure 1: First result from LED identification algorithm, showing wrong ID numbers due to the camera dropping video frames all over the place.

Correcting pattern drift

While it took me a while to figure this out, the fix was trivial, and afterwards it became clear immediately that the LEDs are in fact blinking out a 10-bit pattern, not a 5-bit pattern. That makes a ton more sense, as there are no more ambiguities (32 5-bit patterns vs. 40 LEDs), and there is suddenly a lot of redundancy (1024 10-bit patterns vs. 40 LEDs) to correct misidentified bits. It was easy to implement a detector to track LED blobs through the video stream over time and collect their bits to calculate IDs (see Figure 1), but there was another problem. At random intervals, the extracted ID numbers would suddenly switch over to a completely different set of numbers, then stay at those for a while, and then switch again etc. This also took me a (very) long time to figure out, and the culprit was either the camera’s kernel driver, or my computer’s CPU, which started running hot about a week ago to the point where it sometimes has to enter emergency low-power mode (gotta fix that!). The camera was silently dropping video frames at random but short intervals (around 5-10 seconds), which led to an across-the-board shift in the observed bit patterns.

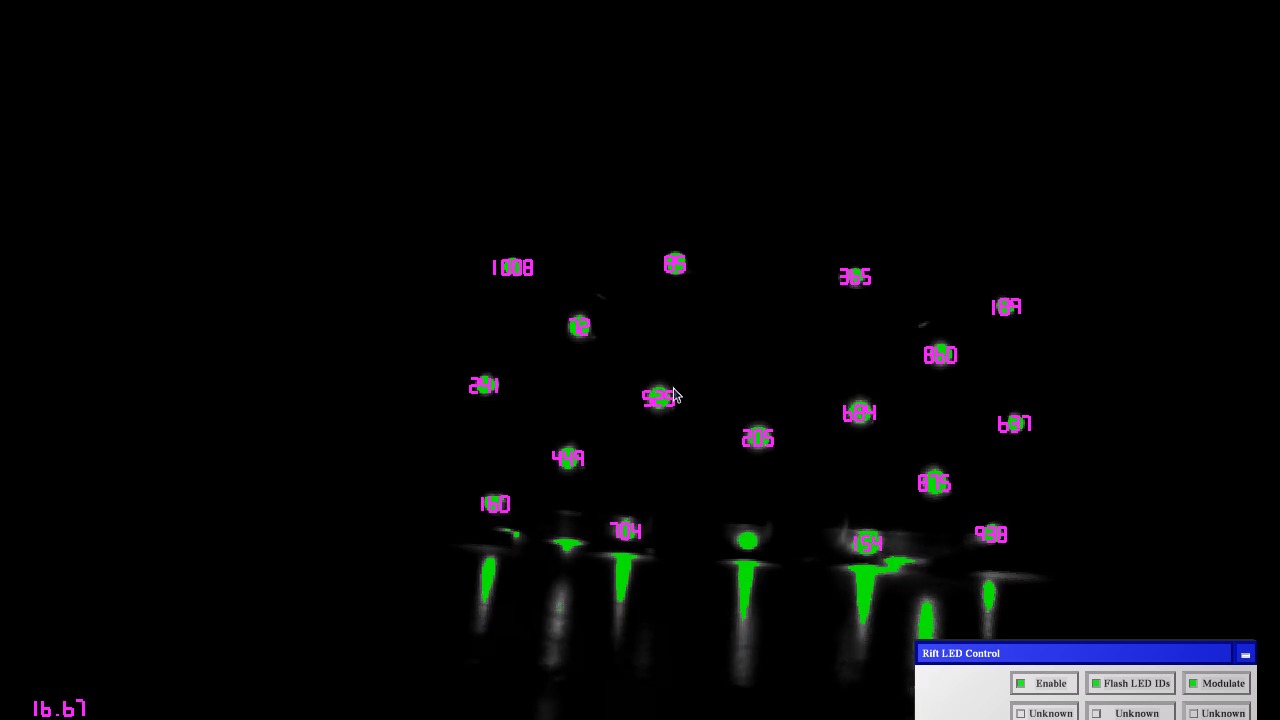

After putting in a fix to detect dropped frames by observing the real time interval between arriving video frames, I was finally able to record a video showing LED tracking and identification working in real time (see Figure 2).

Figure 2: LED tracking and identification algorithm with frame-drop correction. Still frame from “Identifying LEDs Based on Blinking Patterns.”

Correcting detection errors

As can be seen in the video linked above, there are still (rare) instances where the extracted patterns change value for a short time. My algorithm uses a differential detector, to ignore variations in the sizes of LED blobs due to perspective projection or exposure settings. When a new frame arrives, the algorithm first extracts all connected blobs of bright pixels from the frame (green blobs in the video and images), then ignores all blobs that either contain fewer than 10 pixels or are not roughly disk-shaped, and finally looks back at the large disk-like blobs extracted from the previous frame, and finds the one that is closest to the new blob in image space.
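The filtering and matching step can be sketched roughly like this. It is not my actual code: blobs are plain dictionaries here, the disk-shape test and the maximum matching distance are assumptions picked for illustration, and only the 10-pixel minimum comes from the description above.

```python
import math

MIN_BLOB_PIXELS = 10      # from the text
MAX_MATCH_DISTANCE = 8.0  # assumed matching radius in pixels, not from the text


def is_roughly_disk(blob):
    """Assumed shape test: near-square bounding box that is mostly filled."""
    w, h = blob["width"], blob["height"]
    aspect = w / h
    fill = blob["size"] / (w * h)
    return 0.5 <= aspect <= 2.0 and fill >= 0.5


def candidate_blobs(blobs):
    """Keep only blobs that are large enough and roughly disk-shaped."""
    return [b for b in blobs if b["size"] >= MIN_BLOB_PIXELS and is_roughly_disk(b)]


def closest_blob(new_blob, old_blobs, max_distance=MAX_MATCH_DISTANCE):
    """Return the previous-frame blob nearest to new_blob, or None if none is close enough."""
    best, best_dist = None, max_distance
    for old in old_blobs:
        dist = math.hypot(new_blob["x"] - old["x"], new_blob["y"] - old["y"])
        if dist <= best_dist:
            best, best_dist = old, dist
    return best
```

A brute-force search like this is fine for a few dozen blobs per frame; a spatial index would only matter with far more candidates.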

If the algorithm finds an old blob that is close enough, it grabs the old blob’s 10-bit pattern and then compares that blob’s size (number of pixels) to the size of the new blob, and if the new blob is at least 10% larger, it sets the bit corresponding to the current frame index to 1; if the old blob is at least 10% larger it sets the same bit to 0, and if neither is true, it copies the value of the bit that was set during the previous frame. This works really well, but it can happen that random variations in frame-to-frame LED brightness cause the algorithm to detect a 0->1 or 1->0 switch when there was in fact none, or sometimes miss a switch that was there. This manifests in single-bit errors in the extracted IDs which are automatically corrected 10 frames later, when the frame counter wraps around to the same bit and the algorithm resets it to its proper value. Still, that’s annoying and might cause tracking problems.
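Here is a small sketch of that differential bit update. The mapping from frame index to bit position (frame index modulo 10, least significant bit first) is an assumption for the example, and the function name is hypothetical; the 10% thresholds are the ones described above, written with integer arithmetic to avoid rounding surprises.

```python
NUM_BITS = 10


def update_pattern(old_pattern, old_size, new_size, frame_index):
    """Return the blob's pattern with the bit for this frame updated.

    Sizes are pixel counts. A new blob at least 10% larger sets the bit,
    an old blob at least 10% larger clears it, and otherwise the bit from
    the previous frame is carried over.
    """
    bit = frame_index % NUM_BITS
    previous_bit = (frame_index - 1) % NUM_BITS
    if new_size * 10 >= old_size * 11:
        value = 1
    elif old_size * 10 >= new_size * 11:
        value = 0
    else:
        value = (old_pattern >> previous_bit) & 1
    return (old_pattern & ~(1 << bit)) | (value << bit)
```

For example, a blob growing from 20 to 30 pixels at frame index 3 sets bit 3, so `update_pattern(0, 20, 30, 3)` returns 8.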

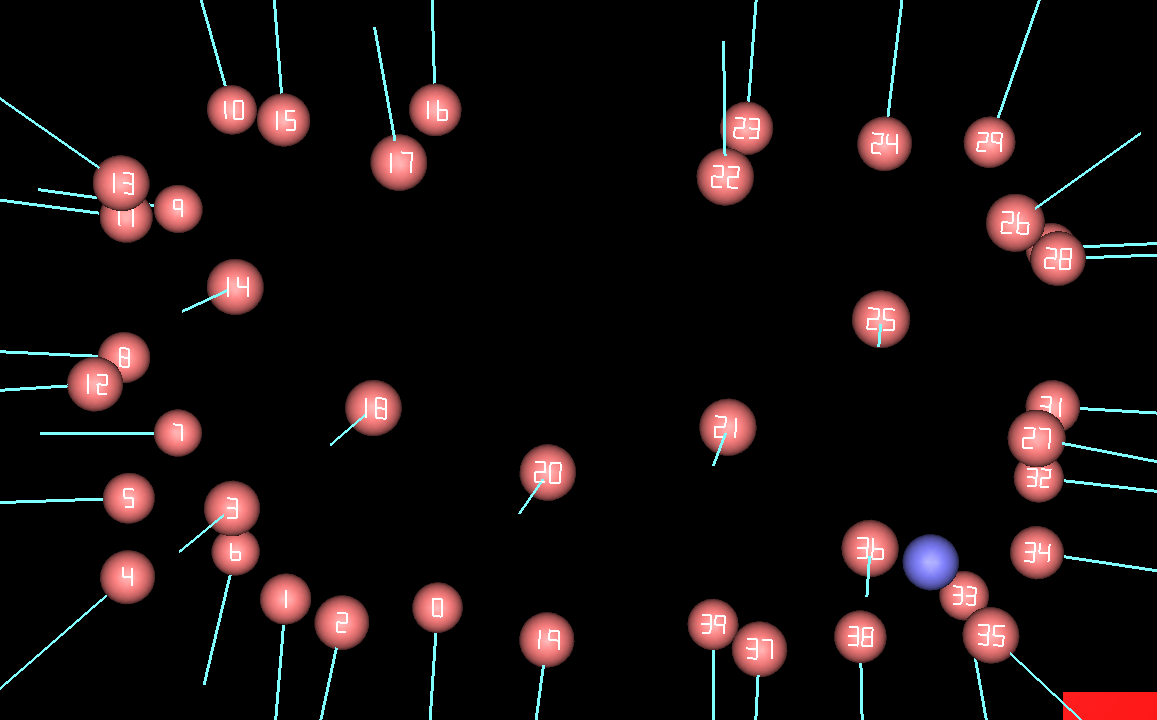

To improve this, I collected the full set of 40 10-bit patterns by turning the DK2 in front of the camera and jotting down numbers into a text file, and while I was at it, I also collected the indices of the associated 3D LED positions as extracted from the firmware via HID feature report 0x0f (see previous post). Here is the full list of 10-bit IDs, ordered by 3D marker position index in report 0x0f, from 0 to 39 (see Figure 3 for a picture of the corresponding marker positions in 3D space):

2, 513, 385, 898, 320, 835, 195, 291, 800, 675, 97, 610, 482, 993, 144, 592, 648, 170, 27, 792, 410, 345, 730, 56, 827, 697, 378, 251, 1016, 196, 165, 21, 534, 407, 916, 853, 727, 308, 182, 119

Figure 3: Oculus Rift DK2’s 3D LED positions, labeled by marker index in the sequence of 0x0f HID feature reports.

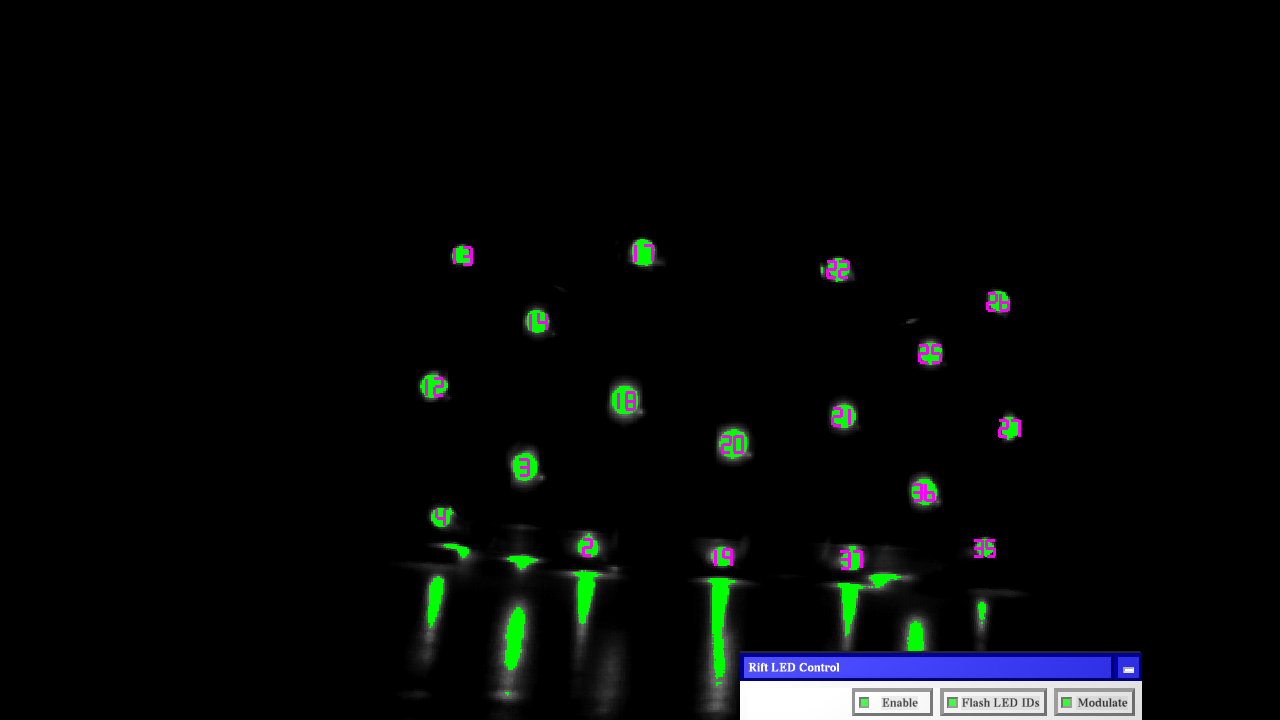

The numbers might look random (and maybe they are), but there’s a reason why they’re spread out over the entire [0, 1024) interval. If we know that the only 10-bit IDs that we expect to find in a video stream are the 40 listed above, we can use the redundancy of assigning 10 bits to encode 40 values to automatically correct the kinds of bit errors I described above.

This is based on an information theory concept called Hamming distance. Basically, the Hamming distance between two 10-bit binary numbers is the number of bits one has to flip to turn the first number into the second. If the numbers are identical, their Hamming distance is 0; if they differ in a single bit, their distance is 1; if they are bit-wise negations of each other, their Hamming distance is 10. There’s a related concept called minimal Hamming distance, which is the minimum of the Hamming distances between all pairs of distinct elements of a list. In the case of our 40 elements, their minimal Hamming distance happens to be 3. And that’s good news, because it means the minimum number of bit flips it takes to turn one valid ID into another valid ID is 3.

So if we assume that bit errors are rare enough that it’s improbable that more than one occurs in any sequence of ten video frames (and that seems to be the case), then we can not only detect, but correct those errors on-the-fly. A single flipped bit leaves the extracted number at distance 1 from the correct ID and at distance 2 or more from every other valid ID. We compare any extracted number to the list of 40, and find the list entry that has the smallest Hamming distance. If that distance is 0 or 1, we know that the number we extracted should be set to the list entry we found. If there happen to be 2 or more bit errors in a sequence of 10 frames, we’re out of luck, but in practice, it seems to be working like a charm.

And while we’re at it, instead of setting the LED blob ID to the correct 10-bit number, why don’t we just set it to the index of the associated 3D marker (see Figure 4)? That way we can feed it directly into the pose estimation algorithm.

Figure 4: LED blobs extracted from the video stream, labeled with the indices of their associated 3D marker positions. Compare to Figure 3.
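The Hamming-distance correction described above boils down to a few lines. This is a minimal sketch rather than my actual implementation: the ID list is the one given earlier, while the function names and the `max_errors` parameter are made up for the example.

```python
# The 40 10-bit IDs listed above, ordered by 3D marker index (0 to 39).
LED_IDS = [
    2, 513, 385, 898, 320, 835, 195, 291, 800, 675,
    97, 610, 482, 993, 144, 592, 648, 170, 27, 792,
    410, 345, 730, 56, 827, 697, 378, 251, 1016, 196,
    165, 21, 534, 407, 916, 853, 727, 308, 182, 119,
]


def hamming_distance(a, b):
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def identify_marker(extracted, max_errors=1):
    """Return the 3D marker index for an extracted pattern, or None.

    Picks the list entry with the smallest Hamming distance and accepts
    it only if that distance is at most max_errors.
    """
    best = min(range(len(LED_IDS)),
               key=lambda i: hamming_distance(extracted, LED_IDS[i]))
    if hamming_distance(extracted, LED_IDS[best]) <= max_errors:
        return best
    return None


def minimal_distance(ids):
    """Smallest Hamming distance between any two distinct entries of ids."""
    return min(hamming_distance(a, b)
               for i, a in enumerate(ids) for b in ids[i + 1:])
```

For instance, `identify_marker(513 ^ 4)` flips one bit of the second ID and still maps to marker index 1. Calling `minimal_distance(LED_IDS)` is a quick way to check the minimal Hamming distance of the list for yourself.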

Now, can we use the same mechanism to detect pattern shifts due to dropped frames or other synchronization problems? Alas, not easily. The minimal Hamming distance between the original set of IDs and left- or right-shifted copies of itself is only 1, meaning a bit shift on a single ID can’t be distinguished reliably from a single bit flip error. One would have to look at all LED blobs at once and assume that a bit error in all of them at the same time indicates a shift, but that makes the algorithm a lot more complex. Fortunately, even with my bad CPU, I didn’t encounter a case where the time-based drop-out detection algorithm missed a dropped frame, so I don’t expect this will be an issue.
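To check the shift ambiguity for a given ID list, something along these lines would do. It is a sketch under one assumption: that a dropped frame shows up as a cyclic rotation of the 10-bit pattern by one position. All function names are hypothetical.

```python
NUM_BITS = 10


def rotate_left(value, bits=NUM_BITS):
    """Cyclically rotate a bits-wide value left by one position."""
    return ((value << 1) | (value >> (bits - 1))) & ((1 << bits) - 1)


def rotate_right(value, bits=NUM_BITS):
    """Cyclically rotate a bits-wide value right by one position."""
    return (value >> 1) | ((value & 1) << (bits - 1))


def hamming_distance(a, b):
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def min_cross_distance(ids, shifted_ids):
    """Smallest Hamming distance between any original and any shifted ID."""
    return min(hamming_distance(a, b) for a in ids for b in shifted_ids)


def shift_ambiguity(ids):
    """Return the minimal distances to the left- and right-rotated copies of ids."""
    left = [rotate_left(i) for i in ids]
    right = [rotate_right(i) for i in ids]
    return min_cross_distance(ids, left), min_cross_distance(ids, right)
```

Passing the list of 40 IDs to `shift_ambiguity` gives the two distances in question; a result of 1 in either slot means a shifted pattern can look like a single-bit error on some other valid ID.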

Now, on to the low-level stuff and the nitty-gritty technical details.

Setting up the camera

To program the DK2’s camera, refer to pH5’s code and the imaging sensor data sheet. Program a window size of 752×480, horizontal and vertical blanking intervals of 94 pixels and 5 rows, respectively (yielding a total frame time of 15.391 ms), a total coarse shutter width of 11 rows and a total fine shutter width of 111 pixels (yielding a total exposure time of 353.225 microseconds), turn off automatic gain and exposure control and automatic black level calibration, set the analog gain to 16 and the black level to -127, and the chip control register to snapshot mode. The camera will now sit and wait for the first trigger signal from the DK2.
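As a sanity check on the exposure setting, the following sketch reproduces the quoted exposure time from the shutter widths. The formula (coarse rows times row length, plus fine pixels, divided by the pixel clock) is my reading of how the two numbers combine, so treat it as an assumption and defer to the data sheet.

```python
PIXEL_CLOCK_MHZ = 26.66  # fixed pixel clock
ROW_LENGTH = 752 + 94    # active width plus horizontal blanking, in pixels


def exposure_time_us(coarse_rows, fine_pixels):
    """Exposure time in microseconds for the given total shutter widths."""
    return (coarse_rows * ROW_LENGTH + fine_pixels) / PIXEL_CLOCK_MHZ


if __name__ == "__main__":
    # Coarse width of 11 rows and fine width of 111 pixels, as programmed above.
    print(round(exposure_time_us(11, 111), 3))
```

With 11 rows and 111 pixels this comes out at roughly 353 microseconds, a little longer than the 350 microsecond LED active interval set in report 0x0C.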

Starting the LED flashing sequence

I haven’t yet spent the time to find the minimum sequence of HID feature reports that reliably puts the DK2 into a stable blinking pattern, but the following works:

- Read a HID feature report 0x02 (7 bytes)

- Write a HID feature report 0x02: 0x02 0x00 0x00 0x20 0x01 0xE8 0x03 (the last two bytes translate to 1000 decimal; maybe a time-out of sorts?)

- Read a HID feature report 0x0C (13 bytes)

- Write a HID feature report 0x0C: 0x0C 0x00 0x00 0x00 0x07 0x5E 0x01 0x1A 0x41 0x00 0x00 0x7F (byte 3 selects one of several different blinking patterns, with 0 the one I used above; byte 4 (0x07) turns on the LEDs and enables modulation and pattern generation; bytes 5 and 6 (0x5E 0x01, or 350 decimal) set the LEDs’ active interval in microseconds, a wee bit shorter than the camera’s programmed 353 microsecond exposure interval; bytes 7 and 8 (0x1A 0x41, or 16666 decimal) set the LEDs’ frame interval in microseconds, a wee bit longer than the camera’s programmed frame interval of 15.391 ms and resulting in 60Hz capture; the next two bytes don’t seem to have a function; the last byte selects the LEDs’ duty cycle when modulation is enabled, which can be used to adjust brightness, 0x7F apparently sets a 50% duty cycle)
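The two write reports can be assembled with Python’s struct module, as in the sketch below. The field names are my interpretation of the bytes described in the list above (bytes 1 and 2 and the two bytes before the duty cycle are simply left at zero), and actually sending the buffers to the device is left to whatever HID library you use.

```python
import struct


def led_control_report(pattern=0, flags=0x07, active_us=350,
                       frame_interval_us=16666, duty_cycle=0x7F):
    """Build the 12 bytes of feature report 0x0C as listed in the text.

    Layout (little-endian): report ID, two zero bytes, pattern, flags,
    LED active interval, frame interval, two unused bytes, duty cycle.
    """
    return struct.pack("<BHBBHHHB", 0x0C, 0, pattern, flags,
                       active_us, frame_interval_us, 0, duty_cycle)


def report_02(mode=0x01, value=1000):
    """Build the 7 bytes of feature report 0x02; the meaning of the fields is unknown."""
    return struct.pack("<BHBBH", 0x02, 0, 0x20, mode, value)


if __name__ == "__main__":
    print(report_02().hex(" "))
    print(led_control_report().hex(" "))
```

Setting `flags=0` in `led_control_report` and `mode=0x13` in `report_02` yields the byte sequences used to switch the LEDs off again.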

Sending report 0x0C will immediately trigger a synchronization pulse and cause the camera to start capturing the first frame. Therefore, before sending it, set your running frame counter to zero so that the LED blobs’ bits will be sorted into their correct binary places. Without doing anything else, the LEDs will automatically turn off again after 10 seconds. To prevent that, keep sending keep-alive HID feature reports (0x11) at regular intervals. I was wondering why the DK2 has a new report doing the same thing as report 0x08 on the DK1, and the answer is that 0x11 keeps both the IMU and the LEDs going. Just make sure to set the keep-alive interval in report 0x11 to 10000 milliseconds, and don’t forget to send another report not more than, say, 9 seconds later. That’s it. Do not send additional 0x0C reports; this appears to sometimes confuse the LED control logic, causing it to miss a frame or switch to an entirely different blinking pattern. Bad news.
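The keep-alive timing can be handled by a small helper that you poll from your main loop. This is only a sketch: `send_keepalive` is a hypothetical callable that writes report 0x11 with a 10000 millisecond interval (the report’s byte layout is not covered here), and the 9-second resend interval is the one suggested above.

```python
import time


class KeepAliveScheduler:
    """Calls send_keepalive on the first poll and then every resend_interval_s seconds."""

    def __init__(self, send_keepalive, resend_interval_s=9.0, clock=time.monotonic):
        self._send = send_keepalive
        self._interval = resend_interval_s
        self._clock = clock
        self._last_sent = None

    def poll(self):
        """Send a keep-alive if one is due; return True if one was sent."""
        now = self._clock()
        if self._last_sent is None or now - self._last_sent >= self._interval:
            self._send()
            self._last_sent = now
            return True
        return False
```

Calling `poll()` once per video frame is more than often enough; the injectable `clock` argument is there only to make the helper easy to test.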

If you notice that your LED IDs change over time, or that you lose identification entirely, your kernel camera driver is probably dropping frames. In that case, add a timer to your video frame reader function that calculates the arrival time delay between the previous and the current frame, and if that delay is larger than 25 ms, increment the running frame counter by 2 instead of 1. This will skip a bit in the identification algorithm, but that will be fixed by the Hamming distance corrector right away.
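A frame counter with that drop detection might look like the following sketch. The class and method names are invented for the example; the 25 ms threshold and the increment-by-2 rule are the ones described above, and arrival times are assumed to be in seconds.

```python
DROP_THRESHOLD_S = 0.025  # 25 ms, as suggested in the text


class FrameCounter:
    """Running frame counter that skips one index when a frame was probably dropped."""

    def __init__(self):
        self.count = 0
        self._last_arrival = None

    def on_frame(self, arrival_time_s):
        """Register a frame arrival and return the frame index to use for it."""
        if self._last_arrival is not None:
            delay = arrival_time_s - self._last_arrival
            # A gap longer than the threshold is treated as one missed frame.
            self.count += 2 if delay > DROP_THRESHOLD_S else 1
        self._last_arrival = arrival_time_s
        return self.count
```

The returned index, taken modulo 10, selects which bit of each blob’s pattern the current frame updates. Note that this only accounts for a single dropped frame per gap, which matches the behavior described above.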

To turn the LEDs off again, first write a feature report 0x0C 0x00 0x00 0x00 0x00 0x5E 0x01 0x1A 0x41 0x00 0x00 0x7F, then write a feature report 0x02 0x00 0x00 0x20 0x13 0xE8 0x03 (note the 0x13 instead of 0x01 in byte 4). I don’t know yet what the 0x02 reports actually do, but the official run-time sends them, so why not.

Nice hack! you can use solvePnPRansac from OpenCV for the pose estimation or solvePnP if you don’t have any erroneous matchings.

Hey Oliver, do you have plans to release your latest work like w/ the 0.1 tarball?

Yes, but unfortunately I won’t be able to just tar up all the sources and put them on my web server like I did with the small utility to extract 3D LED coordinates from firmware. That one was mostly self-contained, but for this further work I had to use the firepower of the fully armed and operational Vrui VR toolkit, which (unfortunately) is between releases right now. The code I wrote uses features of the unreleased Vrui-3.2, and that’s not in a packageable state yet. I’ll get the tracking code out with Vrui-3.2, which should hopefully be very soon.

If this is a BCH ECC code, then it’s m=4, n=15, k=11, t=1: a 15-bit codeword with 11 bits of data and 4 bits of ECC that can correct 1 bit flip. Determining how they’ve juggled their bits is a bit confusing.

At least figuring out which bits in LED data were the data bits and which bits were the ECC bits was easy, the mask:

11 1011 0100

covers the data bits (6 bits). The mask:

00 0100 1011

covers the ECC bits. I’m not sure of a simple algorithm to figure out how the bits are swizzled (and possibly XOR’d) into and out of a codeword though. If anyone has time, here’s a full ECC codeset for (15, 11, 1). The first 4 bits are ECC, the last 11 bits are data:

http://pastebin.com/TeC7kZY7

Are you sure you are listing the LEDs in the right order?

0010 000000

0001 000001

0001 000110

0010 000111

1000 000010

1011 000011

1011 000100

0011 001010

0000 001011

0011 001101

1001 001000

1010 001001

1010 001110

1001 001111

0000 010100

1000 010001

0100 000101

0110 001100

0111 010000

0100 010011

0110 010110

1101 010010

1110 010101

0100 011000

0111 011011

0101 011101

1110 011010

1111 011100

1100 011111

1000 100100

0001 101100

0001 110000

0010 110001

0011 110110

0000 110111

1001 110011

1011 110101

0000 111010

0010 111100

1011 111000

The above takes the data bits from the LED code words you posted and reverses the bit order. The bits on the left are the ECC bits in their original order. It is interesting to note that, once sorted, the numbering goes 0-22,24,26-29,31,36,44,48-49,51,53-56,58,60

The first code word with its data bits all zero doesn’t fit into BCH as its ECC bits are not all zero.

I don’t think it is a BCH code. Not only that, I think it’s not even a linear code.

“The first code word with its data bits all zero doesn’t fit into BCH as its ECC bits are not all zero.”

Even stranger than that is that 0000000010 _is_ a codeword. If the code was a linear code (like Hamming, Reed-Muller, Hadamard, BCH, Reed-Solomon, Golay, …) the existence of this codeword would force a Hamming distance of 1.

(All linear codes have the property that the sum (bit-wise XOR) of two codewords is also a codeword. If there is a codeword with only one “1” bit, adding any other codeword will always create a codeword where only one bit is different. E.g. 101010 + 000001 = 101011)

Excellent point. Didn’t occur to me, or I wouldn’t have wasted my time looking for a way to shuffle the bits into a Hamming code pattern.

This is true, but only if some XOR mask has not been applied to the codeword after generation. This likely reduces the problem space for valid XOR codes greatly though.

Ah, I’ve taken that to its conclusion.

x ^ mask = original keyword.

x0 ^ mask = cw0

x1 ^ mask = cw1

cw0 ^ cw1 = cw2

x0 ^ mask ^ x1 ^ mask = cw2

x0 ^ x1 = cw2

What the heck are they doing?

Yup, I’m going with 100% hand rolled/randomly chosen values that work. While in my list, the missing value 010111 has two possible ECC codes (1000 and 1010), the missing value 011001 has no valid ECC codes. I’ll demonstrate. Here are the values that differ from 011001 by only one bit along with their ecc codes:

???? 011001

0010 011000

0111 011011

0101 011101

1000 010001

1010 001001

???? 111001

The new ECC code must be two bit flips away from each of the ECC codes above for the total hamming distance to be 3. I’ll write out the possible codes along with which code is only one bit flip away:

0000 – 0010

0001 – 0101

0010 – (already above)

0011 – 0111

0100 – 0101

0101 – (already above)

0110 – 0111

0111 – (already above)

1000 – (already above)

1001 – 1000

1010 – (already above)

1011 – 1010

1100 – 1000

1101 – 0101

1110 – 1010

1111 – 0111

Nice work.

You mention in the video that it can take 10 frames to identify an LED (as you have to acquire all 10 bits). Would it be possible to target particular LEDs as ‘guide points’ and use these, with a known association of nearby LEDs, to speed up capture?

I.e., using ‘0x2AA’ as the center marker, you could code the other numbers so that this one is recognisable within a few frames.

See the next post in the series, Hacking the Oculus Rift DK2, part III.

I understand how you are now using the ‘known wireframe’ along with pose information to predict the location of the LEDs and thus enable quicker identification, however you still have a ‘jump’ problem when the whole unit moves to a new location…. Fusion with MEMS sensors will help, but probably not totally.

There would also be the situation where the headset did not move, but all LEDs were occluded by (say) a person walking between camera and headset.

By using an alternating pattern (1010101010b) for one or more LEDs, you’d be able to identify these LEDs within 3 frames (if all other LEDs used double bits of 00 or 11 in their numbers).

This would allow a crude pose to be computed quickly, and then make the location of the other LEDs roughly known.

Hello,

Really nice work. I was wondering how you assign the 0 and 1 bits for each LED in the first frame, and could you explain how you find the 10-bit pattern within the first 10 frames? The blog clearly explains the processing after the first 10 frames.

Thank You

Hello, you used OpenCV to identify the LEDs; do you mind sharing the code with me? I’d really like to make something “similar” (yet smaller) in Python, reading a blinking LED and counting its frequency to observe a device and its status via camera.

Thx and best regards

Martin

No, I did not use OpenCV for this. But the code for this project is on GitHub; there’s a link in part IV.