Yesterday, I attended the second annual Silicon Valley Virtual Reality Conference & Expo in San Jose’s convention center. This year’s event was more than three times bigger than last year’s, with around 1,400 attendees and a large number of exhibitors.

Unfortunately, I did not have as much time as I would have liked to visit and try all the exhibits. A printing problem at the registration desk in the morning pushed the keynote and first panel back by 45 minutes, into the expo time; additionally, I had to spend some time preparing for and participating in my own panel on “VR Input” from 3pm to 4pm.

The panel was great: we had Richard Marks from Sony (PlayStation Move, Project Morpheus), Danny Woodall from Sixense (STEM), Yasser Malaika from Valve (HTC Vive, Lighthouse), Tristan Dai from Noitom (Perception Neuron), and Jason Jerald as moderator. There was lively discussion of questions posed by Jason and the audience. Here’s a recording of the entire panel:

One correction: when I said I had been following Tactical Haptics’ progress for 2.5 years, I meant to say 1.5 years, since the first SVVR meet-up I attended. Brainfart.

But with all that, I still had the opportunity to try some devices and applications that I hadn’t before.

Perception Neuron

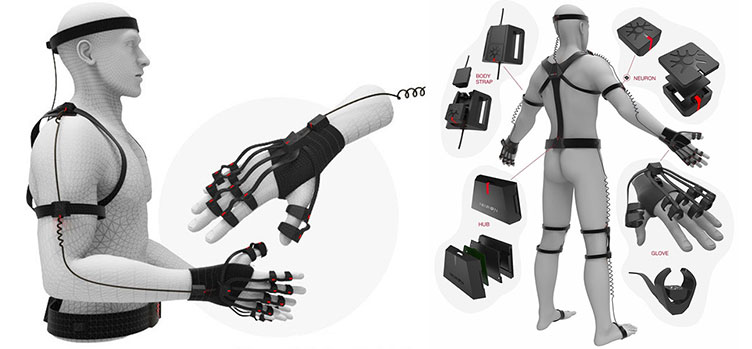

Figure 1: Overview of the Perception Neuron inertial-based motion capture / VR input system. The system demonstrated at SVVR differed slightly in detail; for example, it had a closed glove.

Perception Neuron (PN) is a modular motion capture system based on a network of small inertial navigation units (“neurons”), and works on the same principle as the PrioVR suit I watched closely last year: by attaching one neuron to each bone along an extremity (such as upper arm, lower arm, back of the hand, tip of each finger), the software can calculate the position of each neuron relative to the “fulcrum” neuron at the root of the chain using forward kinematics. This is not true positional tracking, as the position of the fulcrum neuron is fixed, and the overall orientation of the skeleton can drift around the fulcrum (as it noticeably did during my demo). PN can track an entire body (with the fulcrum on the hips), but the demo system tracked only the right arm, with the shoulder as the fulcrum.
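To make the forward-kinematics principle concrete, here is a minimal sketch. It assumes that each neuron reports its segment’s orientation as a unit quaternion in a shared world frame, and that each segment’s rest-pose bone vector is known from calibration; the function names and the bone lengths in the usage example are my own illustration, not Perception Neuron’s actual software. Each joint position is the fulcrum position plus the sum of all rotated bone vectors up the chain, so the result can never be better than the fixed fulcrum position and the per-sensor orientations.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def rotate(q, v):
    """Rotate 3D vector v by unit quaternion q, computed as q * v * conj(q)."""
    w, x, y, z = q
    p = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return p[1:]

def forward_kinematics(fulcrum_pos, orientations, bone_vectors):
    """Return the fulcrum position followed by the end position of every segment.

    fulcrum_pos:  fixed position of the root neuron (e.g., the shoulder); never measured
    orientations: per-segment world-frame orientations as unit quaternions (assumed IMU output)
    bone_vectors: per-segment rest-pose bone vectors (hypothetical calibration data)
    """
    current = tuple(fulcrum_pos)
    positions = [current]
    for q, bone in zip(orientations, bone_vectors):
        offset = rotate(q, bone)  # where this bone points right now
        current = tuple(c + o for c, o in zip(current, offset))
        positions.append(current)
    return positions
```

For example, with the shoulder at the origin, a hypothetical 0.3 m upper arm rotated 90 degrees about the z axis, and an unrotated 0.25 m lower arm, the elbow ends up at (0, 0.3, 0) and the wrist at (0.25, 0.3, 0). A yaw drift shared by all sensors rotates every bone vector by the same amount, which swings the entire chain around the fulcrum.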

The system is very responsive: the developers claimed 8ms latency when it is wired to the driving computer, rising to 25ms over wireless. The system is also very sensitive, reflecting even small motions of the fingers or the hand. That said, the system is not very accurate. The pose of the virtual arm did not always match my real arm, and I think it got worse over time due to drift. The fingers in particular often ended up in unnatural poses, such as bending backwards. Granted, there was only a brief calibration process at the beginning of the demo, and the host who fitted my “suit” was inexperienced.

The main issue with using PN as a VR input device instead of a motion capture tool, however, is that it has no built-in way to generate events, i.e., it has no buttons. In order to trigger actions in an application, the software has to guess the user’s intent from the current pose. For example, the demo application had a lot of small objects either floating or sitting on assorted surfaces. To grab an object, the user had to reach out (needing to consciously aim the virtual hand at the object due to lack of positional tracking) and then make a fist. To release an object, the user had to open the hand, as expected, but PN’s notions of “fist” and “open hand” did not necessarily line up with mine, which made interaction quite frustrating. Objects often stuck to my hand even when I opened it as wide as possible, or got dropped randomly.

This problem does not only affect PN; I have seen it in all hand pose-based VR input devices, from data gloves to optical hand tracking à la Leap Motion. Pressing a button (or closing a contact pad between two fingers on a pinch glove) is an unambiguous action with immediate, zero-latency real-world feedback. But detecting a pinch or grab from fingertip positions always requires some fudge factors, and if the user’s hand pose happens to fall outside those and the user can’t grab or release an object when desired, the flow of interaction breaks down. Even a relatively low percentage of missed or spurious events, say 5%, can quickly frustrate a user.
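One common way to soften, though not eliminate, the fudge-factor problem is hysteresis: separate thresholds for grabbing and releasing, so that a hand hovering near a single threshold does not produce a stream of spurious grab/release events. The sketch below assumes a hypothetical per-frame “curl” value between 0 (open hand) and 1 (tight fist) derived from the finger poses; the thresholds are made-up values, not ones used by any of the devices above. The second function shows why even a small error rate adds up, assuming events fail independently.

```python
class GrabDetector:
    """Turns a continuous hand-closure value into discrete grab/release events using hysteresis."""

    def __init__(self, grab_threshold=0.7, release_threshold=0.3):
        if not 0.0 <= release_threshold < grab_threshold <= 1.0:
            raise ValueError("need 0 <= release_threshold < grab_threshold <= 1")
        self.grab_threshold = grab_threshold
        self.release_threshold = release_threshold
        self.grabbing = False

    def update(self, curl):
        """Feed one frame's curl value (0 = open hand, 1 = fist); return 'grab', 'release', or None."""
        if not self.grabbing and curl >= self.grab_threshold:
            self.grabbing = True
            return "grab"
        if self.grabbing and curl <= self.release_threshold:
            self.grabbing = False
            return "release"
        return None  # between the thresholds the previous state is kept, which suppresses flicker


def p_at_least_one_failure(per_event_failure, n_events):
    """Probability of at least one missed or spurious event among n independent events."""
    return 1.0 - (1.0 - per_event_failure) ** n_events
```

At a 5% per-event failure rate, `p_at_least_one_failure(0.05, 20)` comes out at roughly 0.64, i.e., a user performing ten grab-and-release cycles (twenty events) would run into at least one missed or spurious event about two times out of three. Hysteresis only reduces flicker around the thresholds; it does nothing for the case where the user’s idea of a fist simply never crosses the grab threshold.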

The problem was made somewhat worse here because the fingers were not fully tracked. According to the main developer, the software intentionally ignored the spreading angle between the fingers to get around drift problems in the demo booth, and this manifested as large discrepancies between real and virtual when I tried touching the tip of my thumb against the other fingers. I could feel the touch in the real world, but in the virtual world, the fingertips remained up to several inches apart. This would most probably have been improved by more thorough calibration at the start of the demo.

In conclusion, I think PN needs to be combined with a reliable method to cause events, such as buttons or contact pads on all fingertips, before it can be used as an effective VR input device.

Update: The Perception Neuron developers contacted me regarding some bugs in the demo software I saw, which were fixed for the second day of the Expo. Grabbing and releasing objects worked more reliably after the fix, and so did throwing objects (which didn’t work at all when I tried). The drift issues remain.

Sony Project Morpheus

Sony demonstrated a new version of their Project Morpheus headset, which I had tested the year before. PM Mark 2’s most important update is a new screen: 1920×1080, OLED, 120 Hz. The new screen looks great, with no motion blur that I could see, and can be driven by PS4 games in one of two ways: either natively at 120 Hz, or, to give graphically complex games more breathing room, at 60 Hz, with Sony’s own version of timewarp converting the signal to 120 Hz via orientation-only reprojection.

This method works very well in practice. The first demo I tried, which showed a small-scale diorama (basically a dollhouse inhabited by tiny robots), ran in upsampled mode, and there were only very minor artifacts due to head motion. Only when leaning in very close to an object and moving the head quickly from side to side was there barely noticeable judder. Upsampling was slightly more evident for fast-moving objects: as reprojection only updates the rendering based on head orientation, objects only change their positions and orientations relative to the entire VR scene at the game’s real rendering rate of 60 Hz. But this effect is probably not noticeable unless one is looking for it.
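The core of orientation-only reprojection can be sketched in a few lines. This is a generic timewarp-style mapping under my own assumptions (pinhole projection onto the plane z = -1, head orientations given as 3×3 head-to-world rotation matrices, yaw-only rotations in the example), not Sony’s implementation: for each pixel of the 120 Hz output frame, take its view ray at display time, rotate it into the head frame the 60 Hz image was rendered with, and look up where it lands in that image.

```python
import math

def yaw_matrix(angle):
    """Rotation about the vertical (y) axis; positive angles turn the view to the left."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def reproject(x, y, render_orientation, display_orientation):
    """Map an output-frame image-plane point to its location in the already-rendered frame.

    (x, y) are normalized image-plane coordinates on the plane z = -1 (the camera looks down -z).
    Orientations are 3x3 head-to-world rotation matrices. Returns None if the ray
    points behind the rendered image plane, i.e., there is no source pixel to sample.
    """
    ray_display = [x, y, -1.0]                                        # view ray at display time
    ray_world = mat_vec(display_orientation, ray_display)             # into world space
    ray_render = mat_vec(transpose(render_orientation), ray_world)    # into render-time head space
    if ray_render[2] >= 0.0:
        return None
    return (ray_render[0] / -ray_render[2], ray_render[1] / -ray_render[2])
```

Because only head orientation enters the mapping, everything in the rendered image, including animated objects, is shifted rigidly: objects themselves still only move at the game’s 60 Hz rendering rate. Head translation is ignored entirely, which would probably explain the slight judder I noticed when leaning in close and moving my head from side to side.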

This brings me back to a paper I published back in 2003, where I posited that VR needs very low latencies and high frame rates for head tracking (for the well-known reasons), but that the latency and frame rate requirements for animation inside the VR scene are much less strict, because, while animations may appear choppy, they do not cause simulator sickness. This reasoning does not apply, however, if the entire VR scene is moving around the viewer, as during walking or flying navigation. It will be interesting to see how well Sony’s upsampling method performs for, say, racing games.

In this first demo, Sony used a regular PlayStation controller for input, but made interesting use of its 3D tracking capabilities (provided by the same PS4 Eye camera that tracked the headset). The controller was represented in the VR scene as a lifelike replica with reactive controls, i.e., the analog sticks and buttons in VR moved in sync with the real ones. The demo also used the controller to show off spatial binaural audio: by pressing a button, the controller became a boombox, and the apparent sound source followed the controller well through 3D space. While this was a neat demonstration, a two-handed controller does not make a good device for natural VR interaction because it forces the user’s hands together, and the controller’s tracking was not as good as the PS Move’s.

The second demo was a stationary shooting game using a pair of PS Move controllers to bring the user’s hands into the virtual space at 1:1 scale. The controllers were represented as virtual hands, and could be used to interact with the environment by, e.g., opening and closing drawers or picking up and throwing objects. After I picked up a gun found in one of the drawers, whichever controller was holding the gun could be used to shoot by aiming along the virtual gun’s iron sights. The PS Move controllers impressed again with accurate, stable, and low-latency tracking. Target shooting was completely intuitive and accurate. The gun could be reloaded by pressing a button on the controller holding it to release the empty magazine, then picking up a fresh magazine with the other controller and sliding it into the magazine well. That’s how you do interaction in VR.

The only issue with the second demo was that the positional tracking space from the single camera was a tad too small for the size of the demo booth. The game was optimized for the limited space in that the desk behind which I was supposed to take cover fit exactly into the tracking volume, but I was not able to peek around the sides of the desk. The demo had no visual indication for leaving the tracking space, so it was quite disruptive the few times it happened.
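A visual warning for the tracking boundary does not take much code. The sketch below is my own illustration, not anything from Sony’s SDK; it assumes a simplified axis-aligned box as the tracking volume (a single camera’s real tracking volume is closer to a truncated pyramid) and a made-up fade margin. The returned value could drive, for example, a fade to a neutral color as the tracked head approaches the edge.

```python
def boundary_warning(position, box_min, box_max, margin=0.15):
    """Return a warning intensity in [0, 1] for a tracked point near the edge of the tracking volume.

    The volume is simplified to an axis-aligned box (an assumption); margin is a
    made-up distance (in meters) over which the warning fades in.
    """
    # Distance to the nearest box face while inside; zero or negative once outside.
    distance = min(min(p - lo, hi - p) for p, lo, hi in zip(position, box_min, box_max))
    if distance >= margin:
        return 0.0  # comfortably inside: no warning
    if distance <= 0.0:
        return 1.0  # at or beyond the boundary: full warning
    return 1.0 - distance / margin  # linear ramp inside the margin
```

A linear ramp is the simplest choice; anything that makes the boundary visible before tracking is actually lost would have avoided the abrupt breaks I experienced.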

All in all, Project Morpheus’ newest iteration, when combined with a pair of PS Move controllers, is a great VR system. My only minor complaint concerns the screen/lens assembly, which is held in front of the user’s face only by the top head strap. While that means users won’t get “VR welts” on their faces, it also means that the screen wobbles slightly in front of the viewer’s eyes during quick movements. I personally prefer the Oculus Rift’s head straps.

OSVR HDK

Open Source Virtual Reality (OSVR) were demonstrating version 1.1 of their “OSVR Hacker Dev Kit”. OSVR aims to create an open-source platform unifying all kinds and brands of VR displays and input devices, and the HDK is their low-cost introductory HMD, intended to let developers start working on OSVR-compatible applications. In terms of basic features, the HDK sits somewhere between Oculus Rift DK1 and DK2 (but closer to DK1). It features a 1920×1080 OLED screen running at 60 Hz and inertial-based orientational tracking, but no positional tracking (meaning, unlike Oculus Rift DK2 and later, Sony Project Morpheus, and HTC Vive, it is not a holographic display).

What differentiates the HDK from the Oculus line of HMDs are its multi-element lenses with very low radial distortion and chromatic aberration, and the ability to manually adjust the lens separation and lens-screen distance to account for viewers’ inter-pupillary distance and to correct for viewers’ vision.

Those are important alternative paths to explore, but the main question is: how well does it work in practice? Not all that well, unfortunately. My first impression was that the headset was less comfortable to wear than Oculus ones. The face rim has a smaller radius of curvature and felt tight on my face, and the foam padding felt coarser than that on DK1 or DK2, and a bit scratchy. But once on the face, it sat in place without shifting (I did have VR welts after taking it off, though).

Next I tried adjusting the lenses, and ran into the first serious issue. The lenses are not adjusted via a thumbwheel as in Samsung/Oculus’ GearVR; instead, they are loosened from the screen enclosure via two thumbscrews, moved around by pushing the screws, and locked in place again. It was very fiddly to get them to a position where I could see the entire screen, and then to keep them in that position while re-adjusting their distances to the screen so I could see clearly, and I’m afraid I ended the procedure with the lenses slightly rotated out of alignment with the screen plane. Granted, this is a hacker dev kit, but I don’t think this is the way to approach adjustable lenses.

Once I had the lenses in place, I noticed a related problem. There was a good amount of dust on the screen, with individual specks obscuring one or more pixels each. I realized this was probably due to the open design (no pun intended), which was necessary to allow the lenses to be moved for adjustment. Users will have to be careful to store their HDKs in a way that no dust gets in, and should keep a can of compressed air handy.

Ignoring the dust, the screen looked good, with little chromatic aberration around the periphery, and no noticeable radial distortion (there was some very minor bending almost all the way out, but I had to look really hard to find it). I looked closely at the screen through the lens adjustment cut-outs, and am fairly sure there is no software lens distortion correction. So the lenses really do work as advertised. It is hard to judge without side-by-side comparison, but field-of-view looked to be about on par with Oculus Rift DK1/DK2.

The biggest issue with the OSVR platform as a whole was the software. The booth attendants blamed it on a packaging mistake (the demo was allegedly linked against the wrong SDK version), but the display latency was way out there, on the order of hundreds of milliseconds. This made the demo I tried, a 3D racing game, completely unplayable and nausea-inducing (I had to take the headset off after a few minutes). It also means I couldn’t check for motion blur, because I didn’t dare move my head around very much. I have written drivers for IMU-tracked headsets before, and I have no idea how they managed to extend the latency this much. Must be quite some bug. Besides that, there was a mismatch between the headset’s real field-of-view and the FOV value programmed into the demo. As a result, the VR scene warped under head rotation, which prevented it from feeling like a real space.

That last issue might be related to the adjustable lenses. There is no mechanism to measure the actual lens position and feed it back into the software, which would be necessary to calculate proper projection parameters. I’m assuming that the OSVR software has configurable IPD and lens-screen distance, but user calibration wasn’t part of the demos.

In conclusion, while the OSVR HDK can fulfill its purpose of developing OSVR-based applications, it is not a headset that anybody but hackers should use at this time. Even for its intended purpose, it is missing one big feature: positional tracking. Developers working with HDK might create applications that break when 6-DOF tracking is present on other OSVR-compatible headsets.

Meta 1 Developer Kit

The Meta 1 Developer Kit is a see-through augmented reality headset, and a direct competitor to Microsoft’s HoloLens, albeit one that is inferior in almost every way. The biggest differences are the much higher tracking latency (felt like >100ms), and the complete lack of positional tracking (which are, not coincidentally, the two things that surprised me most about HoloLens). In practice, the difference in tracking quality manifests in virtual objects in the Meta 1 vaguely floating somewhere in front of the viewer, whereas in HoloLens they are locked to a fixed position in space, or to a real object.

Among the smaller differences are the optics (the two have similar fields-of-view for the AR overlay, but the HoloLens has a much wider see-through area around the AR screen; the HoloLens’ AR screen looks sharp everywhere, whereas the Meta 1’s gets dodgy in the periphery) and the Meta 1’s apparently lower resolution (960×540).

The one area where Meta 1 is ahead is interaction. Unlike HoloLens, Meta 1 tracks the position of the user’s hands, meaning that virtual objects can be manipulated by grabbing and dragging them, instead of by selecting them via “air tap” and dragging them by moving the head. The current software does not track hand orientation, so grabbed objects can be translated but not rotated, but I liked this approach a lot better than HoloLens’ (which doesn’t allow simultaneous translation and rotation either).

In conclusion, having tried Meta 1 makes me appreciate HoloLens quite a bit more.

Jaunt VR

Last but not least, I got to try, and pay close attention to, live-action full-sphere stereoscopic video, via Jaunt VR’s viewer running on GearVR. I have said previously that live-action full-sphere stereoscopic video has fundamental issues, and having had a very close look at it now, I can confirm that. In a nutshell, the videos I saw (a recording of a Paul McCartney concert and an alien DJ set in a back alley) did not seem real to me. It felt “off” in a way I can’t put my finger on, and I did not get the feeling of being in a real place, as I did, for example, in Sony’s Project Morpheus demos (or in my own systems, for that matter). I don’t quite know how to describe it. There was depth in the videos (I alternately closed each eye to check for stereo disparity, which was there, and objects or subjects seemed to be at different depths), but the parts didn’t add up to a coherent whole.

In addition, there was annoying eye strain. I was expecting serious problems while tilting my head, because baked-in stereo only works as long as the line connecting the viewer’s pupils is parallel to the fixed stereo separation line of the video, but even while holding my head perfectly level (as far as I could tell), I had the strong feeling that my eyes were looking in different directions in a non-natural way. It felt very similar to using my old eMagin Z800 3D Visor, which had vertically misaligned eye pieces. (And yes, once I tilted my head more than 30 degrees or so in either direction, stereo fell apart completely and I was left with double vision). I had previously used GearVR at last year’s Oculus Connect, and had no problems with the real-time rendered VR experiences. So I blame these issues on the content instead of the display. It is, of course, possible that the particular unit I was using was damaged. Unlike Oculus Connect, where the headsets were guarded and handled like crown jewels, here they were just lying around on chairs, ready to be picked up and abused in who knows what ways. It is also possible that I had some confirmation bias going in (based on my previous article), but I didn’t know that could cause eye strain. And apropos of nothing, black smear was still very evident in GearVR.
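The head-tilt problem follows from simple geometry. Assuming the video’s stereo baseline is horizontal and the viewer rolls their head by an angle θ around the viewing direction, a point with disparity d in the video shows up with a component d·cos θ along the line connecting the viewer’s pupils, and a component d·sin θ perpendicular to it, i.e., as vertical disparity, which our eyes are not built to fuse. A small sketch of that decomposition (the function name is my own):

```python
import math

def disparity_components(disparity, roll_degrees):
    """Split a horizontal baked-in stereo disparity into the parts seen by a head rolled by roll_degrees.

    Returns (along_eye_axis, perpendicular_to_eye_axis); the second part is vertical disparity.
    """
    roll = math.radians(roll_degrees)
    return disparity * math.cos(roll), disparity * math.sin(roll)

for roll in (0, 10, 30, 45):
    along, vertical = disparity_components(1.0, roll)
    print(f"roll {roll:2d} deg: {along:.3f} along eye axis, {vertical:.3f} vertical")
```

At 30 degrees of roll, half of every disparity turns into vertical disparity (sin 30° = 0.5), which fits with stereo falling apart for me at around that angle. This does not explain the strain I felt with my head level, though.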

I spent about ten minutes or so with the videos, trying to figure out what exactly was going on, and after taking the headset off, it took a minute of blinking and squeezing my eyes to get my vision back to normal. I was not impressed.

1. Great panel. Input is critical to VR and it’s been in a state of confusion as to which direction it’s headed.

It seems like the general sense, however, is that the Sony Move/SteamVR wand direction is the most sensible target for the first (consumer) generation of VR. You didn’t endorse this explicitly; what is your sense of the direction of things?

Oculus being absent from this panel may just be because they haven’t announced their “input” solution, but it seemed to me from comments by Nate Mitchell that they are really only targeting game controller-based experiences so far?

2. One thing that came up peripherally is the lack of desktop or productivity VR controllers.

By this I mean something that you would use at a desk while also using a keyboard. I’m thinking of CAD, Blender, or even coding a JS three.js experience.

You’ve had good success with two-handed controls, as you said, but what do you think of this missing piece? Or should we just put a SteamVR controller stand on either side of the keyboard and get on with the VR revolution?

1. I think 6-DOF tracked hand-held controllers are the best general-purpose devices for VR interaction we have right now, and for the foreseeable future until something completely new comes along. They lead to very intuitive interaction, and can generate events with 100% reliability and immediate tactile feedback. I have been using them as bread-and-butter input devices since the first day I started working in VR. Finger trackers, be they optical or inertial, have fundamental problems in that regard. My favorite VR input device is the Fakespace Pinch Glove, a glove with contact pads on the finger tips. It’s tracked via some external tracking system attached to the back of the hand, and the finger tips generate events.

2. In a desktop setup, it’s not so clear; the best input scheme is much more application- and use-dependent. My personal default setup on the desktop is keyboard/mouse “plus”, meaning in combination with a secondary device. For a large percentage of uses, just keyboard/mouse are fine, but if you need three-dimensional interaction, say translating and rotating 3D objects during modeling, mouse-based interfaces become too abstract and/or cumbersome. The next step up is a 3D desktop device, and my favorites are six-axis spaceballs, such as Logitech’s SpaceNavigator. They allow the user to translate and rotate objects simultaneously and intuitively. The method is: mouse to select, spaceball to move.

If that doesn’t cut it, you’ll have to use full 6-DOF devices. On the desktop, my go-to is Razer Hydra. It’s not very accurate, but in the constrained setting it fits the bill. For example, the Nanotech Construction Kit requires precise and quick 3D dragging operations, and a spaceball doesn’t support those. The Hydra is the perfect tool, especially because it can be used by both hands at the same time.

The bottom line is that, on the desktop, you’ll have to pick your input device based on what you want to do.

This is a topic deserving its own 4,000-word article (one that I’ve been wanting to write for a long time).
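The “mouse to select, spaceball to move” scheme from the reply above boils down to integrating the device’s six axis deflections into the selected object’s position and orientation every frame, which is what makes simultaneous translation and rotation possible. A minimal sketch, assuming the driver delivers normalized deflections (tx, ty, tz, rx, ry, rz) per frame; the function names and speed constants are hypothetical, and reading the actual device is out of scope:

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def normalize(q):
    """Rescale a quaternion to unit length to keep rounding errors from accumulating."""
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)

def rotation_vector_to_quat(rx, ry, rz):
    """Unit quaternion for a rotation of |(rx, ry, rz)| radians about the axis (rx, ry, rz)."""
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), rx * s, ry * s, rz * s)

def apply_spaceball(position, orientation, deflection, dt, trans_speed=0.2, rot_speed=1.5):
    """Integrate one frame of six-axis input into an object's pose.

    position: (x, y, z); orientation: unit quaternion (w, x, y, z)
    deflection: hypothetical normalized (tx, ty, tz, rx, ry, rz), each in [-1, 1]
    trans_speed (units/s) and rot_speed (rad/s) at full deflection are made-up defaults
    """
    tx, ty, tz, rx, ry, rz = deflection
    new_position = tuple(p + trans_speed * dt * t for p, t in zip(position, (tx, ty, tz)))
    step = rotation_vector_to_quat(rot_speed * dt * rx, rot_speed * dt * ry, rot_speed * dt * rz)
    # Pre-multiplying rotates the object about world-aligned axes through its own center.
    new_orientation = normalize(quat_mul(step, orientation))
    return new_position, new_orientation
```

Post-multiplying the incremental rotation instead would rotate the object about its local axes; which of the two feels more natural is a design choice.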

1. I was pretty inclined to take the Oculus VR as a baseline, but based on this panel and your comments I’m going to go ahead and dust off the Razer Hydra to move forward with my projects. Thanks.

2. This desktop problem is interesting and seems like a blind spot for a lot of the industry at the moment. I suspect that as people start wanting to do more work in VR, as resolution and stability improve, it will be a big focus.

I have a 3Dconnexion SpaceMouse and I find it pretty handy for moving my viewpoint. It really suffers from the joystick problem, however. By this I mean precision issues, even with practice.

It isn’t precise enough in any plane, for me, to actually produce output worth looking at.

It’s interesting how useful the mouse still ends up being in Blender, as that smooth plane you can press against with your forearm allows the precision you need to produce things.

The control problems that first-person shooters reveal with the mouse (very good) versus the console controller (objectively bad in competition) are reflected in the same issues when you are trying to place vertices, etc.

I see the Blender guys are starting to do some work on stereoscopic output and HMDs, so perhaps soon there will be a good general test environment for everyone to really tease some of these issues out.

Sorry, I actually meant “SteamVR controllers as a baseline”.

Cheers
