Since Microsoft’s Build 2015 conference, and increasingly since Microsoft’s showing at E3, everybody (including me) has been talking about HoloLens, and its limited field of view (FoV) has been a contentious topic. The main points being argued (fought) about are:

- What exactly is the HoloLens’ FoV?

- Why is it as big (or small) as it is, and will it improve for the released product?

- How does the size of the FoV affect the HoloLens’ usability and effectiveness?

- Were Microsoft’s released videos and live footage of stage demos misleading?

- How can one visualize the HoloLens’ FoV in order to give people who have not tried it an idea of what it’s like?

Measuring Field of View

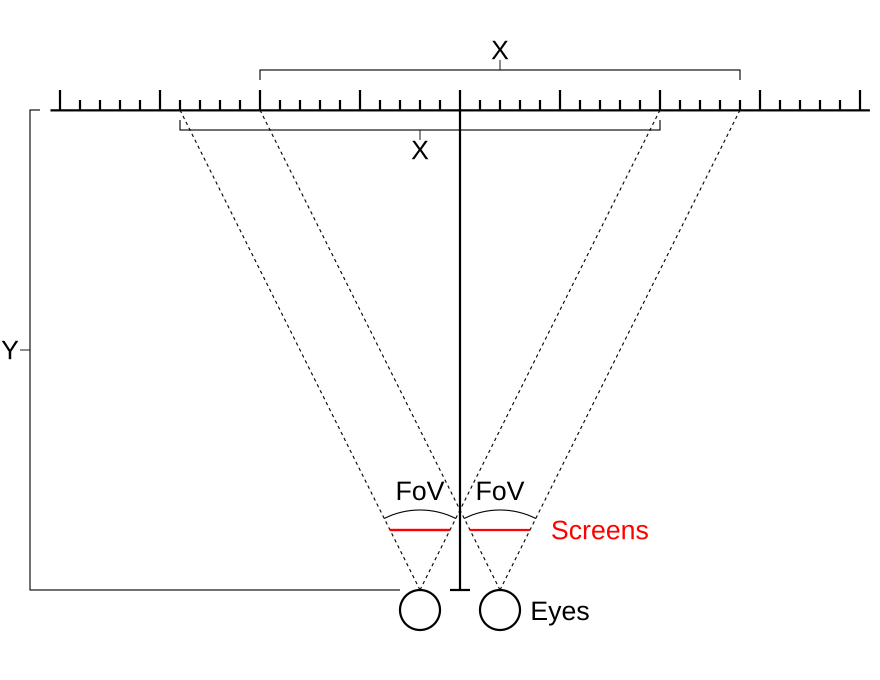

Initially, there was little agreement among those who experienced HoloLens regarding its field of view. That’s probably for two reasons: one, it’s actually quite difficult to measure the FoV of a head-mounted display; and two, nobody was allowed to bring any tools or devices into the demonstration rooms. In principle, to measure see-through FoV, one has to hold some object, say a ruler, at a known distance from one’s eyes, and then mark where the apparent left and right edges of the display area fall on the object. Given the distance X between the left and right markers and the distance Y between the eyes and the object, the FoV follows from simple trigonometry: $\mathrm{FoV} = 2\arctan\left(\frac{X}{2Y}\right)$ (see Figure 1).

Figure 1: Calculating field of view by measuring the horizontal extent of the apparent screen area at a known distance from the eyes. (In this diagram, $\mathrm{FoV} = 2\arctan\left(\frac{6''}{2 \times 6''}\right) \approx 53.13^\circ$.)
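The Figure 1 calculation is simple enough to script. The Python sketch below is illustrative only (the function name `fov_from_ruler` is made up for this example); it takes the marker separation X and the eye-to-ruler distance Y in any consistent unit and returns the FoV in degrees.

```python
import math


def fov_from_ruler(x: float, y: float) -> float:
    """Field of view in degrees from a ruler measurement.

    x: distance between the left and right edge markers on the ruler
    y: distance from the eyes to the ruler (same unit as x)
    """
    # Each half of the FoV is the angle whose tangent is (x / 2) / y.
    return math.degrees(2.0 * math.atan(x / (2.0 * y)))


# Figure 1: a 6" span seen from 6" away
print(f"{fov_from_ruler(6.0, 6.0):.2f} degrees")  # prints "53.13 degrees"
```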

In case no foreign objects are allowed, one can use parts of one’s body. An easy way is to hold out one’s arms directly along one’s line of sight, stretched as far as possible, and then hold the hands, with fingers splayed as widely as possible, along the horizontal axis of the display area. The reason to do this is that stretched arms and splayed fingers make for reproducible measurements, albeit ones in “personal” units; those can be converted into standard units afterwards by measuring the same distances with a ruler and the help of an assistant. Using this approach, I measured the HoloLens’ FoV to be 30°×17.5° (an aspect ratio of roughly 16:9), as the display appeared 1.75 hands wide and 1 hand tall (see Figure 2).

Figure 2: Measuring the HoloLens’ augmentation field of view by covering it with splayed hands at outstretched arms. The brighter rectangle in the center of the image corresponds to the area in which virtual objects (“holograms”) can appear. It is approximately 30°×17.5°.
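The hand-based method uses the same trigonometry once the “personal” units are calibrated. In this sketch the hand span and arm reach are made-up calibration values (assumptions, not the author’s actual measurements), picked so that one hand covers about 17.5°; with real numbers measured using a ruler and an assistant, the same conversion applies.

```python
import math

# Hypothetical calibration values; replace them by measuring one's own
# splayed hand and outstretched arm with a ruler afterwards.
HAND_SPAN_M = 0.20   # thumb tip to little-finger tip, fingers splayed
ARM_REACH_M = 0.65   # eyes to the outstretched hand


def fov_from_hands(hands: float, span: float = HAND_SPAN_M,
                   reach: float = ARM_REACH_M) -> float:
    """FoV in degrees covered by `hands` hand-widths held at arm's length."""
    extent = hands * span  # linear extent at the distance of the hands
    return math.degrees(2.0 * math.atan(extent / (2.0 * reach)))


width = fov_from_hands(1.75)   # display appeared 1.75 hands wide
height = fov_from_hands(1.0)   # and 1 hand tall
print(f"{width:.1f} x {height:.1f} degrees")  # prints "30.1 x 17.5 degrees"
```

Note that the hand counts are converted to a linear extent first and only then to an angle; simply multiplying the angle of one hand by 1.75 would slightly overestimate the result.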

Visualizing Field of View

Given that only a tiny number of people have hands-on experience with HoloLens so far, and that see-through AR in general is rather esoteric at this time, the next question is how to communicate the practical limits of current see-through AR technology to a large audience. The image in Figure 2 above doesn’t really do it, because it insets a known field of view into a photograph. A viewer would intuitively assume that the photograph represents the entire human visual field of about 200°×130°, which would make the inset appear quite large. But in fact the photograph was taken with a camera that has its own, quite narrow, field of view of about 50° horizontally, or about a quarter of the full horizontal human visual field.
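To see why the inset in Figure 2 looks deceptively large, one can compute how much of the photo’s width a centered 30° region occupies. This sketch assumes an ideal rectilinear (pinhole) camera with the roughly 50° horizontal FoV mentioned above; a real lens adds some distortion.

```python
import math


def inset_width_fraction(inset_fov_deg: float, photo_fov_deg: float) -> float:
    """Fraction of a rectilinear photo's width covered by a centered inset.

    In a pinhole projection, image-plane position is proportional to the
    tangent of the angle off the optical axis, not to the angle itself.
    """
    half_inset = math.radians(inset_fov_deg) / 2.0
    half_photo = math.radians(photo_fov_deg) / 2.0
    return math.tan(half_inset) / math.tan(half_photo)


photo_share = inset_width_fraction(30.0, 50.0)  # share of the photo's width
visual_share = 30.0 / 200.0                     # share of the ~200 deg visual field (by angle)
print(f"{photo_share:.0%} of the photo vs {visual_share:.0%} of the visual field")
# prints "57% of the photo vs 15% of the visual field"
```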

Another approach is to show the field of view of a head-mounted device from an outside, third-person perspective, which is of course impossible with a real AR device, as the images are only virtual. Fortunately, this kind of thing is easy to simulate using a large-scale VR display. I was able to hack virtual blinders into the Vrui VR toolkit, and use the CAVE to show, in a third-person video, a person using a variety of VR applications in two settings: using the full visual field, similar to how Microsoft presented its HoloLens videos and on-stage demos; and limited to the HoloLens’ estimated FoV of 30°×17.5°.

Figure 3: Still frame from “Living with Low Field Of View” video, showing a small sliver of a virtual globe floating in a CAVE VR display.

As is clear from the video (see Figure 3), the difference in appearance is rather vast. In all fairness, one has to ask: is this representation just as misleading as Figure 2, only in the other direction? After all, we don’t see in third person, and looking at a small cone or pyramid apex-on might look very different from looking at it from the side. While that may be the case (I don’t think it is, but won’t argue the point here), two facts remain: first, this visualization is at least quantitative, i.e., it allows a viewer to judge how large virtual objects can be at most to appear completely inside the display at any given distance, which is very important for AR UI design (see below); and second, while I was recording the video, and seeing the virtual objects from a first-person perspective, in stereo just as in a real HoloLens (see Figure 4), the experience felt exactly like my real HoloLens demo, down to the feeling of disorientation and the difficulty of working with virtual objects (also see below).
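The quantitative point above, how large a virtual object can be and still fit entirely inside the display at a given distance, follows from the same tangent relation. A minimal sketch, assuming the 30°×17.5° estimate, a flat object facing the viewer, and an object centered in the view (function names are illustrative):

```python
import math

H_FOV_DEG = 30.0   # estimated HoloLens horizontal FoV
V_FOV_DEG = 17.5   # estimated HoloLens vertical FoV


def max_visible_size(distance: float) -> tuple[float, float]:
    """Largest (width, height) that fits entirely inside the FoV at `distance`."""
    width = 2.0 * distance * math.tan(math.radians(H_FOV_DEG) / 2.0)
    height = 2.0 * distance * math.tan(math.radians(V_FOV_DEG) / 2.0)
    return width, height


def min_distance_for_height(height: float) -> float:
    """Closest distance at which an object of `height` fits vertically."""
    return height / (2.0 * math.tan(math.radians(V_FOV_DEG) / 2.0))


w, h = max_visible_size(2.0)
print(f"at 2 m: {w:.2f} m x {h:.2f} m")  # prints "at 2 m: 1.07 m x 0.62 m"
print(f"1.8 m tall object fits from {min_distance_for_height(1.8):.1f} m")
# prints "1.8 m tall object fits from 5.8 m"
```

Under these assumptions, a standing life-size figure (taken here as 1.8 m tall) would only fit vertically from almost 6 m away.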

For comparison and contrast, I also made another video showing the field of view of current-generation commodity VR headsets in the same way.

Limits on See-through AR Field of View

If the exact size of the HoloLens’ FoV is a point of contention, the reasons for its size are even more so. Based on a nonscientific sampling of the blogo- and forospheres (i.e., skimming some HoloLens blogs and threads on reddit), many people believe that the small FoV is a result of the HoloLens’ limited graphics processing power, and can therefore easily be improved in the future via more powerful GPUs. Where does this notion come from? For one, there is a common belief that increasing the field of view of a 3D graphics application leads to a (proportional) increase in per-frame rendering time; after all, if the FoV is larger, the graphics processor has to render more “stuff.” And while there are circumstances where this is somewhat true, such as games with explicit frustum culling, or GPU drivers that can cull complex geometric objects automatically, the effect is usually small as long as the final rendered image size in pixels stays the same. I don’t know this for a fact, but I wouldn’t be surprised to learn that many people believe the FoV of console games is generally lower than that of PC games due to consoles’ reduced graphics horsepower, when in fact it’s due to the typical viewing distance for console games (couch to TV) being larger than that for PC games (desk chair to monitor).

Anyway, in the case of HoloLens, the “low FoV due to low power” idea probably stems from the very early HoloLens prototypes that were shown to a select audience prior to Build 2015. Those differed from post-Build models in two main ways: first, they were not integrated, but were run from a bulky processor box the user had to wear like a backpack, i.e., a processing system that looked a lot more powerful than the Build and E3 prototypes; and second, they had a larger field of view. One mix-up of correlation and causation later, the conclusion is drawn.

I, on the other hand, am convinced that the current FoV is a physical (or, rather, optical) limitation instead of a performance one. To the best of anyone’s knowledge, HoloLens uses a holographic wave guide to redirect imagery from the left and right display systems into the user’s left and right eye views (see this paper and this web site for a gentle introduction, and this paper for full details on a specific implementation), and such wave guides cannot bend incoming light by arbitrary angles. In fact, the maximum field of view achievable through holographic wave guides is directly related to the index of refraction (n) of the wave guide’s material, as described in this Microsoft patent. Concretely, the maximum balanced field of view for a wave guide made of material with an index of refraction of n=1.7 is 36.1°, and given that typical optical glass (crown or flint) has an index somewhere between n=1.5 and n=1.6, this matches my FoV estimate of 30° rather well. High-end materials with n=1.85 (which is close to the physical limit for glass) would have a balanced field of view of 47°. Diamond, which has n=2.4, would probably yield a comfortably large FoV, but given the required size of the waveguide, I imagine only Bill Gates could afford that.
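The dependence on n can be illustrated with a textbook idealization. This is not the formula from the Microsoft patent, which isn’t reproduced here; it is a simplified one-dimensional model that assumes guided light may travel at any angle between the total-internal-reflection critical angle and grazing incidence, so the usable band of sines in air is n − 1 wide. Because practical designs can’t use that whole angular range, this bound comes out larger than the patent figures quoted above (36.1° and 47°); read it as an illustration of the trend, not as a prediction.

```python
import math


def ideal_waveguide_fov_deg(n: float) -> float:
    """Idealized 1D 'balanced' FoV (degrees) for a grating-coupled waveguide.

    Simplified model (an assumption, not the patent's formula): light stays
    trapped by total internal reflection only while n*sin(theta_inside) > 1,
    and n*sin(theta_inside) can be at most n (grazing incidence). A coupling
    grating shifts sines by a constant, so the band of sines in air is n - 1
    wide; centering that band on the display axis gives the balanced FoV.
    """
    half_band = min((n - 1.0) / 2.0, 1.0)  # a sine can't exceed 1
    return math.degrees(2.0 * math.asin(half_band))


for name, n in [("crown glass", 1.5), ("flint glass", 1.6), ("n=1.7", 1.7),
                ("n=1.85", 1.85), ("diamond", 2.4)]:
    print(f"{name:12s} n={n:<5} ideal bound {ideal_waveguide_fov_deg(n):5.1f} deg")
```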

Given this information, it is possible that the first HoloLens prototype used extremely high-quality material to create a somewhat larger field of view, but that Microsoft decided to go with cheaper material, and a concomitant smaller FoV, for the second prototype and retail version. It is also possible that the first prototype used a different wave guide geometry that allowed a larger FoV at the cost of a larger and heavier overall system, and that Microsoft had to sacrifice FoV to achieve a marketable form factor.

Either way, it appears that there is no simple fix to increase FoV. It might require extensive R&D and maybe a completely different optical approach to increase FoV by significant amounts, let alone bring it to the same ranges achievable by VR headsets such as Oculus Rift. It seems it’s not just a matter of waiting for more powerful mobile GPUs.

(As an aside, it’s much easier to pull off large-FoV VR headsets because those don’t have the requirement to show an undistorted view of the real world through the screen.)

Field of View and Usability

If one accepts that HoloLens’ current FoV is very narrow, and that it will be hard to increase it by significant amounts in the near future, the next question is whether a small FoV negatively affects usability and user experience. Some argue that it won’t, but I disagree. I have used VR headsets with a somewhat comparable FoV, specifically eMagin’s Z800 3DVisor with a 45° diagonal FoV, and while those definitely create a feeling of tunnel vision, I noticed an important difference when I tried HoloLens.
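For reference, comparing a horizontal×vertical FoV with a diagonal figure like the Z800’s requires combining the two in tangent space rather than adding angles. A sketch assuming a flat, rectilinear image plane (the function name is illustrative):

```python
import math


def diagonal_fov_deg(h_fov_deg: float, v_fov_deg: float) -> float:
    """Diagonal FoV (degrees) of a rectangular, rectilinear field of view."""
    # Half-extents of the image rectangle on a plane at unit distance
    half_w = math.tan(math.radians(h_fov_deg) / 2.0)
    half_h = math.tan(math.radians(v_fov_deg) / 2.0)
    # The corner lies at distance hypot(half_w, half_h) from the center
    return math.degrees(2.0 * math.atan(math.hypot(half_w, half_h)))


print(f"{diagonal_fov_deg(30.0, 17.5):.1f} degrees diagonal")  # prints "34.3 degrees diagonal"
```

By this measure, the 30°×17.5° estimate corresponds to roughly 34° diagonally, somewhat narrower than the Z800’s 45°.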

In a narrow-FoV VR headset, the user might not be able to see all virtual objects at all times, and might have to move her head around a lot to compensate. That said, the user still knows where all relevant virtual objects are, even if they’re not currently in view. For example, if you place an interaction object or dialog box at some spot in virtual space and then look away, you will later be able to look back at the object without having to think about it. To me as a layperson, this seems like a practical result of object permanence: we learn very early on as infants that objects do not cease to exist when they temporarily move out of view, say when they are occluded by another object such as a wall (I have only just started learning that this is probably related to how the brain creates mental maps of environments). In a VR headset, the brain might interpret the “blinders” of the screen edges as physical objects, and react in the way it usually does.

In my HoloLens experience, on the other hand, this didn’t kick in. During the demo I noticed that I was feeling disoriented when working with virtual objects. For example, I was asked to place a control panel on the wall to my left, and use it to change the appearance of an object in front of me. But the moment I looked away from the panel and at the object, my brain immediately forgot where the panel was, and I got a sense of unease because my tools had just been taken away. And when I tried to look back at the panel, I didn’t find it immediately; I had to look around a bit until it happened to (partially) pop back into view. I didn’t really notice this at a conscious level at the time.

But when I added “virtual blinders” to the Vrui toolkit to simulate the HoloLens’ FoV using a VR display, I had the same experience, and a chance to think about it more. For example, in low-FoV VR, working with a pop-up menu might only show part of the menu at a time, but my brain would still accept that the menu’s top part exists, and have a good idea where it is (above the bottom part, that is). But in my simulated HoloLens, this didn’t happen. When searching for a top menu entry while currently looking at the bottom end, I had to consciously force myself to look up; it wasn’t automatic. By the way, here’s the video:

My hypothesis is the following: object permanence is based on objects being occluded by other objects. In a see-through AR headset, on the other hand, virtual objects are cut off at the edges of the display area without being occluded by anything else. They simply vanish into thin air. This never happens in reality, which is why our brains might not be equipped to deal with it. In short, object permanence doesn’t kick in because the brain fails to suspend disbelief when objects are clipped by an invisible boundary.
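Whether a virtual object is currently being cut off by the display edge, rather than fully visible or fully outside, can be computed per frame. Below is a minimal sketch using a bounding sphere and the four side planes of the viewing pyramid; it assumes eye coordinates with x right, y up, z forward, and the 30°×17.5° estimate (the names `Visibility` and `classify` are illustrative). Like standard frustum culling it is conservative: a sphere near a corner of the pyramid may be reported as clipped when it is actually just outside.

```python
import math
from enum import Enum


class Visibility(Enum):
    INSIDE = "fully inside the display area"
    CLIPPED = "cut off by a display edge"
    OUTSIDE = "fully outside the display area"


def classify(center: tuple[float, float, float], radius: float,
             h_fov_deg: float = 30.0, v_fov_deg: float = 17.5) -> Visibility:
    """Classify a bounding sphere (eye coordinates: x right, y up, z forward)."""
    x, y, z = center
    half_h = math.radians(h_fov_deg) / 2.0
    half_v = math.radians(v_fov_deg) / 2.0
    ch, sh = math.cos(half_h), math.sin(half_h)
    cv, sv = math.cos(half_v), math.sin(half_v)
    # Signed distances to the right, left, top, and bottom planes of the
    # viewing pyramid (unit normals pointing outward; positive = outside).
    distances = (x * ch - z * sh, -x * ch - z * sh,
                 y * cv - z * sv, -y * cv - z * sv)
    if any(d > radius for d in distances):
        return Visibility.OUTSIDE
    if all(d <= -radius for d in distances):
        return Visibility.INSIDE
    return Visibility.CLIPPED


print(classify((0.0, 0.0, 2.0), 0.1).value)   # small object straight ahead
print(classify((0.0, 0.0, 1.5), 0.9).value)   # large object close by
```

An application could, for example, shrink or reposition objects that come back as `CLIPPED`, in line with the UI constraints this hypothesis would imply.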

If this is really the case, it explains why I was having a hard time, and why I found the HoloLens’ narrow field of view so limiting without being able to immediately explain why. More importantly, this will have a big impact on user interface design. AR applications might have to be limited to small virtual objects that move around to follow the user’s viewing direction, or are so small that they quickly disappear entirely when hitting the edges of the field of view, instead of being cut in half by invisible edges. Most importantly, most of the applications Microsoft showed in their HoloLens demo reels might not work well. Which leads us to…

Were Microsoft’s Videos and Stage Demos Misleading?

It is very hard to sell completely new media to an unaware public. Virtual Reality has suffered from this for three decades, and AR is very similar in that respect. Look around the Internet for videos attempting to “explain” VR, and most don’t. Some may show a person wearing a headset, and nothing else. Some may show a captured view from a secondary monitor, including side-by-side barrel-distorted images. To someone who hasn’t already experienced VR, those seem to make no sense whatsoever. Witness the VR discussions on non-VR message boards, where most people’s takeaway about VR is “a monitor that you strap to your face.” And how could they understand what it’s really like?

Along the same lines, trying to explain VR in words often fails as well. I’m witnessing a lot of that on the Oculus subreddit, where users try to be helpful by answering repeated questions of “what is it like to use VR?” by explaining that VR is based on small stereo screens, but there are lenses to make them bigger, and there is head tracking so that the image changes when you move your head… Those of you who know VR: if you didn’t, would you understand what it’s like from technical descriptions like these? For example, according to this, the image in a VR headset changes as users move their heads. But in the real world, the image doesn’t change when you move your head; quite the opposite, the real world stays perfectly still! Then the next reply will be that yes, that’s true, and it’s the same in VR because the change in the image is computed so that the motion of the headset and the motion of the head exactly cancel out… anybody still following?

On a serious note, this is a problem. And Microsoft’s videos and on-stage demos nailed it. Instead of explaining AR at a technical level, they simply show what the augmented world would look like to the user, but from an outside perspective so that it makes sense to the audience. AR “embeds holograms into your world,” and those videos show exactly that. Incidentally, that’s how we ended up trying to communicate VR, with some success, but it’s even more applicable to AR where the real world provides useful anchoring for the virtual objects.

On the downside, while these videos give an immediately understandable impression of what AR is (just compare the agog reaction of the mainstream to HoloLens to the lukewarm reaction to VR), they don’t give an accurate representation of the technical limitations of current-generation AR, and how they affect UI design and application usability. In other words, they lead to unrealistic expectations which are usually followed by disappointment when experiencing the real thing, or, you know, hype.

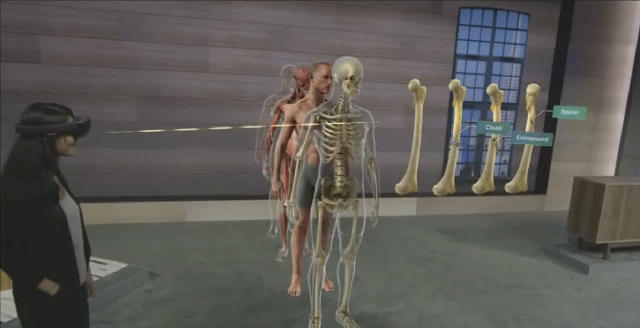

Figure 5: One example application Microsoft chose to highlight, a virtual anatomy lesson. Problem is, this wouldn’t work. See Figure 6 for what this would approximately look like in reality.

To be clear, I don’t blame Microsoft for creating these videos, and I am definitely not calling them liars, but they should have talked about, and shown, the limitations earlier to keep expectations grounded. What I am saying is: good job, Microsoft, at explaining AR to a mass audience at an intuitive level. My only real gripe is that Microsoft chose to highlight several applications that probably won’t work well with the current state of technology, to wit: watching movies on a virtual big screen, playing Minecraft on a living room table, and a “holographic” life-size anatomy atlas (see Figures 5 and 6).

Hi Oliver

Thanks for a very insightful article. You are right about the difficulties in achieving a large FoV for AR, and it is primarily a physical (optical) limitation. We recognized this (FoV) and other limitations, including the “vergence-accommodation” conflict of existing optical technologies, a decade ago and took a radically different approach to the optics. After 10 years of research and development, we have created an optical technology that is able to deliver a large FoV and natural see-through AR in 3D and at high resolution, without the “vergence-accommodation” conflict. We call this “lensless” technology Natural Eye Optics, as it replicates human vision and delivers a natural and safe viewing experience. Our soon-to-be-released first product has a 55°×32° FoV (with 100% overlap), and future iterations of the technology will deliver an even larger FoV. We would love to speak with you more about this and the work that you are doing in this space.

Do go on…

Edit: Forgive my terseness; I just arrived on Maui and had my Welcome Mai Tai (and Lei). What I meant to say was, please tell me more. I am curious about technologies to address the accommodation/vergence conflict right now, and my HoloLens experience and simulations have given me a new appreciation of the importance of field of view in AR.

Hi Oli, great article as per usual.

I’m curious as to why we haven’t seen AR HMDs with outside-in positional tracking yet, using either magnetic or optical tracking systems. I can see how this could be beneficial in indoor situations, where the HMD will only be used within a set space, or in situations where sub-millimeter accuracy is vital.

Also, I’ve read up on some studies measuring the accuracy of optical vs magnetic tracking systems and it seems like they are comparable. Have you seen any research comparing inside-out vs outside-in pose estimation? I assume some form of sensor fusion would be used to increase accuracy.

Unless the pose estimation is accurate to within a few millimeters, it seems certain applications, like medical for example, would not be possible.

I think we haven’t seen outside-in tracking because it appears to many people to defeat the purpose of AR, that you can walk around untethered and see virtual objects. But in principle it would not be a problem. You could use the same tracking system as Valve did in their original VR room, where you plaster an environment in QR codes and use a headset-mounted camera for tracking. So it’s inside-out tracking technically, but it requires an instrumented environment.

The issue with E/M tracking is global field warp. I have extensive experience with Polhemus Fastrak, Ascension Flock of Birds, and Razer Hydra. The first two are high-end but old EM tracking systems, and the problem is that they have high precision but low accuracy. Meaning, if you measure the same point in space twice in a row, you get the same results, but if you compare measurements from EM with measurements from an independent, say optical, tracking system, you get discrepancies of up to tens of centimeters. You can calibrate against such global field distortions, but it’s very cumbersome, and the distortion changes the moment someone brings any metal into the environment. I have a video where I (coincidentally) ran into E/M field warp, with a way to visualize it directly. Granted, that’s with a Razer Hydra, which is rather bad, but the same thing happens, to basically the same extent, with Polhemus and Ascension.

Addition: Optical tracking is a practical remedy, and can be done right now, albeit not as cheaply as it will be in a few months. A tracking system with global accuracy below 1mm over a 15′×15′×7′ tracking space, requiring only small retro-reflective markers on the AR or VR headset, costs about $8k. Here’s a video showing one, and the tracking quality. When putting some more intelligence into the tracked device, a system similar to Oculus’ Rift DK2 tracker can be done for around $100, I assume, but it requires some coding work. Vive’s Lighthouse tracker will also be a very good fit for indoor AR applications, just about as good as Oculus’, for probably the same effort.
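The distinction between precision and accuracy described above can be made concrete. A minimal sketch, assuming repeated readings of one fixed physical point from the tracker under test, and a ground-truth position from an independent reference such as an optical system: precision is the spread of the readings around their own mean, and the accuracy error is how far that mean lies from the truth. The function name and inputs are illustrative.

```python
import math
from statistics import fmean

Point = tuple[float, float, float]


def precision_and_accuracy(readings: list[Point], truth: Point) -> tuple[float, float]:
    """Summarize repeated tracker readings of one fixed physical point.

    Returns (precision, accuracy_error):
      precision      - RMS distance of the readings from their own mean
                       (small = repeatable, "high precision")
      accuracy_error - distance of the mean reading from the reference position
                       (small = "high accuracy")
    """
    mean_point = tuple(fmean(axis) for axis in zip(*readings))
    precision = math.sqrt(fmean(math.dist(r, mean_point) ** 2 for r in readings))
    accuracy_error = math.dist(mean_point, truth)
    return precision, accuracy_error
```

Under field warp as described above, a tracker would report a small `precision` value but a large `accuracy_error` at affected locations.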

Would the addition of IMUs allow for the distortions to be mapped and corrected on-the-fly, by comparing imaginary IMU values calculated from the detected magnetic tracking changes with the real values read by the physical IMU?

That’s what Sixense claim they’re doing with the STEM, but I’m skeptical. Correcting orientation distortion via IMU is a no-brainer, and they’re definitely doing that, but position distortion is another problem. It happens gradually, not abruptly, and I imagine it would be almost impossible to distinguish field warp from normal IMU drift.

Thanks Oliver. Given the high local accuracy, low latency of the magnetic tracking system and the high global accuracy but high(er) latency of the optical tracking system, couldn’t you use some form of sensor fusion to get the best of both worlds?

That’s true, but in my opinion the best combination is optical+inertial. You get the very high global accuracy and precision of optical (less than 1mm, in some cases around 0.1mm) and the very low latency (around 1ms) of inertial, plus inertial is able to tide the tracking solution over short drop-outs in optical tracking due to occlusion. This is how the Oculus Rift’s and HTC Vive’s positional tracking algorithms work.
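The optical+inertial idea can be illustrated in one dimension. This is a deliberately simplified sketch, not the actual Rift or Vive algorithm (those estimate a full 6-DOF pose with more elaborate filters): it dead-reckons position from accelerometer samples at a high rate and, whenever an optical fix arrives, nudges position and velocity toward it with alpha-beta-style gains. The gains, rates, and bias are made-up assumptions. Missing optical fixes, e.g., during occlusion, are simply skipped, and the inertial estimate carries on.

```python
def fuse_optical_inertial(accels: list[float], dt: float,
                          optical_fixes: dict[int, float],
                          alpha: float = 0.5, beta: float = 0.1) -> list[float]:
    """1D position estimates from IMU acceleration plus sparse optical fixes.

    accels:        accelerometer samples (m/s^2), one per time step of length dt
    optical_fixes: step index -> optically measured position (m); steps without
                   an entry (slower optical rate, occlusion) use inertial data only
    alpha, beta:   correction gains for position and velocity (illustrative values)
    """
    pos, vel = 0.0, 0.0
    last_fix_step = 0
    estimates = []
    for step, accel in enumerate(accels):
        # High-rate dead reckoning: low latency, but errors accumulate.
        vel += accel * dt
        pos += vel * dt
        fix = optical_fixes.get(step)
        if fix is not None:
            # Low-rate optical fix: pull position and velocity toward the
            # drift-free measurement (alpha-beta style correction).
            error = fix - pos
            elapsed = (step - last_fix_step) * dt or dt
            pos += alpha * error
            vel += beta * error / elapsed
            last_fix_step = step
        estimates.append(pos)
    return estimates


# Toy scenario (made-up numbers): the headset is at rest at 0 m, but the
# accelerometer has a constant 0.1 m/s^2 bias. IMU at 1 kHz, optical fix every 16 ms.
steps = 1000
biased = [0.1] * steps
fixes = {i: 0.0 for i in range(0, steps, 16)}
fused = fuse_optical_inertial(biased, 0.001, fixes)
inertial_only = fuse_optical_inertial(biased, 0.001, {})
print(f"after 1 s: inertial only {inertial_only[-1] * 1000:.1f} mm, "
      f"fused {fused[-1] * 1000:.2f} mm")
```

Passing an empty dict reproduces pure dead reckoning, whose error grows quadratically with time under a constant bias; the periodic optical fixes keep the error bounded, while the inertial path fills the gaps between them.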

Great article, Doc! Quick question: you mention that diamond would afford a large FoV because it has such a high refractive index. Is there a reason why a silicon waveguide can’t be used for this purpose? It seems to have an exceptionally high refractive index (3.0+) and seems much less expensive than diamond.

I don’t know for certain, but the in- and outcoupling holograms described in the articles I linked are volume holograms, and appear to be several millimeters thick. Silicon wafers for chip production are around 0.5mm thick, and thinking back to the last time I held one in my hand, I remember it being completely opaque.

Hi

I am wondering if you’ve tested your object permanence hypothesis in any formal studies, or found papers to support it?

Thanks,

Marie

No, it’s really just a hunch to explain a strange observation with a possibly-related known mechanism. I don’t even know how one would test this scientifically (it’s also way outside my area). But if you happen to find anything, or have any ideas, please let me know.
