“Can I make a full-field-of-view AR or VR display by directly shining lasers into my eyes?”

No.

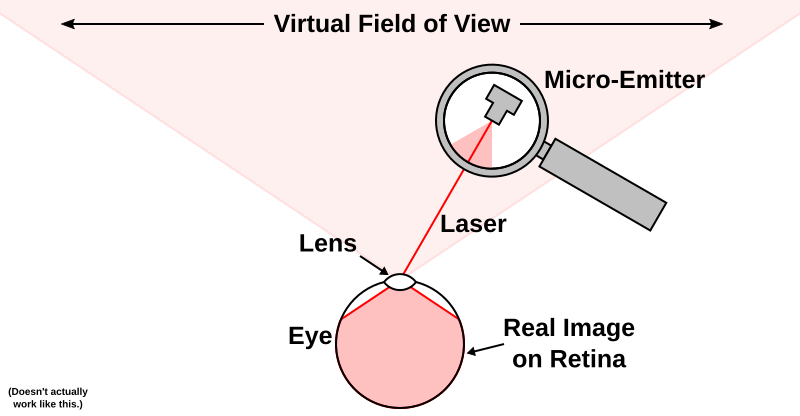

Well, technically, you can, but not in the way you probably imagine if you asked that question. What you can’t do is mount some tiny laser emitter somewhere out of view, have it shine a laser directly into your pupil, and expect to get a virtual image covering your entire field of view (see Figure 1). Light, and your eyes, don’t work that way.

Figure 1: A magical retinal display using a tiny laser emitter somewhere off to the side of each eye. This doesn’t work in reality. If a single beam of light entering the eye could be split up to illuminate large parts of the retina, real-world vision would not work.

Our eyes are meant to capture images of the real world, and to do that, they have to obey one fundamental rule:

Rule 1: All light that originates from a single point in 3D space and enters the eye has to end up in the same point on the retina.

If this rule isn’t followed, there will be no image on the retina. If this rule is even slightly violated, say by light from a point in space forming a tiny disk instead of a point, you get a blurry image. That’s exactly what happens when your eyes are not focused properly, or you are not wearing your prescription glasses.
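As a rough illustration of that blur, here is a minimal Python sketch. It assumes an idealized thin lens in air with a hypothetical 17 mm focal length and a retina at a fixed distance behind it. The real eye is a thick, multi-surface optical system filled with fluid, so the numbers are illustrative only. The sketch computes how large a disk one object point paints on the retina when the eye is not focused on it.

```python
import math


def image_distance(f_mm: float, object_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for d_i.

    Pass object_mm=math.inf for an object infinitely far away.
    """
    return 1.0 / (1.0 / f_mm - 1.0 / object_mm)


def blur_diameter(pupil_mm: float, f_mm: float, object_mm: float,
                  retina_mm: float) -> float:
    """Diameter of the disk that a single object point paints on the retina.

    The cone of light admitted by the pupil converges at d_i. If the retina
    sits at a different distance, it cuts that cone as a disk instead of a
    point (similar triangles). Zero blur means Rule 1 is satisfied.
    """
    d_i = image_distance(f_mm, object_mm)
    return pupil_mm * abs(retina_mm - d_i) / d_i


# Hypothetical relaxed eye: retina one focal length (17 mm) behind the lens.
print(blur_diameter(4.0, 17.0, math.inf, 17.0))  # distant point: (essentially) no blur
print(blur_diameter(4.0, 17.0, 250.0, 17.0))     # point at 25 cm: a disk about 0.27 mm wide
```

A distant point lands as a point, while a near point that the relaxed eye does not focus on smears into a disk a few tenths of a millimeter wide in this toy model.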

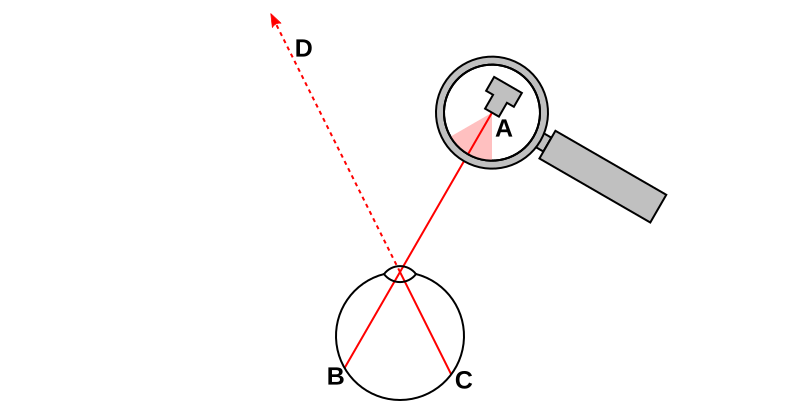

This means that if you have a tiny laser emitter somewhere in front of your face, and let it shoot directly into your eye, light from the laser will only end up in a single spot on your retina, and nowhere else. If you put a pinprick of light in front of your eyes, you see a pinprick of light, no matter whether that light is from a laser or not (see Figure 2).

Figure 2: Light entering the eye from a single point in space (A), or along a single line, ends up in a single point on the retina (B). In order to put light onto a different spot (C), that light must have originated somewhere along line D.
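A paraxial ray trace shows why direction, not entry position, decides where light lands. The minimal Python sketch below assumes an ideal thin lens with a hypothetical 17 mm focal length and a retina one focal length behind it (eye relaxed, focused at infinity). A laser beam is a bundle of parallel rays, that is, rays with the same slope entering at different heights in the pupil:

```python
def retina_position(entry_height_mm: float, ray_slope: float,
                    f_mm: float = 17.0) -> float:
    """Trace one paraxial ray through an ideal thin lens onto the retina.

    entry_height_mm: where the ray crosses the lens (anywhere in the pupil)
    ray_slope: direction of the ray before the lens (tangent of its angle)
    Returns the height at which the ray hits the retina.
    """
    # Refraction at an ideal thin lens: the slope changes by -h/f.
    slope_after = ray_slope - entry_height_mm / f_mm
    # Free propagation over one focal length to the retina.
    return entry_height_mm + f_mm * slope_after


# Five parallel rays (one "laser beam") entering at different pupil positions.
for h in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(h, retina_position(h, 0.1))  # always about 1.7 mm
```

Algebraically the entry height cancels (h + f·u − h = f·u), so every ray in the beam lands on the same retinal spot. Only a ray with a different direction, corresponding to line D in Figure 2, lands somewhere else.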

This fundamental rule of optics and vision leads to a corollary:

Rule 2: To create an image covering H°×V° of your field of view, you need to have a direct area light source, or at least one intermediate optical element (a screen, a mirror, a prism, a lens, a waveguide, etc.), covering at least those H°×V°.
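Rule 2 reduces to simple trigonometry along each axis. The minimal Python sketch below assumes a flat element centered on the line of sight and handles one axis (H or V) at a time. It gives the angle an element of a given width covers, and the minimum width needed to cover a given angle:

```python
import math


def covered_fov_deg(width_mm: float, distance_mm: float) -> float:
    """Angle subtended at the eye by a flat element of the given width,
    centered on the line of sight at the given distance."""
    return math.degrees(2.0 * math.atan(width_mm / (2.0 * distance_mm)))


def required_width_mm(fov_deg: float, distance_mm: float) -> float:
    """Minimum width an element at distance_mm must have to cover fov_deg."""
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0)


print(required_width_mm(90.0, 20.0))  # about 40 mm for 90 degrees at 20 mm
print(covered_fov_deg(1.0, 20.0))     # a 1 mm emitter at 20 mm: about 2.9 degrees
print(covered_fov_deg(1.0, 100.0))    # the same emitter at 100 mm: about 0.57 degrees
```

The distances are hypothetical. The point is that a light source about 1 mm across covers only a few degrees at most, no matter how wide a cone of light it emits.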

As an aside, that’s the same reason why real holographic images are not free-standing in the sense many people imagine. You can only see them inside the field of view covered by the holographic plate that creates them (I drew a nifty diagram of that a couple of years ago: compare and contrast Figures 1 and 4 in this old post about the Holovision kickstarter project).

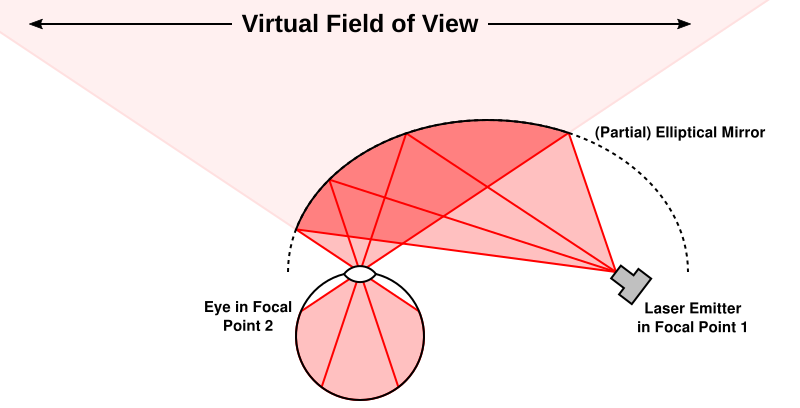

Given these two rules, how then can you use lasers (or other point light sources) to create virtual images? By following rule 2 and placing an optical element between the light source and your eye. For a simplified but to-scale diagram of such a setup see Figure 3. That system (including the mirror or prism or similar element) is called a virtual retinal display.

Figure 3: A real virtual retinal display, in this case using an elliptical mirror as FoV-expanding optical element. The scanning laser projector and the eye’s pupil are located at the opposing focal points of the ellipse; as a result, all light emitted from the projector, in any direction, ends up exactly in the pupil. (This simplified system does not allow for eye movement, and is not see-through.)
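The focal-point property of the elliptical mirror in Figure 3 can be checked numerically. The minimal Python sketch below works in a 2D cross-section with hypothetical semi-axes (a = 50 mm, b = 30 mm). It sends a ray from one focus to a point on the ellipse, reflects it about the surface normal, and tests whether the reflected ray heads toward the other focus:

```python
import math


def reflect_off_ellipse(a: float, b: float, theta: float):
    """Trace a ray from focus F1 = (-c, 0) to the ellipse point
    P = (a cos theta, b sin theta) and reflect it (requires a > b).
    Returns (P, reflected unit direction, F2)."""
    c = math.sqrt(a * a - b * b)  # distance of each focus from the center
    f1, f2 = (-c, 0.0), (c, 0.0)
    px, py = a * math.cos(theta), b * math.sin(theta)
    # Unit direction of the incoming ray F1 -> P.
    dx, dy = px - f1[0], py - f1[1]
    d_len = math.hypot(dx, dy)
    dx, dy = dx / d_len, dy / d_len
    # Surface normal: gradient of x^2/a^2 + y^2/b^2, normalized.
    nx, ny = px / (a * a), py / (b * b)
    n_len = math.hypot(nx, ny)
    nx, ny = nx / n_len, ny / n_len
    # Mirror reflection: r = d - 2 (d . n) n.
    dot = dx * nx + dy * ny
    rx, ry = dx - 2.0 * dot * nx, dy - 2.0 * dot * ny
    return (px, py), (rx, ry), f2


def hits_other_focus(a: float, b: float, theta: float, tol: float = 1e-9) -> bool:
    """True if the reflected ray points from P straight at the second focus."""
    (px, py), (rx, ry), (fx, fy) = reflect_off_ellipse(a, b, theta)
    tx, ty = fx - px, fy - py         # vector from P to F2
    cross = rx * ty - ry * tx         # zero if the two vectors are parallel
    same_way = rx * tx + ry * ty > 0  # and not pointing away from F2
    return abs(cross) < tol * math.hypot(tx, ty) and same_way


# Rays leaving the projector focus in many directions all reach the pupil focus.
print(all(hits_other_focus(50.0, 30.0, k * 0.17) for k in range(1, 36)))
```

This is the property the figure relies on: whichever direction the scanning projector aims, the reflected light passes through the pupil at the other focus. Like the figure, the sketch ignores eye rotation.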

There are many examples of real-world displays based on this principle of small image source and field-of-view-expanding optical element. All see-through AR headsets and some opaque (VR) headsets use it. Microsoft’s HoloLens, for example, is assumed to use an LCoS microdisplay and a holographic waveguide to inject virtual objects into the real world. CastAR uses retro-reflective mats. Microdisplay-based VR HMDs use complex lens systems.

No confirmed details are known about Magic Leap’s upcoming AR headset, but Magic Leap’s patents describe an oscillating optical fiber that can emit light from a very small spot (< 1mm) over a wide angle (claimed as 120°), and mention a large number of different waveguide technologies or free-form prisms to then bend the emitted light into the viewer’s eyes over some yet-to-be-determined field of view. I’ll say it again: the oscillating fiber projector by itself is not sufficient to create an image; you also need some intermediate optical element. (And, assuming that they in fact do have a fiber that can emit light throughout a 120° cone, that in no way means their display has a field of view of 120°. Those two aspects are entirely unrelated.)

“But wait,” you say, “what about those free-air volumetric displays, like the one in the video below? They are completely free-standing, and don’t need an optical element between the laser and the eye!”

I’m glad you asked. The loophole is that in the case of free-air displays, the laser is not the light source. The air itself is the light source, specifically an area light source as required by rule 2. The downside is that in order to turn air into a light source, you have to super-heat air molecules and convert them to plasma, which in turn emits light that can directly be observed by viewers.

If super-heating tiny pockets of air to serve as pixels sounds slightly too dangerous (or too loud) to employ in your living room or in a near-eye context, then that’s because it is.

“If super-heating tiny pockets of air to serve as pixels sounds slightly too dangerous…” –

Have you had a chance to see this latest development (femtosecond-laser plasma pixels)? https://youtu.be/ML79u9zPZu4?t=80

Yes, I have (not in person, though). It’s very interesting as a technical curiosity, and as a crazy “Science!” thing to do with high-powered lasers, but I’m not so sure about practical applications.

There are engineering limitations like the number of points that can be fired up simultaneously and the amount of heat and ozone produced, but also fundamental limitations like isotropy (points look the same from any direction, hence no surface effects), additivity (farther-away surfaces are not occluded by closer surfaces, hence severe depth conflicts when showing anything but 2D-ish flat objects), and monochromaticity (there’s no way to control the color of the light emitted by the plasma effect).

To use a 3D graphics analogy: the physics of the display technology only support unlit, single-color point primitives and no z buffer.
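To make the analogy concrete, here is a toy Python sketch contrasting the two rendering models. The point format (pre-projected pixel coordinates, a depth value, and one scalar intensity) is a hypothetical data model chosen for illustration, not any real display's interface. With additive blending and no depth test, a far point shows through a near one. With a z-buffer, the near point hides it:

```python
import math

# Each point: (px, py, depth, intensity), already projected to pixel coords.
# Hypothetical toy data model for illustration only.


def render_plasma_style(points, width, height):
    """Unlit, single-color points, additive blending, no depth test:
    every point adds its light, whatever lies in front of it."""
    img = [[0.0] * width for _ in range(height)]
    for px, py, _depth, intensity in points:
        img[py][px] += intensity
    return img


def render_with_zbuffer(points, width, height):
    """The same points, but the nearest one per pixel wins (opaque surfaces)."""
    img = [[0.0] * width for _ in range(height)]
    zbuf = [[math.inf] * width for _ in range(height)]
    for px, py, depth, intensity in points:
        if depth < zbuf[py][px]:
            zbuf[py][px] = depth
            img[py][px] = intensity
    return img


# A near point and a far point that project onto the same pixel.
pts = [(1, 1, 1.0, 1.0), (1, 1, 5.0, 0.5)]
print(render_plasma_style(pts, 3, 3)[1][1])  # 1.5: the far point shows through
print(render_with_zbuffer(pts, 3, 3)[1][1])  # 1.0: the near point occludes it
```

The additive result is what produces the depth conflicts mentioned above: nothing in the image can occlude anything else.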

Thank you for such a complete and interesting observation.

A pleasure to read.

Oliver,

I love your work, you are a very refreshing bright light in a very foggy sea of confusion in the AR & VR space. The investment community would do themselves (and their shareholders) a great service by including your work as part of their due diligence process. Please keep up the great work, I look forward to your next article.

Doug

Hm, so a perfectly collimated point source is never gonna look blurry, regardless of optics? (Assuming it’s an instant exposure, so no motion blur is involved.)

With current technology, can we shoot enough photons to be visible, one by one, aiming them all precisely enough that the spot doesn’t get noticeably fuzzy at any distance within the atmosphere?

If a perfectly collimated point light source were possible — which it is not, due to Heisenberg’s uncertainty principle, as we would know both the position and momentum of photons exiting the light source with infinite precision — then yes, it would always be in focus, no matter what optics would be involved.

Virtual retinal displays, for example, are diffraction limited. However, if I recall correctly, under normal conditions, the laser spot is still smaller than the distance between adjacent rods or cones, meaning that the display would appear perfectly sharp to the viewer.
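For a diffraction-limited beam, the size of the retinal spot can be estimated from the Airy disk, whose angular radius is about 1.22 λ/D for wavelength λ and beam diameter D at the pupil. The minimal Python sketch below uses hypothetical numbers and ignores the eye's own aberrations. Whether the resulting spot is smaller than the photoreceptor spacing depends on the beam diameter, the wavelength, and the retinal location, so it should be compared against a physiological reference value rather than taken as a verdict:

```python
import math


def airy_radius_arcmin(wavelength_m: float, beam_diameter_m: float) -> float:
    """Angular radius of the Airy disk (to the first dark ring),
    theta = 1.22 * lambda / D, converted from radians to arcminutes."""
    theta_rad = 1.22 * wavelength_m / beam_diameter_m
    return math.degrees(theta_rad) * 60.0


print(airy_radius_arcmin(550e-9, 3e-3))  # green light, 3 mm beam: about 0.77 arcmin
print(airy_radius_arcmin(550e-9, 1e-3))  # same light, 1 mm beam: about 2.3 arcmin
```

Note the inverse relationship: a narrower beam entering the pupil produces a larger diffraction spot on the retina.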

There are a handful of ‘laser projector’ displays that utilise exactly this idea (emit only collimated light) to project an image onto an arbitrarily shaped surface without the need for complex lenses or mirrors to keep the image in focus. Some people have even used these projectors to produce internally-lit spherical displays, e.g.: http://eclecti.cc/computergraphics/snow-globe-part-one-cheap-diy-spherical-projection
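Why these projectors appear "focus-free", and why no beam is perfectly collimated, can be sketched with the standard Gaussian-beam formulas. The minimal Python sketch below assumes an ideal TEM00 Gaussian beam with a hypothetical 0.5 mm waist radius at 532 nm. The beam stays narrow over roughly one Rayleigh range and then spreads slowly, but it always spreads:

```python
import math


def rayleigh_range_m(waist_radius_m: float, wavelength_m: float) -> float:
    """Distance from the waist over which an ideal Gaussian beam stays
    roughly collimated: z_R = pi * w0^2 / lambda."""
    return math.pi * waist_radius_m ** 2 / wavelength_m


def beam_radius_m(z_m: float, waist_radius_m: float, wavelength_m: float) -> float:
    """1/e^2 beam radius at distance z from the waist:
    w(z) = w0 * sqrt(1 + (z / z_R)^2)."""
    z_r = rayleigh_range_m(waist_radius_m, wavelength_m)
    return waist_radius_m * math.sqrt(1.0 + (z_m / z_r) ** 2)


w0, lam = 0.5e-3, 532e-9
print(rayleigh_range_m(w0, lam))      # about 1.48 m
print(beam_radius_m(3.0, w0, lam))    # about 1.13e-3 m at 3 m from the waist
```

Within a range like this, the spot size changes slowly enough that the image looks acceptably sharp on surfaces at different distances, which is what makes projection onto curved surfaces practical.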

What is the difference between a “direct area light source” and an oscillating optical fiber? I really don’t understand why an oscillating optical fiber couldn’t produce an image on the retina.

“assuming that they in fact do have a fiber that can emit light throughout a 120° cone, that in no way means their display has a field of view of 120°. Those two aspects are entirely unrelated.”

Why are they unrelated? It seems to me they would be the same.

“Rule 1: All light that originates from a single point in 3D space and enters the eye has to end up in the same point on the retina.”

Does that apply even if the point is an oscillating fiber? Wouldn’t the light from the fiber enter the eye at different points as it oscillates?

You got it. The only thing you missed is that the spatial area over which the fiber tip oscillates is much less than one millimeter in diameter. It is an area light source, just a really, really tiny one. If you put it in front of your eye, you see a spot of light less than 1mm across (or much less than 1° across, depending on distance).

Ah, so basically the optics would be for making that 1° into 45° or 120° or whatever will be the FOV?

Exactly. And that’s why the emission angle (which is measured from the light source to the eye) and the field-of-view angle (which is measured from the eye to the light source / optical element) are unrelated.
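A toy collimator model makes the distinction concrete. The minimal Python sketch below assumes a single ideal lens with the light source in its focal plane; it is not a description of Magic Leap's actual optics, which the post notes are unknown. Each point of the source at offset x becomes a beam at angle atan(x/f), so the field of view depends only on source size and focal length. The emission angle only determines how much of the lens gets illuminated:

```python
import math


def fov_deg(source_size_mm: float, focal_length_mm: float) -> float:
    """Field of view produced by a source of the given size placed in the
    focal plane of an ideal collimating lens. The emission angle does not
    appear anywhere in this formula."""
    return math.degrees(2.0 * math.atan(source_size_mm / (2.0 * focal_length_mm)))


def illuminated_aperture_mm(emission_deg: float, focal_length_mm: float) -> float:
    """Width of the lens covered by the emission cone. This sets how large
    the optic has to be, not how wide the field of view is."""
    return 2.0 * focal_length_mm * math.tan(math.radians(emission_deg) / 2.0)


# Hypothetical 1 mm source behind a 5 mm focal-length lens.
print(fov_deg(1.0, 5.0))                    # about 11.4 degrees
print(illuminated_aperture_mm(120.0, 5.0))  # a 120 degree cone covers about 17.3 mm of lens
```

Widening the emission cone enlarges the illuminated aperture but leaves the field of view unchanged. In this model, only a larger source or a shorter focal length would widen the field of view.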

What if the optical fiber were to emit light directly into the surface of the cornea via a rigid coupling such as the one presented here? http://youtu.be/BZjtufbRZwA

If they can get the form factor small enough, we might be able to make specialty VR contact lenses: Eyebuds!

Yes, that could in principle work. Ideally, you’d remove any optical interfaces between the fiber and the cornea/lens to be able to better control where the image ends up on the retina. A drop of water, mixed with something to approximate the index of refraction of the cornea, sitting directly on the cornea, with the fiber embedded into it. Keeping all of that in place under eye movement might be a bit of a challenge, unless you go with the nerf dart model.

So a special contact lens could work in conjunction with a laser?

In theory, sure.

Just to make sure I’m understanding correctly… are simple projectors (like those that would play a DVD) considered a virtual retinal display since they are projecting onto a medium which is then reflecting the light into your eye?

Followup as well:

What’s the difference between a standard smartphone display and a microdisplay? Why can we make microdisplays with very high pixel density, but apparently can’t scale them up to the size of a smartphone display, so that we could have super-high-resolution displays for VR headsets?

I don’t know for certain, but I assume it’s that microdisplays and panel displays are based on different manufacturing processes. I think I’ve read that microdisplays are produced just like computer chips, meaning via lithography on monocrystalline silicon wafers. Scaling those up causes exponentially increasing costs.
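If microdisplays are indeed made like chips, a textbook Poisson yield model shows where "exponentially increasing costs" would come from. In this model, the fraction of defect-free dies is exp(−A·D₀) for die area A and defect density D₀. The minimal Python sketch below uses a hypothetical defect density of 0.1 per cm² and ignores other practical limits on die size:

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero killer defects (simple Poisson model)."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


def relative_cost_per_good_die(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Cost roughly proportional to die area, divided by the fraction that works."""
    return die_area_cm2 / poisson_yield(die_area_cm2, defects_per_cm2)


d0 = 0.1  # hypothetical defect density, defects per cm^2
print(poisson_yield(1.0, d0))   # ~1 cm^2 microdisplay: about 90% good dies
print(poisson_yield(80.0, d0))  # ~80 cm^2 phone-sized die: about 0.03% good dies
print(relative_cost_per_good_die(80.0, d0) / relative_cost_per_good_die(1.0, d0))
```

Area alone accounts for an 80× cost increase. The yield term multiplies that by a factor that grows exponentially with area.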

No. Regular projectors, just like regular monitors, form real images (in the optical sense). On a monitor, the screen itself is the real image; in a projector, the real image is created by focusing light onto the screen surface.

In a Rift/Vive-style HMD, the screen is again the real image, but the user only sees a virtual image created by the lenses, see Head-mounted Display and Lenses.

In a virtual retinal display, the only real image is formed on the viewer’s retina.
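The thin-lens equation shows the real/virtual distinction through the sign of the image distance. The minimal Python sketch below uses hypothetical focal lengths and distances, not the specifications of any actual projector or headset. A slide just outside the lens's focal length gives a real image far behind the lens (projector). A screen just inside the focal length gives a negative image distance: a virtual image on the same side as the screen (HMD lens):

```python
def image_distance_mm(f_mm: float, object_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for d_i.

    Positive d_i: real image on the far side of the lens.
    Negative d_i: virtual image on the same side as the object.
    An object exactly at the focal length (object_mm == f_mm) has no
    finite image and raises ZeroDivisionError.
    """
    return 1.0 / (1.0 / f_mm - 1.0 / object_mm)


def image_kind(f_mm: float, object_mm: float) -> str:
    """Classify the image formed by an ideal thin lens as real or virtual."""
    return "real" if image_distance_mm(f_mm, object_mm) > 0 else "virtual"


# Projector-like: slide at 105 mm, lens f = 100 mm -> real image about 2.1 m away.
print(image_kind(100.0, 105.0), image_distance_mm(100.0, 105.0))
# HMD-like: screen at 38 mm, lens f = 40 mm -> virtual image about 0.76 m away.
print(image_kind(40.0, 38.0), image_distance_mm(40.0, 38.0))
```

A virtual retinal display has no such intermediate image at all. The only place where light from each scene point converges is the retina itself.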