“Virtual Worlds Using Head-mounted Displays” is the most complex video I’ve made so far, and I figured I should explain how it was done (maybe as a response to people who might say I “cheated”).

Tag Archives: Kinect

How Milo met CAVE

I just read an interesting article, a behind-the-scenes look at the infamous “Milo” demo Peter Molyneux did at 2009’s E3 to introduce Project Natal, i.e., Kinect.

This article is related to VR in two ways. First, the usual progression of overhyping the capabilities of some new technology and then falling flat on one’s face because not even one’s own developers know what the new technology’s capabilities actually are is something that should be very familiar to anyone working in the VR field.

But here’s the quote that really got my interest (emphasis is mine):

Others recall worrying about the presentation not being live, and thinking people might assume it was fake. Milo worked well, they say, but filming someone playing produced an optical illusion where it looked like Milo was staring at the audience rather than the player. So for the presentation, the team hired an actress to record a version of the sequence that would look normal on camera, then had her pretend to play along with the recording. … “We brought [Claire] in fairly late, probably in the last two or three weeks before E3, because we couldn’t get it to [look right]” says a Milo team member. “And we said, ‘We can’t do this. We’re gonna have to make a video.’ So she acted to a video. “Was that obvious to you?” Following Molyneux’s presentation, fans picked apart the video, noting that it looked fake in certain places.

Gee, sounds familiar? This is, of course, the exact problem posed by filming a holographic display, and a person inside interacting with it. In a holographic display, the images on the screens are generated for the precise point of view of the person using it, not the camera. This means it looks wrong when filmed straight up. If, on the other hand, it’s filmed so it looks right on camera, then the person inside will have a very hard time using it properly. Catch 22.
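To put numbers on that, here is a minimal sketch of the geometry, not code from any actual VR toolkit. It assumes a single flat screen lying in the plane z = 0, positions measured in centimeters, and made-up positions for the user, the camera, and a virtual object; the function name `project_to_screen` is hypothetical.

```python
def project_to_screen(eye, point):
    """Find where a virtual point must be drawn on the screen plane z = 0
    so that it appears in the right place when seen from `eye`."""
    ex, ey, ez = eye
    px, py, pz = point
    if ez == pz:
        raise ValueError("line of sight is parallel to the screen")
    # Parameter along the ray from eye to point at which z reaches 0.
    t = ez / (ez - pz)
    return (ex + t * (px - ex), ey + t * (py - ey))


# Assumed layout: a virtual object 100 cm behind the screen, the user
# 200 cm in front of the screen center, the camera 150 cm to the user's right.
virtual_point = (0.0, 0.0, -100.0)
user_eye = (0.0, 0.0, 200.0)
camera_eye = (150.0, 0.0, 200.0)

drawn_for_user = project_to_screen(user_eye, virtual_point)
drawn_for_camera = project_to_screen(camera_eye, virtual_point)
print(drawn_for_user, drawn_for_camera)
```

With these assumed positions, the object is drawn at the screen center for the user, but would have to be drawn about 50 cm to the side to look right from the camera. A single image cannot do both at once, which is the catch described above.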

With the “Milo” demo, the problem was similar. Because the game was set up to interact with whoever was watching it, it ended up interacting with the camera, so to speak, instead of with the player. Now, if the Milo software had been set up with the level of flexibility of proper VR software, it would have been an easy fix to adapt the character’s gaze direction etc. to a filming setting, but since game software in the past never had to deal with this kind of non-rigid environment, it typically ends up fully vertically integrated, and making this tiny change would probably have taken months of work (that’s kind of what I meant when I said “not even one’s own developers know what the new technology’s capabilities actually are” above). Am I saying that Milo failed because of the demo video? No. But I don’t think it helped, either.

The take-home message here is that mainstream games are slowly converging towards approaches that have been embodied in proper VR software for a long time now, without really noticing it, and are repeating old mistakes. The Oculus Rift will really bring that out front and center. And I am really hoping it won’t fall flat on its face simply because software developers didn’t do their homework.

Intel’s “perceptual computing” initiative

I went to the Sacramento Hacker Lab last night, to see a presentation by Intel about their soon-to-be-released “perceptual computing” hardware and software. Basically, this is Intel’s answer to the Kinect: a combined color and depth camera with noise- and echo-cancelling microphones, and an integrated SDK giving access to derived head tracking, finger tracking, and voice recording data.

AR Sandbox news

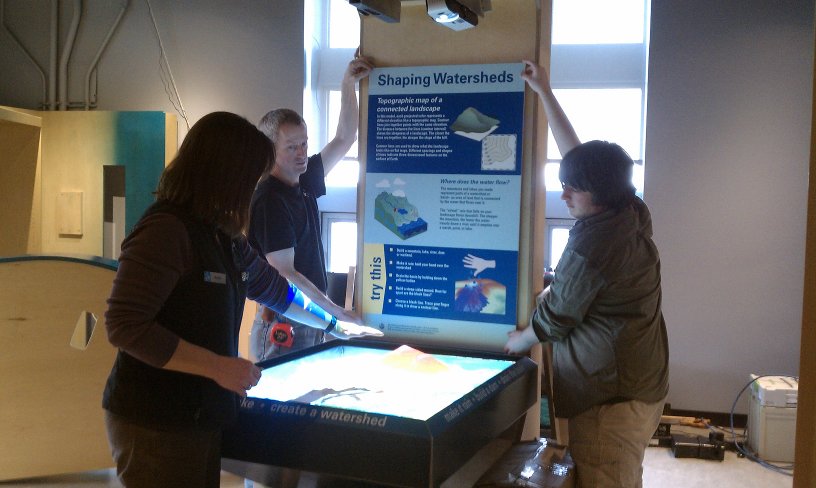

The first “professionally built” AR sandbox, whose physical setup was designed and built by the fine folks from the San Francisco Exploratorium, arrived at its new home at ECHO Lake Aquarium and Science Center.

Figure 1: Picture of ECHO Lake Aquarium and Science Center’s Augmented Reality Sandbox during installation on the exhibit floor. Note the portrait orientation of the sand table with respect to the back panel, the projector tilt to make up for it, and the high placement of the Kinect camera (visible at the very top of the picture). Photo provided by Travis Cook, ECHO.

… and they did!

… build their own augmented reality sandboxes, that is.

We still haven’t installed the three follow-up AR sandboxes at the participating institutions of our informal science education NSF project — Tahoe Environmental Research Center, Lawrence Hall of Science, and ECHO Lake Aquarium and Science Center — but others have picked up the slack and gone ahead and built their own, based on our software and designs.

Figure 1: Augmented reality sandbox constructed by “Code Red,” Ithaca High School’s FIRST Robotics Team 639, and shown here at the school’s open house on 02/02/2013.

The newest addition to my External Installations page is “Code Red,” Ithaca High School’s FIRST Robotics Team 639, who just unveiled theirs at their school’s open house (see Figure 1), and were kind enough to send a note and some pictures, with many more “behind the scenes” pictures on their sandbox project page. There’s an article in the local newspaper with more information as well.

Together with Bold Park Community School’s, this is the second unveiled AR sandbox that I’m aware of. That doesn’t sound like much, but the software hasn’t been out for that long, and there are a few others that I know are currently in the works. And who knows how many are being built or are already completed that I’m totally unaware of; after all, this is free software. Team 639’s achievement, for one, came completely out of the blue.

Update: And I missed this Czech project (no, not that other Czech project that gave us the idea in the first place!). They built several versions of the sandbox and showed them off at hacker meets. And they say they’re currently trying to port the software to lower-power computers. Good on them!

Update 2: One more I missed, this time done by/for the Undergraduate Library at the University of Illinois, Urbana-Champaign. I don’t have any more information, but this is the YouTube video.

I should point out that these last two were news to me; I only found out about them after googling for “AR sandbox.”

So please, if you did build one and don’t mind, send me a note. 🙂 There’s a ready-made box awaiting your input right there ↓↓↓↓

Kinect factory calibration

Boy, is my face red. I just uploaded two videos about intrinsic Kinect calibration to YouTube, and wrote two blog posts about intrinsic and extrinsic calibration, respectively, and now I find out that the factory calibration data I’ve always suspected was stored in the Kinect’s non-volatile RAM has actually been reverse-engineered. With the official Microsoft SDK out, that should definitely not have been a surprise. Oh, well, my excuse is that I’ve been focusing on other things lately.

So, how good is it? A bit too early to tell, because some bits and pieces are still not understood, but here’s what I know already. As I mentioned in the post on intrinsic calibration, there are several required pieces of calibration data:

- 2D lens distortion correction for the color camera.

- 2D lens distortion correction for the virtual depth camera.

- Non-linear depth correction (needed because of IR camera lens distortion) for the virtual depth camera.

- Conversion formula from (depth-corrected) raw disparity values (what’s in the Kinect’s depth frames) to camera-space Z values.

- Unprojection matrix for the virtual depth camera, to map depth pixels out into camera-aligned 3D space.

- Projection matrix to map lens-corrected color pixels onto the unprojected depth image.
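As a rough illustration of how the disparity conversion and the unprojection step fit together, here is a minimal sketch. It is not the Kinect's factory calibration format and not the code from my Kinect package. It assumes a reciprocal disparity model z = 1 / (a·d + b), a plain pinhole camera without lens distortion, and a camera that looks along the negative Z axis; every coefficient below is a made-up placeholder, and the function names are hypothetical.

```python
# Placeholder coefficients for illustration only, NOT real calibration data.
A = -0.00003          # disparity scale, in 1/cm per disparity unit (assumed)
B = 0.035             # disparity offset, in 1/cm (assumed)
FX = FY = 580.0       # focal lengths in pixels (assumed)
CX, CY = 320.0, 240.0 # principal point in pixels (assumed)


def disparity_to_z(disparity, a, b):
    """Convert a depth-corrected raw disparity value to a distance in cm,
    assuming the reciprocal model z = 1 / (a * d + b)."""
    denom = a * disparity + b
    if denom <= 0.0:
        raise ValueError("disparity outside the valid range of this model")
    return 1.0 / denom


def unproject(u, v, z, fx, fy, cx, cy):
    """Map depth pixel (u, v) at distance z into camera-aligned 3D space,
    assuming an ideal pinhole camera looking along negative Z."""
    x = (u - cx) * z / fx
    y = (cy - v) * z / fy  # image rows grow downward, camera Y points up
    return (x, y, -z)


z = disparity_to_z(500, A, B)
point = unproject(378, 240, z, FX, FY, CX, CY)
print(z, point)
```

A real pipeline would apply the two lens distortion corrections and the non-linear depth correction before these steps, and would then use the color projection to texture the resulting 3D points; all of that is left out here.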

Multi-Kinect camera calibration

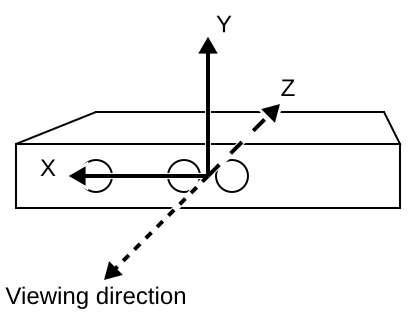

Intrinsic camera calibration, as I explained in a previous post, calculates the projection parameters of a single Kinect camera. This is sufficient to reconstruct color-mapped 3D geometry in a precise physical coordinate system from a single Kinect device. Specifically, after intrinsic calibration, the Kinect reconstructs geometry in camera-fixed Cartesian space. This means that, looking along the Kinect’s viewing direction, the X axis points to the right, the Y axis points up, and the negative Z axis points along the viewing direction (see Figure 1). The measurement unit for this coordinate system is centimeters.
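To show why this camera-fixed convention matters once several Kinects are involved, here is a minimal sketch of mapping points from two cameras into one shared space. It assumes each camera's pose is already known as a rotation plus a translation in centimeters; the two poses below are invented for illustration, and `rotation_about_y` and `camera_to_world` are hypothetical helper names, not functions from the Kinect package.

```python
import math


def rotation_about_y(angle_deg):
    """3x3 rotation matrix for a rotation about the Y (up) axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def camera_to_world(point, rotation, translation):
    """Apply a rigid transformation to a camera-space point (in cm)."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Assumed setup: camera A sits at the world origin looking along -Z;
# camera B sits 300 cm in front of it, turned around to face camera A.
pose_a = (rotation_about_y(0.0), (0.0, 0.0, 0.0))
pose_b = (rotation_about_y(180.0), (0.0, 0.0, -300.0))

# The same physical point: 100 cm in front of A, hence 200 cm in front of B.
seen_by_a = (0.0, 0.0, -100.0)
seen_by_b = (0.0, 0.0, -200.0)

world_from_a = camera_to_world(seen_by_a, *pose_a)
world_from_b = camera_to_world(seen_by_b, *pose_b)
print(world_from_a, world_from_b)
```

Both cameras report a negative Z value for the point because it lies in front of each of them, and after applying the assumed poses both measurements land on the same world position up to floating-point rounding.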

Kinect camera calibration

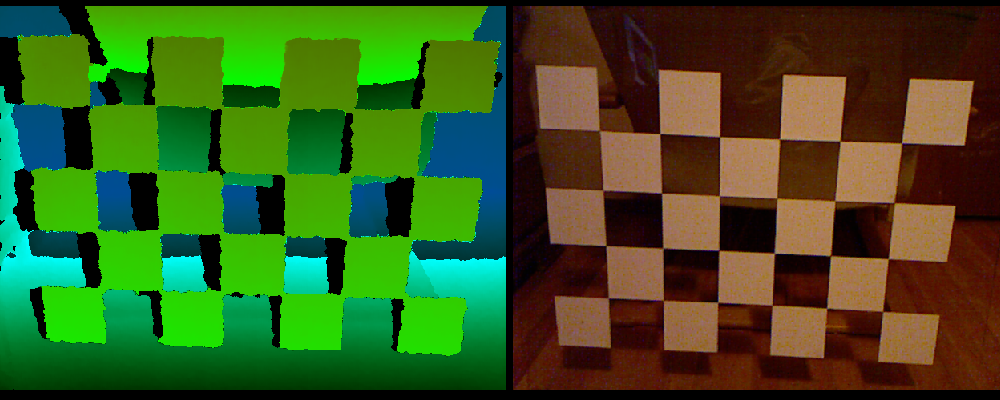

I finally managed to upload a pair of tutorial videos showing how to use the new grid-based intrinsic calibration procedure for the Kinect camera. The procedure made it into the Kinect package at least 1.5 years ago, but somehow I never found the time to explain it properly. Oh well. Here are the videos: Intrinsic Kinect Camera Calibration with Semi-transparent Grid and Intrinsic Kinect Camera Calibration Check.

Whither Leap Motion?

Leap Motion’s Leap, an optical tracking system that lets people use their hands directly to interact with computers in three dimensions, has been the talk of the town recently. So what’s my take on it, and particularly on its use for immersive graphics?

Cool story, bro. Two months ago, a group of researchers from UC Davis and I visited the company in their San Francisco offices to see the device for ourselves. Several of Leap Motion’s engineers had seen our booth at the recent Bay Area Maker Faire, and invited us to bring one of our low-cost semi-immersive displays (a 3D TV with a Razer Hydra 6-DOF input device) and show our stuff. We obliged, packed our things, and down along I-80 to SF we went. We showed them ours, they showed us theirs, and fun was had by all.

So what’s the intelligence gathered from this visit? There’s good news, and there’s bad news. The good news is the hardware. Leap Motion have been touting the Leap as a much more precise alternative to the Kinect, and they have that absolutely right. The precision, resolution, and responsiveness of the device are exactly what they claim. Interestingly, I did not glean that insight from the actual software demos they were showing, but from a very simple utility that just showed the raw 3D point cloud of everything that entered the device’s capture space, and identified hands, fingers, and other gadgets such as pencils accurately and in real time. Having done extensive work with the Kinect, I can say that it’s an entirely different kind of tracking, altogether.

So what’s the bad news? Well, as usual, it’s the software and application side. Leap Motion’s company line is that the Leap will make mouse and keyboard obsolete. Not so fast there, buckaroo. Probably 99.99% of computer interactions done by normal people are two-dimensional in nature, and the mouse/keyboard are really good at those. You would not want to use a free-space 3D interface for intrinsically 2D interactions, which is, incidentally, my only gripe with the famous Minority Report interface (but that’s a topic for another post). The end result from doing that already has a fitting name: “Gorilla Arm.” I think I can speak to that because that’s exactly what happens when you’re doing 2D tasks (like using a web browser or filling in a spreadsheet) in an immersive display environment. Trust me, it’s not something you want to do if you can avoid it.

On the other hand, if you’re one of the minority of people who use their computers for 3D tasks, e.g., 3D modeling, sculpting, or, naturally, immersive 3D graphics, it’s an entirely different story. For such applications in the desktop realm, the Leap is a godsend. Instead of having to do the mental gymnastics of using a 2D input device to perform 3D interactions, you just interact directly with the 3D data. This is, again, exactly what’s happening in immersive graphics, and yes, it’s something you definitely do want to do.

So that’s good news, right? Well, yeah, but… The problem here is, and it’s a big problem, that in order to pipe 3D interactions captured by a device like the Leap into a 3D application, you have to punch through the existing 2D-based user interface of that application. The approach that companies developing novel 3D input devices (think all the data gloves, 3D mice, etc. that have come out and failed over the years) have taken so far is to provide some form of mouse emulation, so that their devices can be used immediately with existing software. This does not work, ever. In this setup, 3D interactions performed with the device are first boiled down to 2D by the device’s driver, fed into the application, and then turned back into 3D interactions using whatever interface paradigm the application is using. The first step, going from 3D to 2D, is already awkward, and the second step is typically optimized for particular 2D devices, such as mice, which a “simulated” mouse device is most decidedly not. In other words, there are two levels of ill-fitting interface paradigms stacked on top of each other.

So what needs to be done? The answer is quite simple: if you want to effectively use the Leap with a piece of 3D software, that software has to explicitly support the Leap, and needs to use appropriate direct 3D interaction metaphors. Meaning the application developers have to buy into the Leap, dream up good problem-specific 3D interaction metaphors, do studies or experiments to fine-tune them, and then include them in their software. That takes a lot of time and money, and they won’t do it unless there is high demand, i.e., the Leap is already a widely-used device. But it won’t become a widely-used device unless a lot of widely-used 3D software already supports it in an effective way.

So it’s a classical chicken-and-egg problem. Unless you happen to use a certain VR development toolkit that is based around exactly this idea: providing device-optimized 3D interaction metaphors outside of an application’s purview, so that hardware developers can integrate their devices into existing applications without having to change those applications in any way, or even getting to their source code. But I digress…

Back on topic, what Leap Motion need to do is find at least one “killer application,” and do their utmost to get that application just exactly right. And then they have to bundle that application with every device sold. If the people buying their device are stuck with playing Fruit Ninja, or navigating with Google Earth (another thing a mouse is really good at, because Google successfully boiled down the interaction to 2D, and Leap’s Google Earth plug-in doesn’t add any new functionality) or have to use the device to write emails, they won’t recommend it to their friends.

By the way: will the Leap work out of the box for 3D video games? Hard to say, but I’m skeptical. They show a “finger gun” control scheme for first-person shooters (again implemented via mouse emulation), but doing that for more than a few minutes will lead to a very sore shoulder. Not that it’s a bad idea in itself (see below for a video showing exactly that interface in a CAVE), but unless the Leap is integrated into a fully calibrated desktop system, it won’t allow a player to actually aim with the “finger gun”; it will be just an equally indirect replacement for moving the mouse left-to-right.

On their web site, Leap Motion mention CAD and clay modeling as applications that inspired them to develop it. Could these be killer applications? Time will tell, but it’s at least a good starting point. So, go ahead and do it! I happen to have a 3D virtual clay modeling application with direct 3D interaction metaphors lying around, just saying…

Now, to restate my overall point after all this skepticism. From what I’ve personally seen, the Leap is an awesome device. I will definitely buy at least one when it comes out. That’s because all the software I’m developing and using on a daily basis is already poised to work with it, due to its input abstraction paradigm. Give me a low-level driver, and the rest is gravy — please, give me a low-level driver! But will the device succeed in the mainstream market, given the issues discussed here? Will it sell hundreds of millions of units, as they hope? For that to happen, I think, they’ll have to do significantly more than what they showed us. Maybe that’s why they pushed back the release date by half a year — here’s hoping.