Why does everything in my VR headset look so pixelated? It’s supposed to be using a 2160×1200 screen, but my 1080p desktop monitor looks so much sharper!

This is yet another fundamental question about VR that pops up over and over again, and like the others I have addressed previously, it leads to interesting deeper observations. So, why do current-generation head-mounted displays appear so low-resolution?

Here’s the short answer: In VR headsets, the screen is blown up to cover a much larger area of the user’s field of vision than in desktop settings. What counts is not the total number of pixels, and especially not the display’s resolution in pixels per inch, but the resolution of the projected virtual image in pixels per degree, as measured from the viewer’s eyes. A 20″ desktop screen, when viewed from a typical distance of 30″, covers 37° of the viewer’s field of vision, diagonally. The screen (or screens) inside a modern VR headset cover a much larger area. For example, I measured the per-eye field of view of the HTC Vive as around 110°x113° under ideal conditions, or around 130° diagonally (it’s complicated), or three and a half times as much as that of the 20″ desktop monitor. Because a smaller number of pixels (1080×1200 per eye) is spread out over a much larger area, each pixel appears much bigger to the viewer.
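
The 37° figure follows from basic trigonometry. Here is a minimal sketch of that arithmetic, assuming a flat screen viewed head-on from its center (the function name is only for illustration):

```python
import math

def angular_size_deg(extent, distance):
    """Angle subtended by a flat object of the given extent,
    viewed head-on from a point in front of its center."""
    return math.degrees(2.0 * math.atan(0.5 * extent / distance))

# 20" diagonal desktop screen viewed from 30" away.
desktop_diag = angular_size_deg(20.0, 30.0)

# Approximate diagonal field of view of the HTC Vive, as quoted in the text.
vive_diag = 130.0

print(f"desktop diagonal: {desktop_diag:.1f} degrees")
print(f"Vive / desktop ratio: {vive_diag / desktop_diag:.2f}")
```

The ratio comes out at roughly three and a half, matching the comparison above.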

Now for the long answer.

Well, actually, the short answer is fine. Current VR headsets look low-resolution because they are low-resolution, in terms of pixels per degree. What’s interesting is a related question: how can one measure the effective resolution of VR headsets, so that one could evaluate different trade-offs between price, resolution, field of view, comfort, image quality, etc., or compare different headsets? Or, so that an application developer could decide at what size important virtual objects should appear so that they can reliably be detected by users?

As I already pointed out, using the screens’ (easily measured) resolution in pixels per inch (ppi) is meaningless, because the screen is not viewed directly, but magnified by one or more lenses. For example, if a microdisplay with an insanely high ppi number is blown up to fill HxV degrees of field of vision, and a full-size panel with a moderate ppi number but the same total number of pixels is magnified to the same HxV degrees, their apparent resolutions will be more or less the same.

Pixels per degree (ppd) is a better measure, because it takes lens magnification into account, and is also compatible with how the resolution of our eyes is measured. But the problem is how to measure a headset’s ppd. It’s not as simple as dividing the total number of pixels by the total field of view. Due to the screens being flat, and due to distortion from the magnifying lenses, the ppd number changes from the center of the screen towards its edges, and not in straightforward ways. Take a look at the pictures I took in the article I already linked above. The green lines are straight horizontal and vertical lines on the headset’s screen, equal numbers of pixels apart, and the purple circles show angle to the center of the observing camera, in increments of 5°. Notice how the relationship between green lines and purple circles changes.
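
To see how much the flat-screen effect alone matters, here is a small sketch for a hypothetical bare flat screen with 1080 pixels spread over a 100° field of view. It deliberately ignores lenses, so it does not describe any real headset; the function name and the numbers are assumptions chosen for illustration.

```python
import math

def flat_screen_ppd(pixels, fov_deg, angle_deg):
    """Local pixels per degree at a given angle off the screen center,
    for a flat screen viewed head-on with no lens in between."""
    half_fov = math.radians(fov_deg / 2.0)
    # Viewing distance, expressed in units of one pixel width.
    distance_px = (pixels / 2.0) / math.tan(half_fov)
    theta = math.radians(angle_deg)
    # Position on screen is x = d * tan(theta), so dx/dtheta = d / cos^2(theta),
    # which is the local density in pixels per radian.
    px_per_rad = distance_px / math.cos(theta) ** 2
    return px_per_rad * math.pi / 180.0

naive = 1080 / 100.0                      # total pixels / total field of view
center = flat_screen_ppd(1080, 100.0, 0.0)
edge = flat_screen_ppd(1080, 100.0, 50.0)
print(f"naive {naive:.1f}, center {center:.1f}, edge {edge:.1f} ppd")
```

In this toy setup the naive average is 10.8 ppd, while the center only gets about 7.9 ppd and the edge about 19.1 ppd. Real lenses change this distribution again, which is why the measured pictures are needed.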

To improve the measure, one could expend more effort and measure the angular resolution at the center of the screen and use that as the single quantifier, but even that doesn’t tell the whole story. It ignores aliasing, the effects of super- and/or multi-sampling, reconstruction filter quality during the lens distortion correction warping step, remaining uncorrected chromatic aberration, glare, Fresnel lens artifacts, how many of the screen’s pixels actually end up visible to the user, and an increase in perceived resolution due to tiny involuntary head movements (“temporal super-sampling,” more on that later).

What I propose, instead, is to measure a headset’s effective resolution by the visual acuity it provides, or the size of the smallest details that can reliably be resolved by a normal-sighted viewer. After all, that’s what really counts in the end. Users want to know how well they can see small or far-away objects, read gauges in virtual cockpits, or read text. Other resolution measures are just steps on the way of getting there, so why not directly measure the end result?

Visual Acuity Measurement Procedure

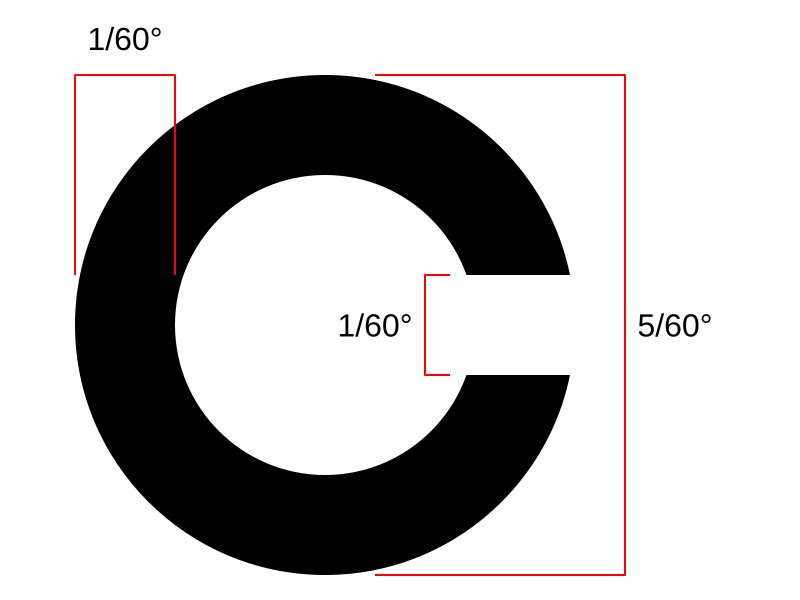

It’s a good idea on paper, but the tricky bit is that it tries to measure not what a display objectively displays, but what a viewer subjectively sees. As you can’t just shove a ruler inside someone’s eyeball, measurement will have to rely on user feedback. Fortunately, there’s an established procedure for that: the standard vision test chart (“Snellen chart”) used in optometric eye exams. The chart is set up at a fixed distance from the viewer (6m in metric countries, 20′ elsewhere), and it displays glyphs or so-called optotypes of decreasing sizes, calculated to subtend specific angles of the viewer’s field of vision. Most prominently, the central line in a Snellen chart features optotypes exactly five minutes of arc (1 minute of arc = 1/60°) in diameter, with important features such as character stems exactly one minute of arc across, defining (somewhat arbitrarily) “normal” or 20/20 visual acuity (the twenty in the numerator refers to the chart’s distance of 20′), or 6/6 visual acuity in metric countries. Other acuities such as 20/10 (6/3 metric) refer to optotypes half that size, or 20/40 (6/12 metric) to optotypes double that size. Expressed in terms of angular resolution, 20/x visual acuity means that a viewer can reliably detect features that are x/20 minutes of arc across.
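
The relationship between a 20/x rating, feature size, and optotype size can be sketched in a few lines. The helper names are hypothetical, and the factor of five between critical feature and full optotype is taken from the description above.

```python
import math

ARCMIN = math.radians(1.0 / 60.0)  # one minute of arc, in radians

def feature_arcmin(denominator, numerator=20.0):
    """Smallest resolvable feature, in minutes of arc, for numerator/denominator acuity."""
    return denominator / numerator

def optotype_size(distance, denominator, numerator=20.0):
    """Diameter of an optotype (five times its critical feature)
    as seen from the given distance, in the same unit as the distance."""
    angle = 5.0 * feature_arcmin(denominator, numerator) * ARCMIN
    return 2.0 * distance * math.tan(angle / 2.0)

size_20_20 = optotype_size(6000.0, 20.0)  # chart at 6 m, result in mm
size_20_40 = optotype_size(6000.0, 40.0)
print(f"20/20: {size_20_20:.2f} mm, 20/40: {size_20_40:.2f} mm")
```

At 6 m, a 20/20 optotype comes out at about 8.7 mm across, and a 20/40 optotype at about twice that. The same function can be used to size virtual objects, given their distance in the virtual environment.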

The process of measuring visual acuity in a repeatable manner, and giving the viewer minimal opportunity to cheat, is best demonstrated in a video (see Figure 2). Note that the measuring utility uses a specific optotype, called “Landolt C” after its inventor, that is more easily randomized and provides exactly one critical feature, a gap that is precisely one minute of arc across at 20/20 size (see Figure 1).

Figure 2: Repeatable visual acuity measurement using feedback-driven test charts, using the standardized “Landolt C” optotype. Test performed on a 28″ 3840×2160 LCD monitor, viewed from a distance of 31.5″, using 16x multisampling.

Measurement Results

The following are results from visual acuity tests I performed myself. By themselves they are neither scientific nor indicative, due to the sample size of one. To make meaningful judgments about the resolution provided by headsets, or the improvements gained from changing parameters such as super-sampling, the measurements would have to be repeated by a large number of test subjects. Nonetheless, these give some first indications.

28″ 2160p LCD Desktop Display

These are measurements I took using my desktop monitor, an Asus PB287Q. I set up rendering parameters such that the displayed size of optotypes precisely matched the sizes of the same optotypes on a real test chart, by carefully measuring the size of the monitor’s display area, and the distance from my eyes to the screen surface.

| Super-sampling | Multi-sampling | Visual Acuity |
|---|---|---|
| 1.0x | 1x | 20/15 |
| 1.0x | 16x | 20/14 |

Interestingly, while I was recording the video in Figure 2 (which generated the result in table row 2), I speculated how the result is probably limited as much by the monitor as by my eyes. While reviewing the video footage I recorded, I realized I was wrong — when “cheating” and leaning in closer, or magnifying the footage, I could see the optotypes more clearly than I could during the test, and could easily notice all the mistakes I made. Meaning, my eyes were the limiting factor here, and are not as good as I thought. 🙁 I need to have a talk with my optometrist. I am estimating that the visual acuity my monitor actually provides is closer to 20/10.

The real resolution of my screen, under the viewing conditions used in the test, is 86.6 pixels per degree, or 0.7 minutes of arc per pixel, which, if nothing else mattered, would correspond to a visual acuity of 20/13.8. That closely matches my result of 20/14, but looking at the test images up close, it is possible to resolve optotypes smaller than that. This can primarily be chalked up to multi-sampling.
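
The pixels-per-degree figure can be approximated from the monitor's nominal specifications. This is a sketch that assumes square pixels and uses the nominal 28″ diagonal; the function name is only illustrative.

```python
import math

def center_ppd(diagonal, res_x, res_y, distance):
    """Pixels per degree at the screen center, assuming square pixels.
    Diagonal and distance must use the same length unit."""
    pitch = diagonal / math.hypot(res_x, res_y)  # size of one pixel
    deg_per_pixel = math.degrees(2.0 * math.atan(0.5 * pitch / distance))
    return 1.0 / deg_per_pixel

ppd = center_ppd(28.0, 3840, 2160, 31.5)
arcmin_per_pixel = 60.0 / ppd
snellen_denominator = 20.0 * arcmin_per_pixel  # one pixel as the critical feature

print(f"{ppd:.1f} ppd, {arcmin_per_pixel:.2f} arcmin/pixel, 20/{snellen_denominator:.1f}")
```

With nominal numbers this lands at roughly 86.5 ppd and 20/13.9, within rounding of the figures above, which were based on a measured display area rather than the nominal diagonal.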

HTC Vive

This is, of course, where it gets interesting. I performed a bunch of tests (again, only on myself), using different rendering settings. My VR software, Vrui, supports several different ways to tweak image quality. The simplest is super-sampling, which exploits that the 3D environment is first rendered to an intermediate frame buffer, and then resampled to the screen’s real pixels during lens distortion correction. This intermediate buffer can be as large or small as desired. For the HTC Vive, the default intermediate size is 1512×1680 pixels, which corresponds to a 1:1 scale in the center of the screen after lens distortion. Super-sampling increases or decreases the intermediate size by some factor, which gives the lens correction step more pixels to work with, and could therefore lead to less aliasing and better clarity.

The second method is multi-sampling, which follows the same approach of rendering more (sub-)pixels to reduce aliasing, but does it under the hood, so that it even works when directly rendering to a display window (such as in my desktop test above). In Vrui, the intermediate frame buffer can use multi-sampling, which has similar effects as super-sampling, but is “cheaper” in some ways, and could still, in theory, offer superior results due to better averaging filters when generating the final pixels.

The third method is an additional tweak to super-sampling. If the chosen super-sampling factor is too large, the method backfires: There are now many pixels to work with, but the reconstruction filter used during lens distortion correction is typically a simple bilinear filter, which is now the limiter on visual quality because it only uses four source pixels when calculating a final pixel color. This would degrade image quality at super-sampling factors close to and above 2.0x. To experiment with this, Vrui has the option of running a bicubic reconstruction filter during lens distortion correction, which uses 16 source pixels to calculate one final image pixel (this is the same filter used in Photoshop or The Gimp when “high quality” or “cubic” image resizing is selected).
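
To make the four-versus-sixteen source pixel difference concrete, here is a plain-Python sketch of both reconstruction filters. The text does not say which cubic kernel Vrui uses, so the Catmull-Rom weights below are an assumption, as are all function names. Pixel centers are taken to sit at integer coordinates.

```python
import math

def texel(img, x, y):
    """Fetch a source pixel, clamping coordinates to the image border."""
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

def bilinear(img, x, y):
    """Reconstruct a value at (x, y) from the 2x2 surrounding source pixels."""
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    top = texel(img, x0, y0) * (1 - fx) + texel(img, x0 + 1, y0) * fx
    bottom = texel(img, x0, y0 + 1) * (1 - fx) + texel(img, x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy

def cubic_weights(t):
    """Catmull-Rom weights for the four samples around fractional offset t."""
    return (
        0.5 * (-t**3 + 2 * t**2 - t),
        0.5 * (3 * t**3 - 5 * t**2 + 2),
        0.5 * (-3 * t**3 + 4 * t**2 + t),
        0.5 * (t**3 - t**2),
    )

def bicubic(img, x, y):
    """Reconstruct a value at (x, y) from the 4x4 surrounding source pixels."""
    x0, y0 = math.floor(x), math.floor(y)
    wx, wy = cubic_weights(x - x0), cubic_weights(y - y0)
    total = 0.0
    for j in range(4):
        for i in range(4):
            total += wy[j] * wx[i] * texel(img, x0 - 1 + i, y0 - 1 + j)
    return total

# A smooth ramp: both filters should reproduce it exactly between pixels.
ramp = [[2.0 * x + 3.0 * y for x in range(8)] for y in range(8)]
print(bilinear(ramp, 2.25, 3.5), bicubic(ramp, 2.25, 3.5))
```

On smooth content the two filters agree; they differ on sharp edges and fine detail, which is exactly what the optotypes consist of.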

The following table is a non-exhaustive (well, to me, it was!) series of tests playing with those three parameters:

| Super-sampling | Multi-sampling | Filter | Visual Acuity |
|---|---|---|---|
| 1.0x | 1x | bilinear | 20/42 |
| 1.0x | 16x | bilinear | 20/46 |
| 2.0x | 1x | bilinear | 20/35 |
| 2.0x | 16x | bilinear | 20/32 |
| 2.0x | 16x | bicubic | 20/36 |
| 3.0x | 1x | bilinear | 20/32 |
| 3.0x | 1x | bicubic | 20/34 |

Besides the raw numbers, performing these tests was interesting in itself. At the base settings (1.0xSS, 1xMS, bilinear), everything looked pixelated and jaggy, but I could still resolve shapes smaller than the actual pixel grid, by continuously moving my head side-to-side. Due to head tracking, this caused the fixed pixel grid to move relative to the fixed optotypes, which meant that on subsequent frames, different points of those optotypes were sampled into the final image, which let my brain form a hyper-resolution virtual image. I know this is not a new discovery, but my Google-fu is failing me and I cannot find a reference or an agreed-upon name for this phenomenon right now, so I’m going to call it “temporal super-sampling” for the time being.
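
A toy one-dimensional model illustrates the effect. It assumes pure point sampling at pixel centers and a feature narrower than one pixel; the gap position, the head-movement offsets, and the function name are all made up for illustration.

```python
def visible_frames(gap_start, gap_end, offsets, num_pixels=20):
    """Count the frames in which at least one pixel center lands inside
    the gap. Each offset shifts the whole pixel grid by a fraction of a
    pixel, standing in for a small tracked head movement."""
    hits = 0
    for offset in offsets:
        centers = (i + 0.5 + offset for i in range(num_pixels))
        if any(gap_start <= c < gap_end for c in centers):
            hits += 1
    return hits

# A gap a quarter of a pixel wide, sitting between two pixel centers.
static_hits = visible_frames(10.0, 10.25, [0.0] * 5)
moving_hits = visible_frames(10.0, 10.25, [-0.4, -0.2, 0.0, 0.2, 0.4])
print(static_hits, moving_hits)
```

With a static grid the gap is never sampled, while with a moving grid it shows up in some frames. That gives the viewer intermittent evidence of detail below the pixel size.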

The difference going from the base settings to 16x multi-sampling was a vast visual improvement — no more jaggies, beautiful straight and uniformly wide screen protector lines — but, somehow, this improvement caused a decrease in visual acuity. This might just be an outlier, but one possible explanation is that the smoothness of multi-sampling interferes with temporal super-sampling. Because the optotypes are sampled evenly in every frame, their images don’t change appreciably under head movement.

Going from 1.0x to 2.0x super-sampling (and back to 1x multi-sampling) brought the jaggies back, but apparently it also brought temporal super-sampling back. Things looked subjectively worse than in the previous test, but the resulting acuity of 20/35 is surprisingly high.

Adding 16x multi-sampling to 2.0x super-sampling yielded another improvement, removing jaggies without a concomitant loss in visual acuity. The question is whether this setting is realistic for non-trivial VR applications, given that the graphics card would have to render 10 megapixels (or 160 mega-sub-pixels) per eye per frame, 90 times per second. The simple vision test utility, at least, didn’t break a sweat, comfortably coasting along at 480 fps.
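
The rendering load quoted above can be reproduced with a quick calculation. It assumes the super-sampling factor applies to each axis of the intermediate buffer, which is what makes the 10 megapixel figure work out; the function name is only illustrative.

```python
def render_load(base_size, supersampling, multisampling):
    """Return (pixels, sub-samples) rendered per eye per frame,
    assuming the super-sampling factor scales width and height alike."""
    width = round(base_size[0] * supersampling)
    height = round(base_size[1] * supersampling)
    pixels = width * height
    return pixels, pixels * multisampling

VIVE_BASE = (1512, 1680)  # default intermediate size quoted in the text

pixels, samples = render_load(VIVE_BASE, 2.0, 16)
samples_per_second = samples * 2 * 90  # two eyes at 90 Hz

print(f"{pixels / 1e6:.1f} MP and {samples / 1e6:.0f} M sub-samples per eye per frame")
print(f"{samples_per_second / 1e9:.1f} G sub-samples per second")
```

That is a bit over 10 megapixels and roughly 163 million sub-samples per eye per frame, or about 29 billion sub-samples per second for both eyes.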

At 3.0x super-sampling without multi-sampling, aliasing came back big time, even when using a bicubic reconstruction filter, dropping subjective quality below 2.0x levels. But the acuity results remained high.

In general, it appears that bicubic filtering during lens distortion correction at high super-sampling factors reduces visual acuity. I don’t have a good explanation for this, and need to look at it more closely.

Conclusion

I believe, and I think my preliminary tests show, that it is possible to use subjective visual acuity, measured via a feedback-driven automated system, as a reliable way to judge the visual clarity, or effective resolution, of head-mounted or regular displays. The measure takes effects besides raw display panel resolution and lens magnification into account, and even applies to future head-mounted displays that might use foveated rendering, near-eye light fields, or holography as their display technologies. In a practical sense, it also allows evaluation of different display settings, and gives users and developers a way to predict required minimum sizes for user interface elements, depending on what display hardware and rendering settings are used.

On another note, I need to admit a mistake. Before running these tests, I repeatedly claimed that the visual acuity provided by current VR headsets, specifically the HTC Vive, would be on the order of 20/60. This was based on an earlier implementation of the vision test utility, which was not yet automated and relied on self-assessment by the user. My results there were worse, because at first glance, optotypes below 20/60 looked unresolvable. But the automated method shows that I was able to identify optotypes at a rate that would be hard to explain by guessing. If my math is right, the probability of correctly identifying four or five out of five optotypes, under the assumption that the user is picking orientations randomly, would be 0.11%.
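
The guessing probability is a plain binomial tail. The sketch below assumes eight possible gap orientations per optotype. The text does not state that number, but it is the value that reproduces the 0.11% figure.

```python
from math import comb

def chance_of_guessing(min_correct, trials, orientations):
    """Probability of getting at least min_correct of trials right
    when every answer is a uniform random pick among the orientations."""
    p = 1.0 / orientations
    return sum(
        comb(trials, k) * p**k * (1.0 - p) ** (trials - k)
        for k in range(min_correct, trials + 1)
    )

p_guess = chance_of_guessing(4, 5, 8)
print(f"{100.0 * p_guess:.2f}%")
```

With only four orientations the same calculation would give about 1.6%, so the number of orientations matters a great deal for how convincing a pass is.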

This does not mean that it was easy identifying those optotypes, or that it would be practical or even possible to read text at those sizes. It took me several seconds each, and a lot of squinting, to identify them during the later stages of each test. Nevertheless, it appears that my old 20/60 estimate was too pessimistic.

I don’t think you should consider the display as “magnified by lenses”; it’s just “properly focused”, as our eyes are not able to properly focus objects so close to them.

I set up a page to calculate the best viewing distance for a TV depending on its resolution, but it also works fine to figure out which resolution a display should have to make the “screen-door effect” (aka “pixelation”) disappear.

http://jumpjack.altervista.org/acuita/

My Samsung S7 has a 5.1″ WQHD display (2560×1440).

For a 5.1″ display, at 9 cm distance my page says I can see 2433 lines, which, being more than the available lines, results in some screen-door effect. I should move the display 15 cm away to get optimal vision, but unfortunately this would lead to a mere 21° FOV, compared to 32° at 9 cm (the distance available in my head-up display).

To make the screen-door effect disappear in my head-up display I would need at least a 4K display (2160 lines).

Putting displays even closer to eyes would allow a better FOV (90° would be nice), but this would start causing too much distortion and aberration on edges of the images; I think the way to go is curved displays with 8K resolution.

Maybe in 2020 or around that….

There is some minor actual magnification from the lenses, but in principle you are correct about the lenses’ purpose. I decided the shorthand would be fine here; for full details there’s always the article “Head-mounted Displays and Lenses.”

Only tangentially related, but have you come across this recent optical solution for 2D viewing?

https://www.indiegogo.com/projects/mogo-immersive-cinematic-experience-for-2d-video-cinema-smartphone

It’s not their main focus, but they mention applications to VR in their patents. (Assuming there exists an appropriate color filter or LCD switching mechanism.)

-Rob

The point being that it may offer a potential solution to double existing VR headset resolutions by solving a different, hopefully easier problem.

Their idea seems to be to use relatively complex optics to project a single screen to two virtual screens. If your goal is to make a personal video viewer, then that’s a smart approach. There are detail problems, such as how they spread apart the virtual screens so that viewers don’t have to cross their eyes to see them, but I can’t assess those without knowing the details of their optics.

I’m not holding my breath for their product, as I personally think head-mounted personal video viewers are a bad idea. I have a Sony HMZ-T1, and hated using it so much I watched exactly half a movie in it ($800 well spent!). The screen following you around as you move your head means the only way to look at the corners or edges of the screen is to move your eyes, and if the virtual screen is big — bigger than a typical desktop monitor, and if it isn’t, what’s the point? — that gets painful. Also, they tout the lack of head tracking as a positive; for me it was definitely a negative. I got quite dizzy after a while. These are personal judgments.

Anyway, for VR, this is obviously a no-go. The entire idea is about showing the same image to both eyes. If you want to do stereo or VR, you would then have to use some form of multiplexing, say “active stereo” time-domain multiplexing, or anaglyphic or sub-band frequency-domain multiplexing. All of those have flaws, the same as 3D TVs actually. I would guess that it’s easier to double the number of pixels of a VR headset’s screens than it would be to turn this into a VR headset.

Hi.

Thanks for the feedback.

I wouldn’t call it relatively complex, as it’s already shipping as a single set of lenses in a relatively cheap form factor ($60-$80 if I recall).

Personally, I’ve watched entire TV series on my Samsung S7, so I feel this might make sense for some setups (airplanes, buses, late-night bed watching, etc.). Add to that the fact that it doesn’t require any special software, and runs all apps ‘natively’ at original resolution with full original touchscreen access.

(disclaimer: I have nothing to do with them, just find their approach unique!).

But as for VR, is it really established that doubling the resolution of an OLED screen is easier than multiplexing?

By their own marketing, they claim a 25% pixel usage on current conventional setups, so this seems like quite a jump in quality if one could find a suitable multiplexing system.

Current shutter glasses run at 120 Hz (60 Hz x 2), so that seems not far from an ideal 90 Hz x 2 solution, for example.

As for sub-band anaglyphic solutions, I’ve found many more people complaining about screen-door effects than about a limited color palette in any VR experience.

my two cents,

Cheers

Rob

I gave them the benefit of the doubt by assuming “complex” optics. If they only have a single lens per eye, they should have a real problem with requiring the user to cross their eyes to see the full screen, or their claim that the user can see the whole screen with both eyes is a fib. I’d have to try this.

Their claim of 25% pixel usage in VR headsets is misleading. It only applies to the special case of viewing 16:9 video content. In Rift and Vive, the screens have a portrait orientation. If you want to fit 16:9 footage onto those screens, you do indeed lose a lot of pixels. But for displaying general VR content, the ratio of used pixels is much higher.

“or their claim that the user can see the whole screen with both eyes is a fib. I’d have to try this.”

I shall contact them and ask them.

You’ve piqued my interest!

“Their claim of 25% pixel usage in VR headsets is misleading.”

Their marketing is aimed at viewing 2D content on touch-screen phones in a mobile headset / virtual screen environment.

ie: planes, casual viewing etc.

The extension to VR content is not mentioned anywhere publicly (except deep in their patent as a potential application).

I emailed mogo and they were good enough to clarify some of our points, that I can post here:

(part 1/2)

“First, I found this blog is very professional , interesting and relevant for our Company that also working on VR-170deg and VR-210deg optics. I probably will find some time to respond there, and look for cooperation with the guys from this blog, but currently we are very focused on MoGo marketing.”

All what Oliver and you discussed is correct; what is missing for Oliver claiming that seeing full screen will make impossible eyes convergence, is the info that main part of MoGo lenses innovation – is extremely strong prismatic power, doubling single display into two enlarged virtual displays taken far away and shifting their centers toward visual axes of straight gazing eyes, see below:

https://c1.iggcdn.com/indiegogo-media-prod-cld/image/upload/c_limit,w_620/v1502115550/n3qnzuu22cwttz0yjspk.jpg

2/2

“The solution is good for 16:9 contents and provides x4 sharpness, that as correctly noticed could be also be stereoscopic. Also, it is true that with horizontal FoV of 90 degrees it is hard to take eyes to peripheral zones and therefore solution is good for cases when the action is in the center – mostly in movies and flying drones. In addition the eye movements is good workout for the extra-ocular-muscles. However, vertical FoV is limited in this solution by 50degress, and therefore, solution is not appropriate for immersive VR.

Hope that I did some clarifications that are also clear to you and you can use this info for the Blog”


Hi, with a lot of interest I read your scientific article. Well done!

I have been into gaming for over 30 years and into VR for 10 weeks now. There is a lot of work to get it right, and, as is the nature of first movers, there are a lot of people with subjective opinions out there, including myself. OEMs are not very helpful; it is a playground for enthusiasts. Your work is highly appreciated.

I do own the HTC Vive, Geforce 1080ti@2,0 and Intel Core i7 i7700k@4,9, together pretty close to what you can buy for reasonable money these days. All and every possible setting maxed out in Bios, power supply, Windows and Taskmanager.

I agree with your observations and interpretation and as a hardcore Sim-Racer it is really a trade off between shimmering, blurriness and sharpness. I believe I have spent close to 100 hrs on optimization and testing all possible settings and combinations and for a real life application such as racing – for which all users ask for maximum immersion as a nature of the highspeed challenge – I can replicate your findings by using multisampling and no Supersampling versus AA and maximum supersampling amongst all variants of other combinations.

Well, I need to know where the other car exactly is (your point on visual acuity), with SS 3.0 I get the best results in terms of sharpness but objects are shimmering (as well as in 1.5 or 2.0). With maximum multisampling and SS 1.0 I get no shimmering but blurry objects and less sharpness. The first is easier on the eyes, the latter is more practical for the purpose of the application.

Last but not least: A 4K TV is by far superior to any HMD and I can easily get way above 100 FPS with all settings maxed out and a flawless image. But the immersion factor in VR is so dominant, anyone and no matter if they are novice or expert, will be seconds a lap faster just by getting this sense of sitting right in that cockpit. I wonder what will be possible with 4K HMDs pretty soon: No shimmering, no blurriness and crystal clear VR maybe.

Just wanted to let you know that I have tested your findings and give you feedback,

Kind regards

Chris

Thanks for posting your observations. Another game where people have experimented a lot with different settings to optimize visual acuity is Elite: Dangerous, but I haven’t tried that myself. I wonder whether the results are the same there as in racing games.

Nice article!!! Thanks! I’m just approaching to VR for a neuroscience study and wondering which is the best way to calculate/calibrate the visual stimuli size in the VR environment (Unity3D/UnrealEngine) given its visual degrees-expressed dimension. It could be another good theme for this interesting blog!!

Hi okreylos,

what I hear from Elite users sounds so much different. Even with my one or two other VR applications it is a completely different ball game: GoogleVR is perfect, Arizona Sunshine is too. I am not testing high-speed ego shooters, but from what I take from past experience, there is nothing like a racing sim when it comes to parallel calculations for the CPU and GPU: the sheer speed of bringing 10+ cars at 300 km/h in focus in front, beside and behind you with no stuttering and an exact representation of their position, together with the world flying by and rendering the track, the cars and the sky. All in all, this is to my knowledge the most demanding VR application today.

A lot of VR is perception though. If you focus on the screen-door effect, you will see it. If you focus on shimmering on the edges of the buildings, you will see it. If you allow full immersion in your head, you see the main action. It is like listening to audiophile music on an expensive stereo: some days you love it and some days you think it is all out of sync.

Chris