Leap Motion‘s Leap, an optical tracking system enabling using one’s hands directly to interact with computers in three dimensions, has been the talk of the town recently. So what’s my take on it, and particularly its use for immersive graphics?

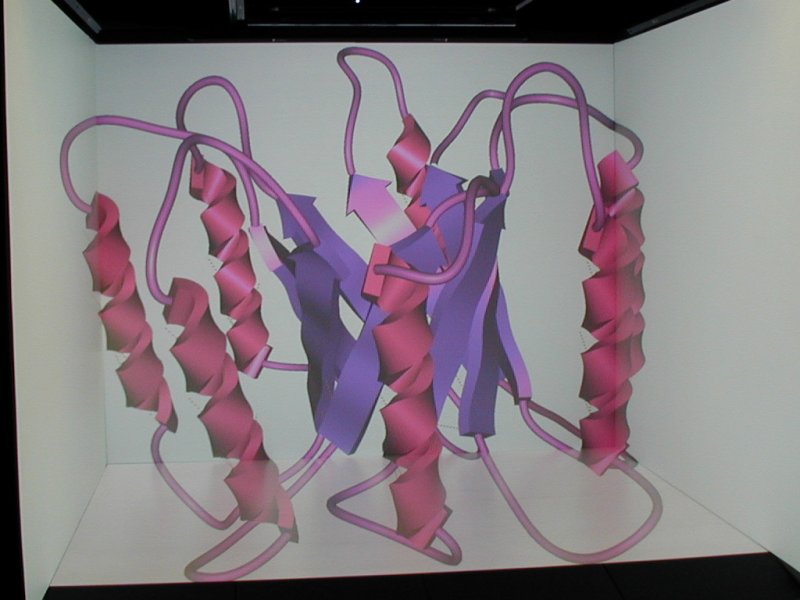

Cool story, bro. Two months ago, a group of researchers from UC Davis and I visited the company in their San Francisco offices to see the device for ourselves. Several of Leap Motion’s engineers had seen our booth at the recent Bay Area Maker Faire, and invited us to bring one of our low-cost semi-immersive displays (a 3D TV with a Razer Hydra 6-DOF input device) and show our stuff. We obliged, packed our things, and down along I-80 to SF we went. We showed them ours, they showed us theirs, and fun was had by all.

So what’s the intelligence gathered from this visit? There’s good news, and there’s bad news. The good news is the hardware. Leap Motion have been touting the Leap as a much more precise alternative to the Kinect, and they have that absolutely right. The precision, resolution, and responsiveness of the device are exactly what they claim. Interestingly, I did not glean that insight from the actual software demos they were showing, but from a very simple utility that just showed the raw 3D point cloud of everything that entered the device’s capture space, and identified hands, fingers, and other gadgets such as pencils accurately and in real time. Having done extensive work with the Kinect, I can say that it’s an entirely different kind of tracking, altogether.

So what’s the bad news? Well, as usual, it’s the software and application side. Leap Motion’s company line is that the Leap will make mouse and keyboard obsolete. Not so fast there, buckaroo. Probably 99.99% of computer interactions done by normal people are two-dimensional in nature, and the mouse/keyboard are really good at those. You would not want to use a free-space 3D interface for intrinsically 2D interactions, which is, incidentally, my only gripe with the famous Minority Report interface (but that’s a topic for another post). The end result from doing that already has a fitting name: “Gorilla Arm.” I think I can speak to that because that’s exactly what happens when you’re doing 2D tasks (like using a web browser or filling in a spreadsheet) in an immersive display environment. Trust me, it’s not something you want to do if you can avoid it.

On the other hand, if you’re one of the minority of people who use their computers for 3D tasks, e.g., 3D modeling, sculpting, or, naturally, immersive 3D graphics, it’s an entirely different story. For such applications in the desktop realm, the Leap is a godsend. Instead of having to do the mental gymnastics of using a 2D input device to perform 3D interactions, you just interact directly with the 3D data. This is, again, exactly what’s happening in immersive graphics, and yes, it’s something you definitely do want to do.

So that’s good news, right? Well, yeah, but… The problem here is, and it’s a big problem, that in order to pipe 3D interactions captured by a device like the Leap into a 3D application, you have to punch through the existing 2D-based user interface of that application. The previous approach companies developing novel 3D input devices (think all the data gloves, 3D mice, etc. that have come out and failed over the years) have taken is to provide some form of mouse emulation, so that their devices can be used immediately with existing software. This does not work, ever. In this setup, 3D interactions performed with the device are first boiled down to 2D by the device’s driver, fed into the application, and then turned back into 3D interactions using whatever interface paradigm the application is using. The first step, going from 3D to 2D, is already awkward, and the second step is typically optimized for particular 2D devices, such as mice, which a “simulated” mouse device is most decidedly not. In other words, there are two levels of ill-fitting interface paradigms stacked on top of each other.

So what needs to be done? The answer is quite simple: if you want to effectively use the Leap with a piece of 3D software, that software has to explicitly support the Leap, and needs to use appropriate direct 3D interaction metaphors. Meaning the application developers have to buy into the Leap, dream up good problem-specific 3D interaction metaphors, do studies or experiments to fine-tune them, and then include them in their software. That takes a lot of time and money, and they won’t do it unless there is high demand, i.e., the Leap is already a widely-used device. But it won’t become a widely-used device unless a lot of widely-used 3D software already supports it in an effective way.

So it’s a classical chicken-and-egg problem. Unless you happen to use a certain VR development toolkit that is based around exactly this idea: providing device-optimized 3D interaction metaphors outside of an application’s purview, so that hardware developers can integrate their devices into existing applications without having to change those applications in any way, or even getting to their source code. But I digress…

Back on topic, what Leap Motion need to do is find at least one “killer application,” and do their utmost to get that application just exactly right. And then they have to bundle that application with every device sold. If the people buying their device are stuck with playing Fruit Ninja, or navigating with Google Earth (another thing a mouse is really good at, because Google successfully boiled down the interaction to 2D, and Leap’s Google Earth plug-in doesn’t add any new functionality) or have to use the device to write emails, they won’t recommend it to their friends.

By the way: will the Leap work out-of-the box for 3D video games? Hard to say, but I’m skeptical. They show a “finger gun” control scheme for first-person shooters — again implemented via mouse emulation — but doing that for more than a few minutes will lead to a very sore shoulder. Not that it’s a bad idea in itself — see below for a video showing exactly that interface in a CAVE — but unless the Leap is integrated into a fully calibrated desktop system, it won’t allow a player to actually aim with the “finger gun;” it will be just an equally indirect replacement for moving the mouse left-to-right.

On their web site, Leap Motion mention CAD and clay modeling as applications that inspired them to develop it. Could these be killer applications? Time will tell, but it’s at least a good starting point. So, go ahead and do it! I happen to have a 3D virtual clay modeling application with direct 3D interaction metaphors lying around, just saying…

Now, to restate my overall point after all this skepticism. From what I’ve personally seen, the Leap is an awesome device. I will definitely buy at least one when it comes out. That’s because all the software I’m developing and using on a daily basis is already poised to work with it, due to its input abstraction paradigm. Give me a low-level driver, and the rest is gravy — please, give me a low-level driver! But will the device succeed in the mainstream market, given the issues discussed here? Will it sell hundreds of millions of units, as they hope? For that to happen, I think, they’ll have to do significantly more than what they showed us. Maybe that’s why they pushed back the release date by half a year — here’s hoping.