I know, the Oculus Rift DK2 is obsolete equipment, but nonetheless — there are a lot of them still out there, it’s still a decent VR headset for seated applications, I guess they’re getting cheaper on eBay now, and I put in all the work back then to support it in Vrui, so I might as well describe how to use it. If nothing else, the DK2 is a good way to watch DVD movies, or panoramic mono- or stereoscopic videos, in VR.

Category Archives: VR Software

Vive la Vrui!

It has been way too long that I have publicly released a new version of the Vrui VR toolkit. The main issue was that I had been chasing evolving hardware, from the Oculus Rift DK1 to the Oculus Rift DK2, and now to HTC’s Vive. During that long stretch of time, I was never happy with the state of support of any of these devices.

That’s finally changed. I have been working on full native support for HTC’s Vive head-mounted display over the last few months (with the first major break-through in May), and I think it’s working really well. There are still a lot of improvements to make and sharp edges to sand off, but I feel it is worthwhile releasing the software as it is now to get some early testing done. So without much further ado, here is Vrui-4.2-004.

Figure 1: Vrui’s ClusterJello toy application running on an HTC Vive head-mounted display. Recorded using a second-generation Microsoft Kinect camera (Kinect-for-Xbox-One).

Continue reading

Keeping VR users from hurting themselves

Just the other day, I jumped on the wayback machine and posted an article about our work in immersive tele-collaboration, featuring research (and a video) from about four years ago. The shame! I figured it would be excusable that one time, and I would never do it again. Oh well, here we go.

Keeping VR users from hurting themselves

… or their expensive VR equipment.

It’s a pretty big deal. Virtual Reality, especially its head-mounted implementation, is quite good at overriding its users’ sense of place and space. “Presence,” or the feeling of bodily being in a place where one knows to be not, is a powerful and compelling experience, but it has a downside: users experiencing it lose touch with their real physical environments. Exhibit A: Figure 1 (granted, there are some concerns that the following video clip was staged, but let’s pretend it’s for reals).

Figure 1: When instinct takes over. Source: imgur

To prevent this kind of thing from happening — at least in most cases — Valve implemented a system called “Chaperone” into the SteamVR run-time framework that runs their and HTC’s Vive VR headset (and potentially other headsets, through Valve’s OpenVR layer). Continue reading

For Science!

I’ve been busy finalizing the upcoming 4.0 release of the Vrui VR toolkit (it looks like I will have full support for Oculus Rift DK2 just before it is obsoleted by the commercial version, haha), and needed a short break.

So I figured I’d do something I’ve never done before in VR, namely, watch a full-length theatrical movie. I’m still getting DVDs from Netflix like it’s 1999, and I had “Avengers: Age of Ultron” at hand. The only problem was that I didn’t have a VR-enabled movie player.

Well, how hard can that be? Not hard at all, as it turns out. I installed the development packages for the xine multimedia framework, browsed through their hacker’s guide, figured out where to intercept audio buffers and decoded video frames, and three hours later I had a working prototype. A few hours more, and I had a user interface, full DVD menu navigation, a scrub bar, and subtitles. In 737 lines of code, a big chunk of which is debugging output to trace the control and data flow of the xine library. So yeah, libxine is awesome.

Then it was time to pull the easy chair into the office, start VruiXine, put on the Rift, map DVD navigation controls to the handy SteelSeries Stratus XL bluetooth gamepad they were giving away at Oculus Connect2, and relax (see Figure 1).

Figure 1: The title menu of the “Avengers: Age of Ultron” DVD in a no-frills VR movie player (VruiXine). Fancy virtual environments are left as an exercise for the reader.

Zero-latency Rendering

I finally managed to get the Oculus Rift DK2 fully supported in my Vrui VR toolkit, and while there are still some serious issues, such as getting the lens distortion formulas and internal HMD geometry exactly right, I’ve already noticed something really neat.

I have a bunch of graphically simple applications that run at ridiculous frame rates (some get several thousand fps on an Nvidia GeForce 770 GTX), and with some new rendering configuration options in Vrui 4.0 I can disable vsync, and render directly into the display window’s front buffer. In other words, I can let these applications “race the beam.”

There are two main results of disabling vsync and rendering into the front buffer: For one, the CPU and graphics card get really hot (so this is not something you want to do this naively). But second, let’s assume that some application can render 1,000 fps. This means, every millisecond, a new complete video frame is rendered into video scan-out memory, where it gets picked up by the video controller and sent across the video link immediately. In other words, almost every line of the Rift’s display gets a “fresh” image, based on most up-to-date tracking data, and flashes this image to the user’s retina without further delay. Or in other words, total motion-to-photon latency for the entire screen is now down to around 1ms. And the result of that is by far the most solid VR I’ve ever seen.

Not entirely useful, but pretty cool nonetheless.

On the road for VR: Silicon Valley Virtual Reality Conference & Expo

Yesterday, I attended the second annual Silicon Valley Virtual Reality Conference & Expo in San Jose’s convention center. This year’s event was more than three times bigger than last year’s, with around 1,400 attendees and a large number of exhibitors.

Unfortunately, I did not have as much time as I would have liked to visit and try all the exhibits. There was a printing problem at the registration desk in the morning, and as a result the keynote and first panel were pushed back by 45 minutes, overlapping the expo time; additionally, I had to spend some time preparing for and participating in my own panel on “VR Input” from 3pm-4pm.

The panel was great: we had Richard Marks from Sony (Playstation Move, Project Morpheus), Danny Woodall from Sixense (STEM), Yasser Malaika from Valve (HTC Vive, Lighthouse), Tristan Dai from Noitom (Perception Neuron), and Jason Jerald as moderator. There was lively discussion of questions posed by Jason and the audience. Here’s a recording of the entire panel:

One correction: when I said I had been following Tactical Haptics‘ progress for 2.5 years, I meant to say 1.5 years, since the first SVVR meet-up I attended. Brainfart. Continue reading

Archaeologists use LiDAR to find lost cities in Honduras

I wasn’t able to talk about this before, but now I guess the cat’s out of the bag. About two years ago, we helped a team of archaeologists and filmmakers to visualize a very large high-resolution aerial LiDAR scan of a chunk of dense Honduran rain forest in the CAVE. Early analyses of the scan had found evidence of ruins hidden under the foliage, and using LiDAR Viewer in the CAVE, we were able to get a closer look. The team recently mounted an expedition, and found untouched remains of not one, but two lost cities in the jungle. Read more about it at National Geographic and The Guardian. I want to say something cool and Indiana Jones-like right now, but I won’t.

Figure 1: A “were-jaguar” effigy, likely representing a combination of a human and spirit animal, is part of a still-buried ceremonial seat, or metate, one of many artifacts discovered in a cache in ruins deep in the Honduran jungle.

Photograph by Dave Yoder, National Geographic. Full-resolution image at National Geographic.

Messing around with 3D video

We had a couple of visitors from Intel this morning, who wanted to see how we use the CAVE to visualize and analyze Big Datatm. But I also wanted to show them some aspects of our 3D video / remote collaboration / tele-presence work, and since I had just recently implemented a new multi-camera calibration procedure for depth cameras (more on that in a future post), and the alignment between the three Kinects in the IDAV VR lab’s capture space is now better than it has ever been (including my previous 3D Video Capture With Three Kinects video), I figured I’d try something I hadn”t done before, namely remotely interacting with myself (see Figure 1).

Hacking the Oculus Rift DK2, part IV

Link

Note: This is part 4 of a four-part series. [Part 1] [Part 2] [Part 3]

I caved and uploaded a snapshot of the current optical tracking sources, including a pre-release snapshot of upcoming Vrui-3.2-001 (please don’t use it outside of the tracking project; it’s bound to change some before it’s really released), to github: http://github.com/Doc-Ok/OpticalTracking.

Hacking the Oculus Rift DK2, part III

Note: This is part 3 of a four-part series. [Part 1] [Part 2] [Part 4]

In the previous part of this ongoing series of posts, I described how the Oculus Rift DK2’s tracking LEDs can be identified in the video stream from the tracking camera via their unique blinking patterns, which spell out 10-bit binary numbers. In this post, I will describe how that information can be used to estimate the 3D position and orientation of the headset relative to the camera; the first important step in full positional head tracking.

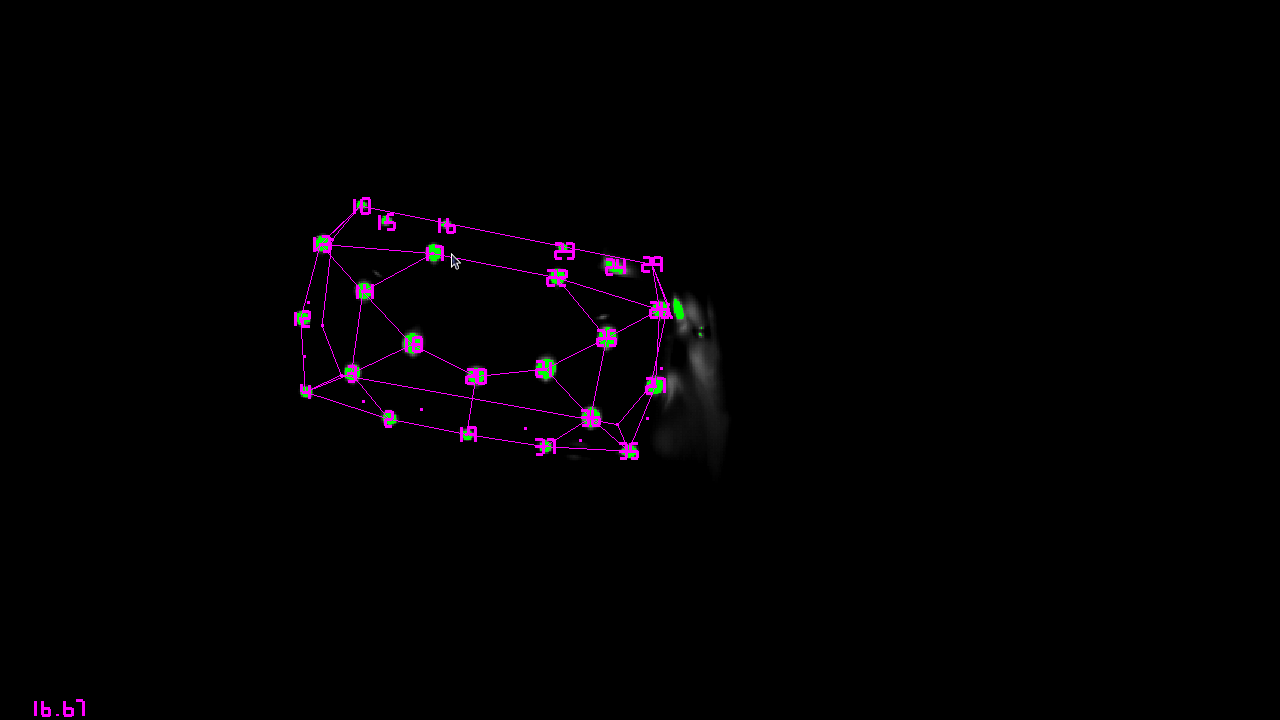

Figure 1: Still frame from pose estimation video, showing a 3D model of the DK2’s headset (the purple wireframe) projected onto a raw 2D video frame from the tracking camera based on reconstructed position and orientation.

3D pose estimation, or the problem of reconstructing the 3D position and orientation of a known object relative to a single 2D camera, also known as the perspective-n-point problem, is a well-researched topic in computer vision. In the special case of the Oculus Rift DK2, it is the foundation of positional head tracking. As I tried to explain in this video, an inertial measurement unit (IMU) by itself cannot track an object’s absolute position over time, because positional drift builds up rapidly and cannot be controlled without an external 3D reference frame. 3D pose estimation via an external camera provides exactly such a reference frame. Continue reading