Now this is why I run a blog. In my video and post on the Oculus Rift’s internals, I talked about distortions in 3D perception when the programmed-in camera positions for the left and right stereo views don’t match the current left and right pupil positions, and how a “perfect” HMD would therefore need a built-in eye tracker. That’s still correct, but it turns out that I could have done a much better job approximating proper 3D rendering when there is no eye tracking.

This improvement was pointed out by a commenter on the previous post. TiagoTiago asked if it wouldn’t be better if the virtual camera were located at the centers of the viewer’s eyeballs instead of at the pupils, because then light rays entering the eye straight on would be represented correctly, independently of eye vergence angle. Spoiler alert: he was right. But I was skeptical at first, because, after all, that’s just plain wrong. All light rays entering the eye converge at the pupil, and therefore that’s the only correct position for the virtual camera.

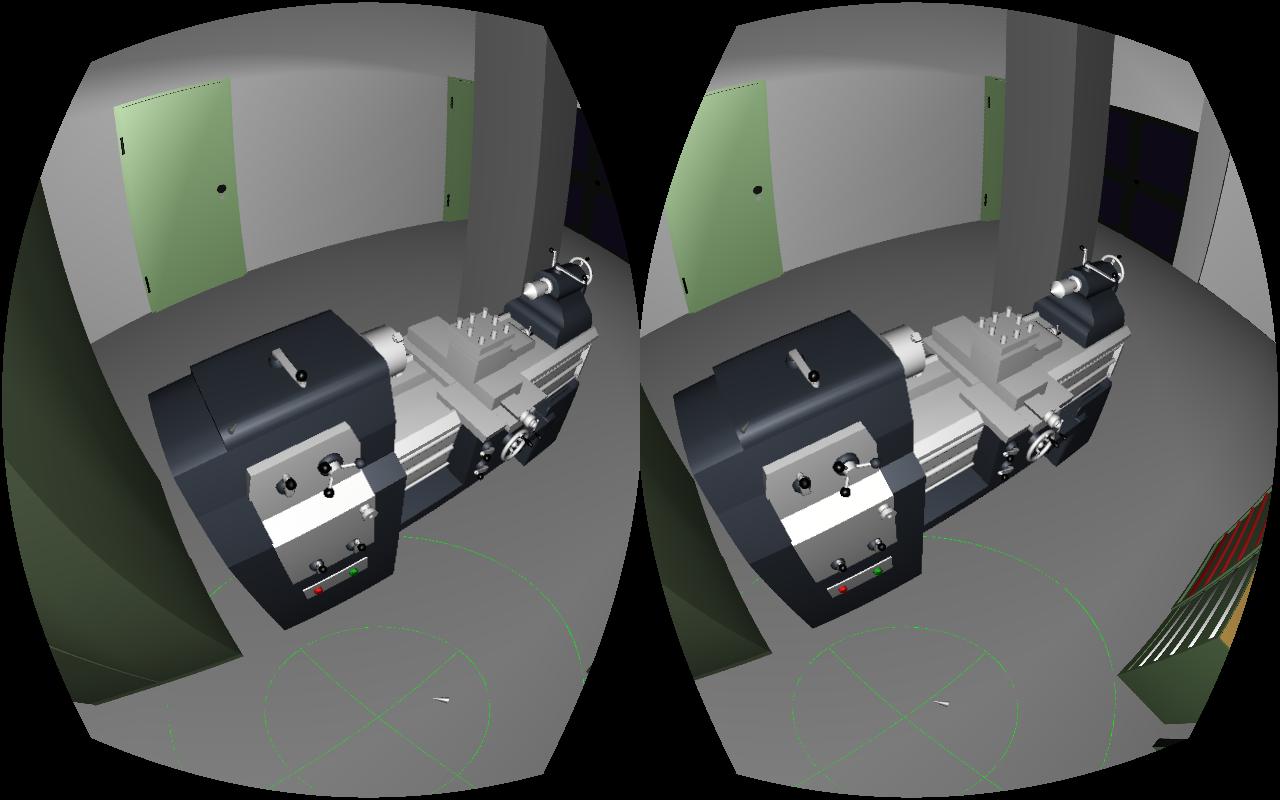

Well, that’s true, but if the current pupil position is unknown due to lack of eye tracking, then the only correct thing turns into just another approximation, and who’s to say which approximation is better? My hunch was that the distortion effects from having the camera in the center of the eyeballs would be worse, but given that projection in an HMD involving a lens is counter-intuitive, I still had to test it. Fortunately, adding an interactive foveating mechanism to my lens simulation application was simple.

Turns out that I was wrong: in the presence of a collimating lens, i.e., a lens positioned such that the HMD’s display screen is in the lens’s focal plane, distortion from placing the camera in the center of the eyeball is significantly less pronounced than from placing it at a fixed, programmed-in pupil position as in my approach. Just don’t ask me to explain it for now; it’s due to the “special properties of the collimated light.” 🙂
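For a rough feel of one such property, here is a minimal sketch assuming an idealized paraxial thin lens. This is a textbook simplification, not the lens simulation application mentioned above, and the function names and numbers are made up for illustration. It shows that when the screen sits exactly in the focal plane, every ray leaving a given pixel exits the lens with the same slope, no matter where it hits the lens. In this idealized model the direction in which a pixel appears therefore does not depend on where the pupil sits behind the lens. Real HMD lenses are not ideal, so this is at best part of the story.

```python
def exit_slope(pixel_height, lens_hit_height, screen_distance, focal_length):
    """Slope of a ray after an ideal thin lens (paraxial approximation).

    The ray starts at a pixel at `pixel_height` on the screen, travels
    `screen_distance` to the lens, and hits the lens at `lens_hit_height`.
    All names and units here are hypothetical, chosen for illustration.
    """
    slope_in = (lens_hit_height - pixel_height) / screen_distance
    # Thin-lens refraction: the slope changes by -(hit height) / (focal length).
    return slope_in - lens_hit_height / focal_length


def slope_spread(pixel_height, screen_distance, focal_length, hit_heights):
    """Difference between the largest and smallest exit slope over a set of
    lens hit heights. Zero means the outgoing light is collimated."""
    slopes = [
        exit_slope(pixel_height, y, screen_distance, focal_length)
        for y in hit_heights
    ]
    return max(slopes) - min(slopes)


if __name__ == "__main__":
    focal_length = 0.05            # assumed 50 mm lens, in meters
    pixel_height = 0.01            # pixel 10 mm off the optical axis
    hit_heights = [-0.01, 0.0, 0.01]

    # Screen in the focal plane: all exit slopes agree (collimated light).
    print(slope_spread(pixel_height, focal_length, focal_length, hit_heights))

    # Screen 10 mm too close to the lens: exit slopes now depend on where
    # the ray hits the lens, so the apparent direction shifts with the pupil.
    print(slope_spread(pixel_height, 0.04, focal_length, hit_heights))
```

With the screen in the focal plane, the exit slope works out to minus the pixel height divided by the focal length, and the lens hit height cancels. Moving the screen out of the focal plane breaks that cancellation.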