I promised I would keep off-topic posts to a minimum, but I have to make an exception for this. I just found out that Roger Ebert died today, at age 70, after a long battle with cancer. This is very sad, and a great loss. There are three primary reasons why I have always stayed aware of Mr. Ebert’s output: I love movies, video games, and 3D, and he had strong opinions on all three of those areas.

When hearing about a movie, my first step is always the Internet Movie Database, and the second step is a click-through to Mr. Ebert’s review. While I didn’t always agree with his opinions, his reviews were always very useful in forming an opinion; and anyway, after having listened to his full-length commentary track on Dark City — something that everybody with even a remote interest in movies or science fiction should check out — he could do no wrong in my book.

I do not want to weigh in on the “video games as art” discussion, because that’s neither here nor there.

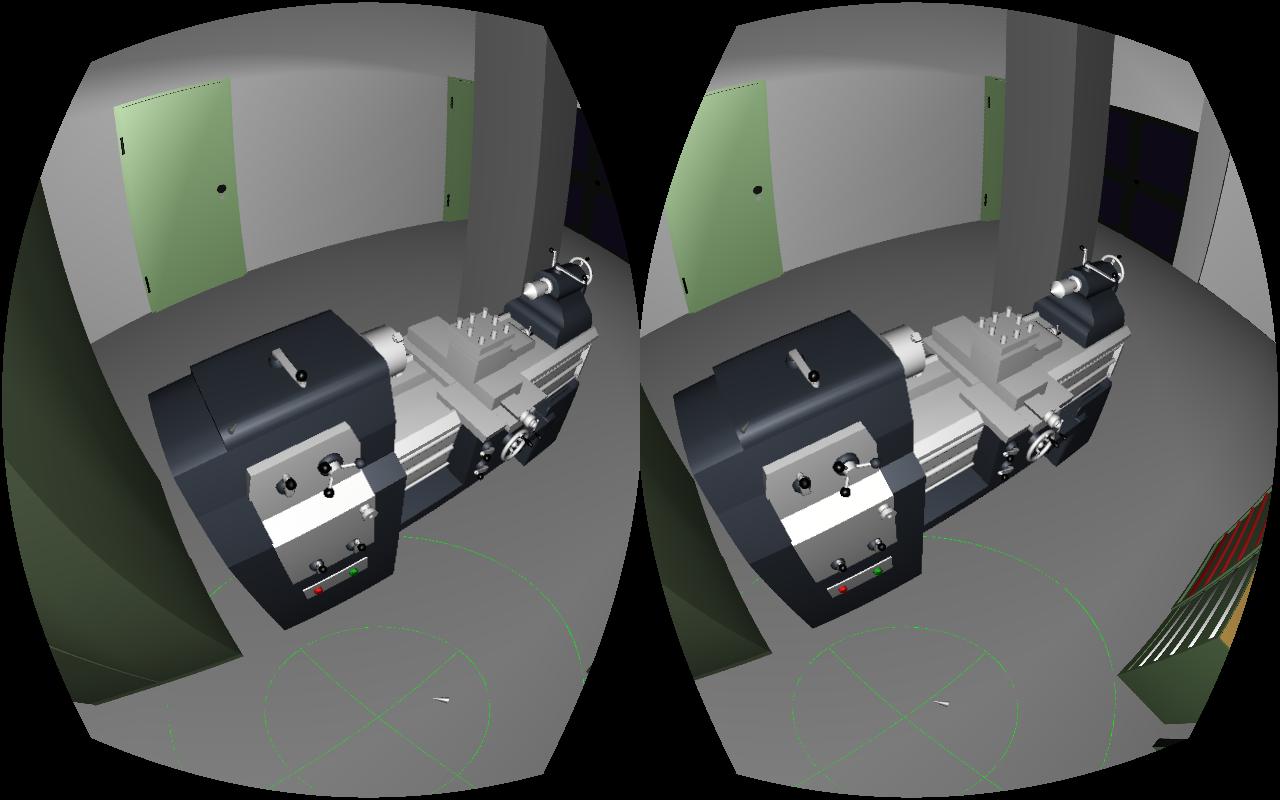

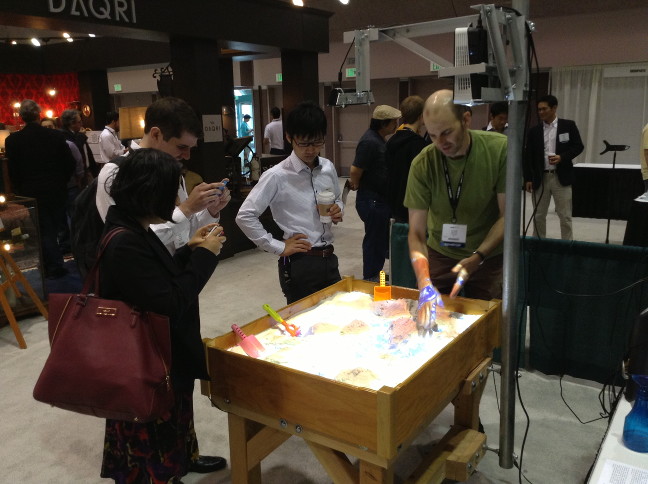

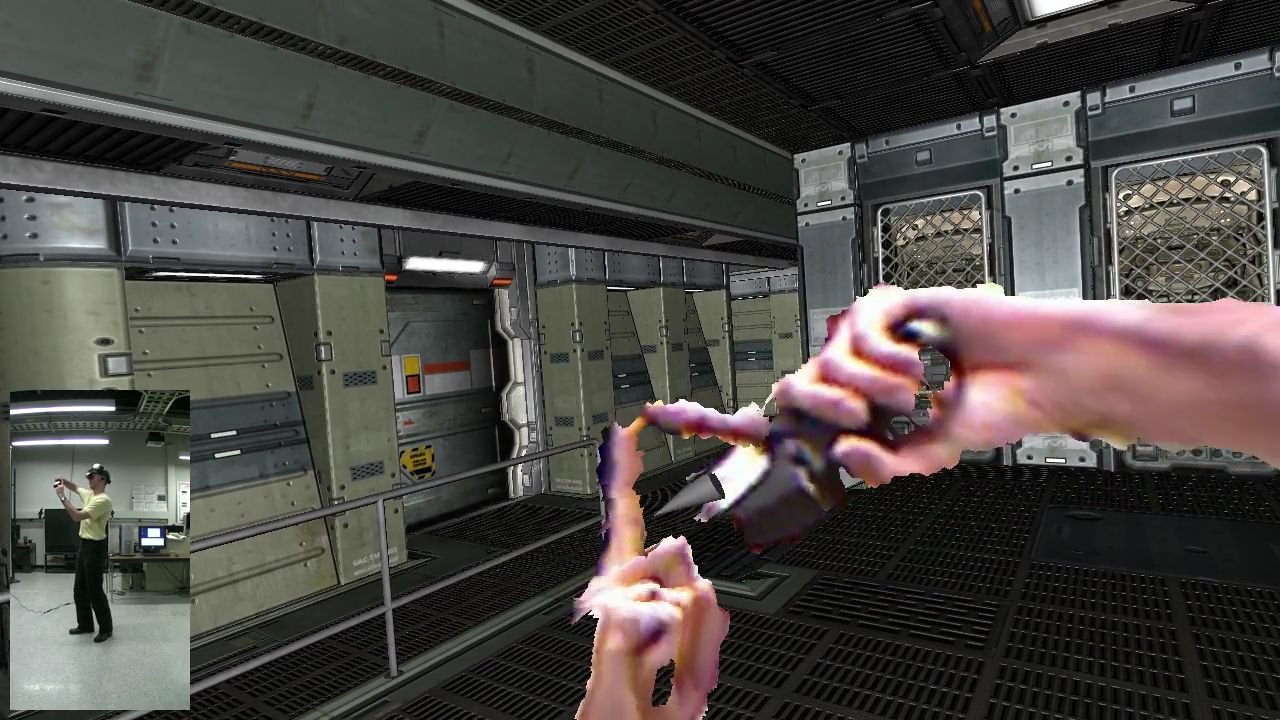

However, I do want to address Mr. Ebert’s opinions on stereoscopic movies (I’m not going to say 3D movies!), because that topic is close to my heart (and to this blog… hey, we’re on topic again!). In a nutshell, he did not like them. At all. And the thing is, I don’t really think they work either. Where I strongly disagreed with him is on the reason why they don’t work. For Mr. Ebert, 3D itself was a fundamentally flawed idea. For me, what is deeply flawed is the current implementation of stereoscopy as seen in most movies (am I going to see “Jurassic Park 3D”? Hell no!). What I’m saying is, 3D can be great; it’s just not done right in most stereoscopic movies, and doing it properly might require changing the entire idea of what a movie is. I always felt that the end goal of 3D movies should not be to watch the proceedings on a stereoscopic screen from far away, but to be in the middle of the action, like watching a theater performance from the stage, amidst the actors.

I had always hoped that Mr. Ebert would at some point see how 3D is supposed to be, and then nudge movie makers towards that ideal. Alas, it was not to be.
