Through a complex chain of circumstances, we got ourselves invited to demonstrate the Augmented Reality Sandbox at the White House Water Summit on March 22, coinciding with the United Nations’ World Water Day 2016, as part of the National Science Foundation’s presence (NSF funded initial development of the AR Sandbox through an Informal Science Education grant).

Author Archives: okreylos

Optical Properties of Current VR HMDs

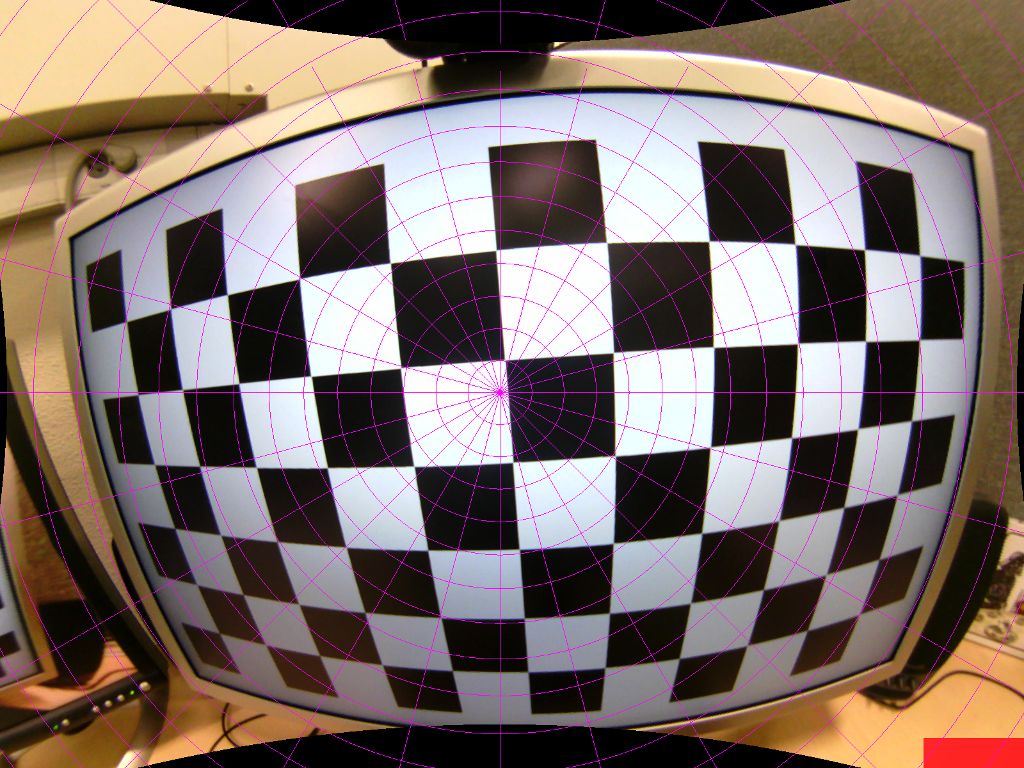

With the first commercial version of the Oculus Rift (Rift CV1) now trickling out of warehouses, and Rift DK2, HTC Vive DK1, and Vive Pre already being in developers’ hands, it’s time for a more detailed comparison between these head-mounted displays (HMDs). In this article, I will look at these HMDs’ lenses and optics in the most objective way I can, using a calibrated fish-eye camera (see Figures 1, 2, and 3).

Figure 1: Picture from a fisheye camera, showing a checkerboard calibration target displayed on a 30″ LCD monitor.

Figure 2: Same picture as Figure 1, after rectification. The purple lines were drawn into the picture by hand to show the picture’s linearity after rectification.

Figure 3: Rectified picture from Figure 2, re-projected into stereographic projection to simplify measuring angles. Concentric purple circles indicate 5-degree increments away from the projection center point.
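Measuring angles in a picture like Figure 3 relies on the standard stereographic mapping: a direction at angle θ from the projection center lands at radius r = 2f·tan(θ/2), where f is a scale factor of the re-projected image. The post doesn't give the parameters used for Figure 3, so the scale `F_PX` below is a hypothetical value. This is a minimal sketch of how the 5-degree circles could be placed, and how a measured radius converts back into an angle.

```python
import math

def stereographic_radius(theta_deg: float, f_px: float) -> float:
    """Radius (pixels) at which a direction theta_deg away from the center lands."""
    return 2.0 * f_px * math.tan(math.radians(theta_deg) / 2.0)

def angle_from_radius(r_px: float, f_px: float) -> float:
    """Inverse mapping: angle (degrees) from the center for a point r_px pixels out."""
    return math.degrees(2.0 * math.atan(r_px / (2.0 * f_px)))

F_PX = 500.0  # hypothetical scale factor of the re-projected image

# Radii of concentric 5-degree circles out to 60 degrees.
for deg in range(5, 65, 5):
    print(f"{deg:2d} deg -> r = {stereographic_radius(deg, F_PX):7.1f} px")

# A lens edge measured 300 px from the center lies at this angle:
print(f"300 px -> {angle_from_radius(300.0, F_PX):.1f} deg")
```

Because r grows monotonically with θ, each angle corresponds to exactly one circle and the conversion works in both directions. Stereographic projection is also conformal, so small shapes keep their angles (though not their sizes) across the image.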

Oculus Rift DK2’s tracking update rate

I’ve been involved in some arguments about the inner workings of the Oculus Rift’s and HTC/Valve Vive’s tracking systems recently, and while I don’t want to get into any of that right now, I just did a little experiment.

The tracking update rate of the Oculus Rift DK2, meaning the rate at which Oculus’ tracking driver sends different position/orientation estimates to VR applications, is 1000 Hz. However, the updates are not evenly spaced 1ms apart. They arrive in pairs, 2ms apart: 500 times per second, the driver updates the position/orientation, and then updates it again a few microseconds later.

This is not surprising at all, given my earlier observation that the DK2 samples its internal IMU at a rate of 1000 Hz, and sends data packets containing 2 IMU samples each to the host at a rate of 500 Hz. The tracking driver is then kind enough to process these samples individually, and pass updated tracking data to applications after it’s done processing each one. That second part is maybe a bit superfluous, but I’ll take it.
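The paired update pattern follows directly from the packetization. The sketch below is a toy model, not Oculus' actual driver: packets arrive every 2 ms, each carries two IMU samples, and the driver publishes an update right after processing each sample. The per-sample processing time `processing_s` is a hypothetical constant, chosen to be on the order of the microsecond gaps visible in the dump below.

```python
def update_times(num_packets: int, packet_period_s: float = 0.002,
                 samples_per_packet: int = 2,
                 processing_s: float = 6e-6) -> list[float]:
    """Times (s) at which a hypothetical driver publishes tracking updates."""
    times = []
    for p in range(num_packets):
        arrival = p * packet_period_s
        for s in range(samples_per_packet):
            # Samples in a packet are processed one after another, and an
            # update is published as soon as each one is done.
            times.append(arrival + (s + 1) * processing_s)
    return times

NUM_PACKETS = 500  # one second's worth of packets at 500 Hz
times = update_times(NUM_PACKETS)
intervals = [b - a for a, b in zip(times, times[1:])]
updates_per_second = len(times) / (NUM_PACKETS * 0.002)

print(f"average: {updates_per_second:.0f} updates per second")
print("first intervals:", ", ".join(f"{dt * 1e3:.3f} ms" for dt in intervals[:4]))
```

On average the model delivers 1000 updates per second, but the intervals alternate between a few microseconds and just under 2 ms, which matches the shape of the measured data.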

Here is a (very short excerpt of a) dump from the test application I wrote:

    0.00199484: -0.0697729, -0.109664, -0.458555
    6.645e-06 : -0.0698003, -0.110708, -0.458532
    0.00199313: -0.069828 , -0.111758, -0.45851
    6.012e-06 : -0.0698561, -0.112813, -0.458488
    0.00200075: -0.0698847, -0.113875, -0.458466
    6.649e-06 : -0.0699138, -0.114943, -0.458445
    0.0019885 : -0.0699434, -0.116022, -0.458427
    5.915e-06 : -0.0699734, -0.117106, -0.45841
    0.0020142 : -0.070004 , -0.118196, -0.458393
    5.791e-06 : -0.0700351, -0.119291, -0.458377
    0.00199589: -0.0700668, -0.120392, -0.458361
    6.719e-06 : -0.070099 , -0.121499, -0.458345
    0.00197487: -0.0701317, -0.12261 , -0.45833
    6.13e-06  : -0.0701651, -0.123727, -0.458314
    0.00301248: -0.0701991, -0.124849, -0.458299
    5.956e-06 : -0.0702338, -0.125975, -0.458284
    0.00099399: -0.0702693, -0.127107, -0.458269
    5.971e-06 : -0.0703054, -0.128243, -0.458253
    0.0019938 : -0.0703423, -0.129384, -0.458238
    5.938e-06 : -0.0703799, -0.130529, -0.458223
    0.00200243: -0.0704184, -0.131679, -0.458207
    7.434e-06 : -0.0704576, -0.132833, -0.458191
    0.0019831 : -0.0704966, -0.133994, -0.458179
    5.957e-06 : -0.0705364, -0.135159, -0.458166
    0.00199577: -0.0705771, -0.136328, -0.458154
    5.974e-06 : -0.0706185, -0.137501, -0.458141

The first column is the time interval between each row and the previous row, in seconds. The second to fourth columns are the reported (x, y, z) position of the headset.

I hope this puts to rest the myth that the DK2 only updates its tracking data when it receives a new frame from the tracking camera, which happens 60 times per second, and confirms that the DK2’s tracking is based on dead reckoning with drift correction. Now, while it is possible that the commercial version of the Rift does things differently, I don’t see a reason why it should.
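To make "dead reckoning with drift correction" concrete, here is a one-dimensional toy, not Oculus' actual sensor fusion. Assumptions: the headset is at rest, the accelerometer has a constant bias (`accel_bias`, hypothetical), position is integrated at the 1000 Hz IMU rate, and optionally a noise-free camera fix arrives at 60 Hz and is blended in with alpha-beta-style gains (`alpha` and `beta`, also hypothetical values).

```python
def simulate(duration_s: float, correct: bool, imu_hz: float = 1000.0,
             camera_hz: float = 60.0, accel_bias: float = 0.05,
             alpha: float = 0.5, beta: float = 0.1) -> tuple[float, float]:
    """Return (final, worst) absolute position error in meters for a headset at rest."""
    dt = 1.0 / imu_hz
    camera_period = 1.0 / camera_hz
    x = v = 0.0  # estimated position and velocity; the true values are 0
    next_camera = camera_period
    worst = 0.0
    for i in range(1, int(duration_s * imu_hz) + 1):
        t = i * dt
        a = accel_bias  # true acceleration is 0, so the IMU reports only its bias
        v += a * dt     # dead reckoning: integrate acceleration...
        x += v * dt     # ...and then velocity, at the IMU rate
        if correct and t >= next_camera:
            residual = 0.0 - x                     # camera sees the true position
            x += alpha * residual                  # pull position toward the fix
            v += beta / camera_period * residual   # and bleed off velocity drift
            next_camera += camera_period
        worst = max(worst, abs(x))
    return abs(x), worst

drift_only, _ = simulate(2.0, correct=False)
_, corrected_worst = simulate(2.0, correct=True)
print(f"IMU only, error after 2 s:     {drift_only * 1000:.1f} mm")
print(f"with 60 Hz fixes, worst error: {corrected_worst * 1000:.3f} mm")
```

Without the camera, the bias-induced error grows quadratically; with it, the error stays bounded while the pose is still updated at the full IMU rate between camera frames. A real tracker also has to fuse orientation and cope with measurement noise, which this toy ignores.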

PS: If you look closely, you’ll notice an outlier in rows 15 and 17 of the dump: the first interval is 3ms, and the second interval is only 1ms. One sample missed the 1000 Hz sample clock, and was delivered on the next update.
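The dump can also be checked mechanically. This sketch copies its first column into a list, counts the back-to-back updates, computes the average update rate, and flags packet gaps that deviate from the nominal 2 ms by more than 0.5 ms. Both thresholds are arbitrary choices for this sketch.

```python
# First column of the dump: seconds since the previous update.
intervals = [
    0.00199484, 6.645e-06, 0.00199313, 6.012e-06, 0.00200075, 6.649e-06,
    0.0019885, 5.915e-06, 0.0020142, 5.791e-06, 0.00199589, 6.719e-06,
    0.00197487, 6.13e-06, 0.00301248, 5.956e-06, 0.00099399, 5.971e-06,
    0.0019938, 5.938e-06, 0.00200243, 7.434e-06, 0.0019831, 5.957e-06,
    0.00199577, 5.974e-06,
]
BACK_TO_BACK_S = 1e-4   # shorter gaps: second sample of the same packet
NOMINAL_GAP_S = 0.002   # packets arrive at 500 Hz
TOLERANCE_S = 0.0005

back_to_back = sum(1 for dt in intervals if dt < BACK_TO_BACK_S)
mean_rate_hz = len(intervals) / sum(intervals)
outliers = [(row, dt) for row, dt in enumerate(intervals, start=1)
            if dt >= BACK_TO_BACK_S and abs(dt - NOMINAL_GAP_S) > TOLERANCE_S]

print(f"{back_to_back} of {len(intervals)} updates arrive back-to-back")
print(f"average update rate: {mean_rate_hz:.0f} Hz")
print("outlier rows:", outliers)
```

The two flagged gaps add up to almost exactly 4 ms, so the late delivery did not shift the overall schedule: the next packet arrived on time.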

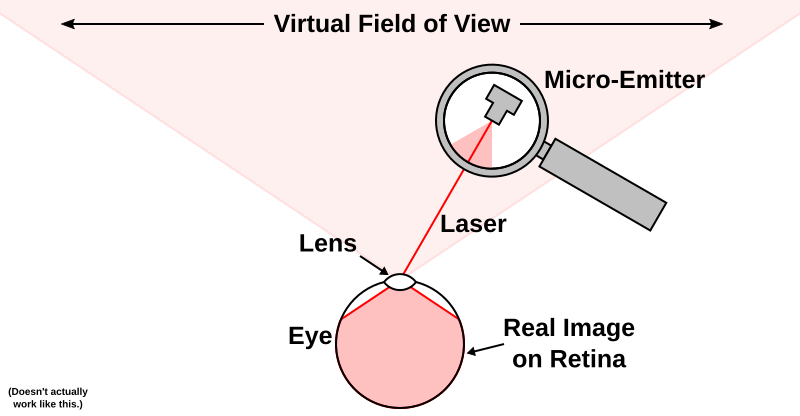

Lasers Are Not Magic

“Can I make a full-field-of-view AR or VR display by directly shining lasers into my eyes?”

No.

Well, technically, you can, but not in the way you probably imagine if you asked that question. What you can’t do is mount some tiny laser emitter somewhere out of view, have it shine a laser directly into your pupil, and expect to get a virtual image covering your entire field of view (see Figure 1). Light, and your eyes, don’t work that way.
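A rough geometric way to see part of the problem: where something appears in your visual field is determined by the direction from which its light enters the eye, and light from a fixed emitter can only reach the retina through the pupil. Whatever the beam does, it can only arrive from within the narrow cone the pupil subtends as seen from the emitter. The sketch below is a simplified 2D cross-section with assumptions of my own: a flat 4 mm pupil centered on the visual axis, refraction by the cornea ignored, and hypothetical emitter positions.

```python
import math

def reachable_angles_deg(emitter_side_mm: float, emitter_front_mm: float,
                         pupil_mm: float = 4.0) -> tuple[float, float]:
    """Range of arrival directions (degrees from the visual axis) for beams
    from a fixed emitter that pass through the pupil (2D, cornea ignored)."""
    half = pupil_mm / 2.0
    # Beams aimed at the two edges of the pupil bound the reachable directions.
    a = math.degrees(math.atan2(emitter_side_mm - half, emitter_front_mm))
    b = math.degrees(math.atan2(emitter_side_mm + half, emitter_front_mm))
    return min(a, b), max(a, b)

# Hypothetical emitter tucked out of view: 30 mm to the side, 20 mm in front.
lo, hi = reachable_angles_deg(30.0, 20.0)
print(f"off to the side: {lo:.1f} to {hi:.1f} deg ({hi - lo:.1f} deg of view)")

# Even an emitter directly in front of the eye reaches only a narrow cone.
lo0, hi0 = reachable_angles_deg(0.0, 20.0)
print(f"straight ahead:  {lo0:.1f} to {hi0:.1f} deg ({hi0 - lo0:.1f} deg of view)")
```

The tucked-away emitter can only light up a few degrees of the visual field, far off to the side. To cover the whole field of view, light must arrive from every direction in it, so the last element the light leaves before entering the eye (a screen, mirror, or combiner) has to sit in front of the eye and span that field of view.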

Head-mounted Displays and Lenses

“It can’t be comfortable or healthy to stare at a screen a few inches in front of your eyes.”

The popularity of Google Cardboard, and the upcoming commercial releases of the Oculus Rift, HTC Vive, and other modern head-mounted displays (HMDs) have raised interest in virtual reality and VR devices in parts of the population who have never been exposed to, or had reason to care about, VR before. Together with the fact that VR, as a medium, is fundamentally different from other media with which it often gets lumped in, such as 3D cinema or 3D TV, this leads to a number of common misunderstandings and frequently-asked questions. Therefore, I am planning to write a series of articles addressing these questions one at a time.

First up: How is it possible to see anything on a screen that is only a few inches in front of one’s face?

Short answer: In HMDs, there are lenses between the screens (or screen halves) and the viewer’s eyes to solve exactly this problem. These lenses project the screens out to a distance where they can be viewed comfortably (for example, in the Oculus Rift CV1, the screens are rumored to be projected to a distance of two meters). This also means that, if you need glasses or contact lenses to clearly see objects several meters away, you will need to wear your glasses or lenses in VR.
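"Projecting the screen out" is ordinary thin-lens optics. If the screen sits just inside the focal length f of a converging lens, the lens forms an enlarged virtual image much farther away. The focal length and screen distance below are made-up round numbers, not the specifications of any actual HMD.

```python
import math

def virtual_image_distance_mm(focal_mm: float, screen_mm: float) -> float:
    """Solve the thin-lens equation 1/s_o + 1/s_i = 1/f for s_i.

    A negative result is a virtual image on the same side as the screen,
    which is what an HMD lens produces when the screen sits inside f."""
    inv = 1.0 / focal_mm - 1.0 / screen_mm
    return math.inf if inv == 0.0 else 1.0 / inv

F_MM = 40.0       # hypothetical lens focal length
SCREEN_MM = 39.2  # hypothetical lens-to-screen distance, just inside F_MM

s_i = virtual_image_distance_mm(F_MM, SCREEN_MM)
magnification = -s_i / SCREEN_MM
print(f"virtual image {abs(s_i) / 1000:.2f} m away, magnified {magnification:.0f}x")
# Focusing on it takes roughly 1/distance diopters of accommodation
# (ignoring the small lens-to-eye distance), as for a real object that far away.
print(f"accommodation demand: {1000 / abs(s_i):.2f} D")
```

The result is very sensitive to the screen position: with these numbers, moving the screen just 0.3 mm farther out (to 39.5 mm) pushes the virtual image from about 2 m to about 3.2 m.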

Now for the long answer.

For Science!

I’ve been busy finalizing the upcoming 4.0 release of the Vrui VR toolkit (it looks like I will have full support for Oculus Rift DK2 just before it is obsoleted by the commercial version, haha), and needed a short break.

So I figured I’d do something I’ve never done before in VR, namely, watch a full-length theatrical movie. I’m still getting DVDs from Netflix like it’s 1999, and I had “Avengers: Age of Ultron” at hand. The only problem was that I didn’t have a VR-enabled movie player.

Well, how hard can that be? Not hard at all, as it turns out. I installed the development packages for the xine multimedia framework, browsed through their hacker’s guide, figured out where to intercept audio buffers and decoded video frames, and three hours later I had a working prototype. A few hours more, and I had a user interface, full DVD menu navigation, a scrub bar, and subtitles. In 737 lines of code, a big chunk of which is debugging output to trace the control and data flow of the xine library. So yeah, libxine is awesome.

Then it was time to pull the easy chair into the office, start VruiXine, put on the Rift, map DVD navigation controls to the handy SteelSeries Stratus XL Bluetooth gamepad they were giving away at Oculus Connect 2, and relax (see Figure 1).

Figure 1: The title menu of the “Avengers: Age of Ultron” DVD in a no-frills VR movie player (VruiXine). Fancy virtual environments are left as an exercise for the reader.

Information Superhighway Robbery

So, apparently this is a business model: you trawl YouTube for videos with a decent number of views (not too many, mind you), uploaded by someone who is not a bona fide YouTube star or well-known personality, or part of some content or ad exchange network, and file copyright claims on those videos. But you’re nice about it. You don’t threaten to take down those videos right away; you just give a friendly heads-up that some of the content in those videos is owned by you, and that you are therefore entitled to monetize those videos on behalf of (and instead of) the uploader. No big deal, it’s only fair, right?

Well, granted, it can be. YouTube is obviously a cesspool of blatant and gleeful copyright infringement. I especially like those uploaders who are rather clueless about copyright, and think it’s perfectly fine to rip off and upload someone else’s work, be it a music video or TV show episode or whole movie, as long as they put a disclaimer like “uploaded under fair use” or “no copyright infringement intended” into the video description. I very especially like the second “excuse,” because the cognitive dissonance is so delicious. “I just threw a well-aimed brick through your window, but I totally didn’t intend to do that!” Sure you didn’t. I have a few semi-popular videos on YouTube myself, and it grinds my gears if someone else re-uploads them, in lousy quality and with ads plastered all over. There was one case early on where a re-uploaded video got significantly more views and discussion than my original, and I had to go over there and answer questions. Wasn’t cool.

Anyway, back to topic. While there needs to be some mechanism for copyright holders to assert their rights, the current mechanism seems to be skewed towards appeasing “big content providers,” and seems open to abuse by, well, scum. For the former, exhibit A: “Sony Filed a Copyright Claim Against the Stock Video I Licensed to Them.” There’s really nothing I can add to that except that this is an instance where someone’s livelihood was seriously messed with.

As for (likely) abuse, last night I noticed a Content ID copyright claim on one of my aforementioned semi-popular videos.

HoloLens and Holograms

Today Microsoft announced a release window (first quarter 2016) and price (USD 3,000) for HoloLens developer kits, so suddenly HoloLens, and discussion thereof, is all over the Internet again.

I’ve already talked about HoloLens ad nauseam, but I found myself several times today trying to explain where (I think) the “Holo” in HoloLens comes from, and what HoloLens has to do with actual, real, honest-to-goodness holograms.

Blast from the Past

I just stumbled upon an interview I did almost four years ago for Greg Borenstein’s book “Making Things See: 3D vision with Kinect, Processing, Arduino, and MakerBot.”

The relevant part of the book, starting on page 29, is available online via Google Books. It’s cringeworthy, because I’m talking about basically the same things I’m talking about these days, but back then I had a much harder time of it, as this was before the current VR renaissance. I probably failed entirely to get my main points across to an audience that had never experienced VR, and had never considered it anything but an old and busted thing from the ’90s.

Zero-latency Rendering

I finally managed to get the Oculus Rift DK2 fully supported in my Vrui VR toolkit, and while there are still some serious issues, such as getting the lens distortion formulas and internal HMD geometry exactly right, I’ve already noticed something really neat.

I have a bunch of graphically simple applications that run at ridiculous frame rates (some get several thousand fps on an Nvidia GeForce GTX 770), and with some new rendering configuration options in Vrui 4.0, I can disable vsync and render directly into the display window’s front buffer. In other words, I can let these applications “race the beam.”

Disabling vsync and rendering into the front buffer has two main results. First, the CPU and graphics card get really hot (so this is not something you want to do naively). Second, let’s assume that some application can render 1,000 fps. This means that every millisecond, a new complete video frame is rendered into video scan-out memory, where it gets picked up by the video controller and sent across the video link immediately. As a result, almost every line of the Rift’s display gets a “fresh” image, based on the most up-to-date tracking data, and flashes this image to the user’s retina without further delay. Put differently, total motion-to-photon latency for the entire screen is now down to around 1ms. And the result of that is by far the most solid VR I’ve ever seen.
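A back-of-the-envelope model shows why front-buffer rendering helps. The assumptions are mine, not measurements: the display refreshes at 75 Hz (the DK2's refresh rate) and scans its lines out evenly over the whole refresh period. With vsync, each frame is rendered during the previous refresh using tracking data sampled at the start of that refresh. When racing the beam, a new frame lands in scan-out memory every render period and uses tracking data sampled when its rendering started. Display response time and the video link are ignored, and the line count of 1080 is a hypothetical round number.

```python
import math

def line_ages_ms(refresh_hz: float, render_fps: float, lines: int,
                 race_the_beam: bool) -> list[float]:
    """Age (ms) of the tracking data behind each scan line when it is sent."""
    refresh_ms = 1000.0 / refresh_hz
    render_ms = 1000.0 / render_fps
    ages = []
    for y in range(lines):
        t = y / lines * refresh_ms  # scan-out time of line y within the refresh
        if race_the_beam:
            # Newest frame completed by time t; its tracking sample was taken
            # when its rendering began, one render period earlier.
            completed = math.floor(t / render_ms) * render_ms
            sampled = completed - render_ms
        else:
            # Whole frame rendered during the previous refresh period.
            sampled = -refresh_ms
        ages.append(t - sampled)
    return ages

for mode in (False, True):
    ages = line_ages_ms(75.0, 1000.0, 1080, race_the_beam=mode)
    label = "front buffer, 1000 fps" if mode else "vsync, 75 Hz"
    print(f"{label:>22}: {min(ages):5.1f} to {max(ages):5.1f} ms")
```

In this crude model, the tracking data behind any line is one to two render periods old (on the order of a millisecond at 1,000 fps), versus roughly 13 to 27 ms with a single vsynced frame per 75 Hz refresh. It also shows the cost: each refresh is stitched together from about 13 different frames.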

Not entirely useful, but pretty cool nonetheless.