I’ve recently written a loose series of articles trying to explain certain technical aspects of virtual reality, such as what the lenses in VR headsets do, or why there is some blurriness, but I haven’t — or at least haven’t in a few years — tackled the big question:

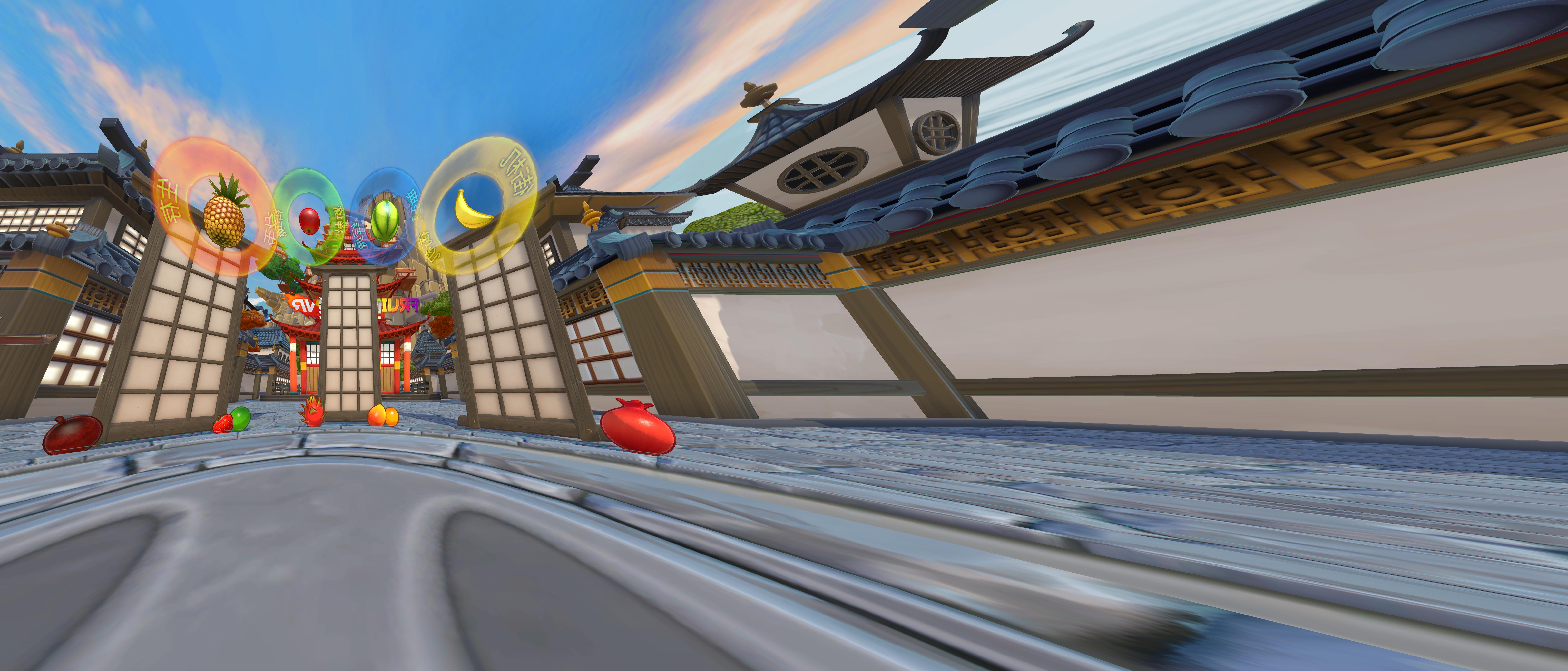

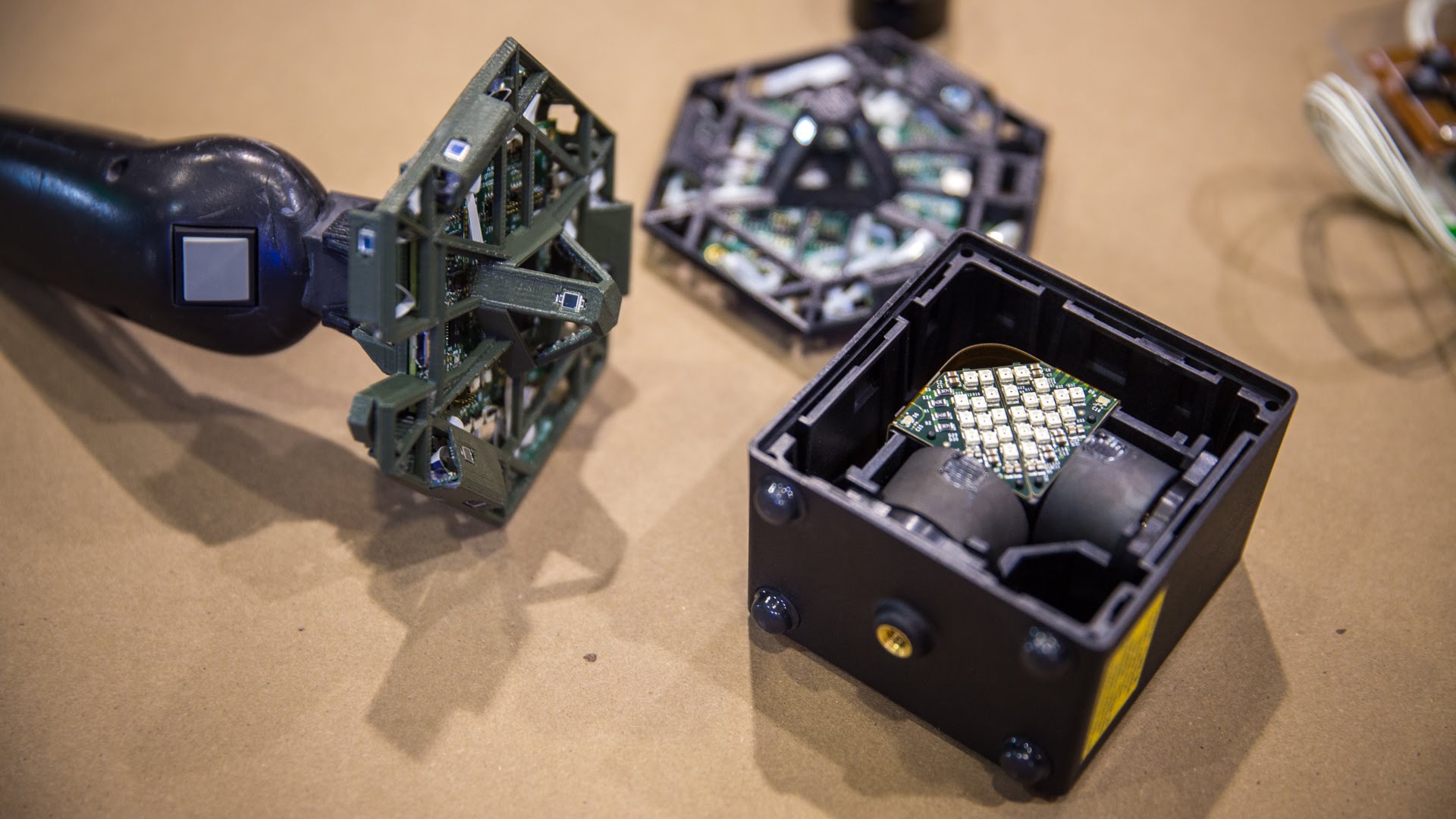

How do all the technical components of VR headsets, e.g., screens, lenses, tracking, etc., actually come together to create realistic-looking virtual environments? Specifically, why do virtual environment in VR look more “real” compared to when viewed via other media, for example panoramic video?

The reason I’m bringing this up again is that the question keeps getting asked, and that it’s really kinda hard to answer. Most attempts to answer it fall back on technical aspects, such as stereoscopy, head tracking, etc., but I find that this approach somewhat misses the point by focusing on individual components, or at least gets mired in technical details that don’t make much sense to those who have to ask the question in the first place.

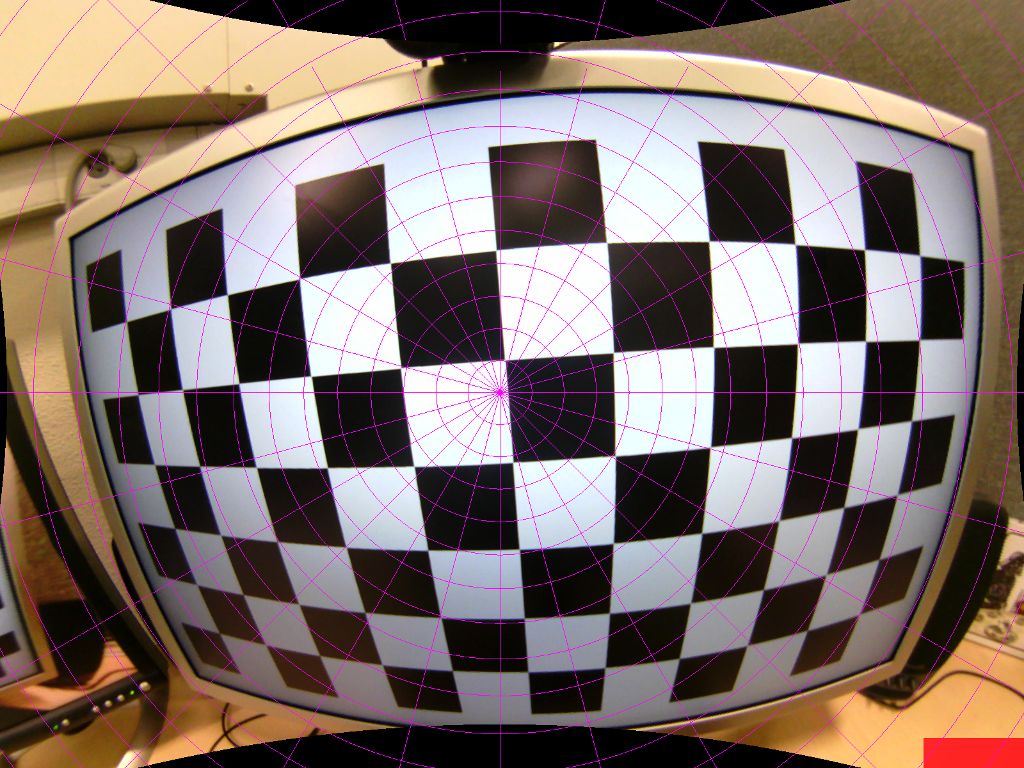

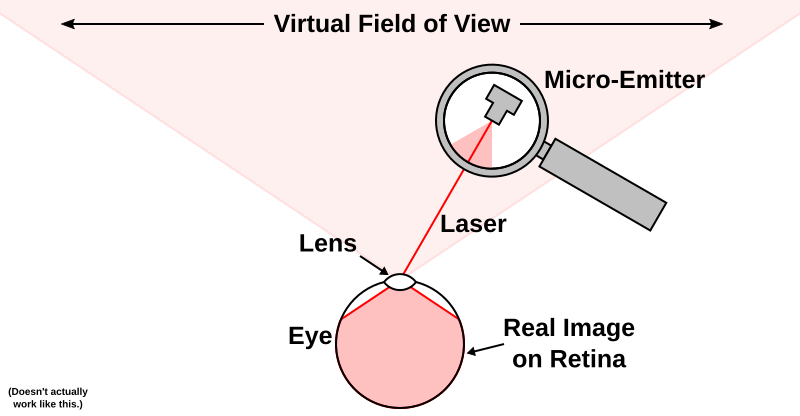

I prefer to approach the question from the opposite end: not through what VR hardware produces, but instead through how the viewer perceives 3D objects and/or environments, and how either the real world on the one hand, or virtual reality displays on the other, create the appropriate visual input to support that perception.

The downside with that approach is that it doesn’t lend itself to short answers. In fact, last summer, I gave a 25 minute talk about this exact topic at the 2016 VRLA Summer Expo. It may not be news, but I haven’t linked this video from here before, and it’s probably still timely: