There was an article on Medium yesterday: “My First Virtual Reality Groping.” In it, a first-time VR/HTC Vive user describes how she was virtually groped by another player inside an online multi-player VR game, within the first three minutes of her first session, and how it ruined her experience and deeply disturbed her.

I do not know what to call player “BigBro442’s” behavior, but I do know that it is highly inappropriate, and toxic for VR as a whole. This, people, is why we can’t have nice things. This is far from the first instance of virtual harassment or VR griefing that I’ve heard of, but it’s the one that got me thinking because of this comment on the article:

> This is reality. The best we can do is educate, starting with articles like this.

No. That is not true. We can do better than that. Unlike reality, where someone might be assaulted inside their own home, or in some dark back alley, with no witnesses around or evidence left behind, this is virtual reality, which only exists as a sequence of ones and zeros on some Internet server. That server has absolute knowledge of anything that goes on anywhere inside the virtual world it maintains, like an omniscient Big Brother. If virtual harassment happens in virtual reality, maybe virtual reality needs virtual courts.

Here is a not-so-modest proposal, off the top of my head, using SteamVR/Steam as example platforms:

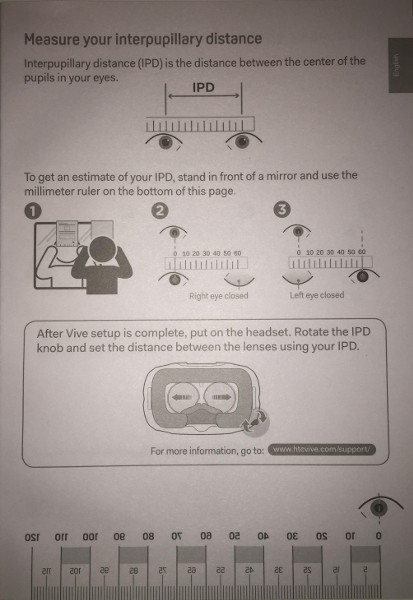

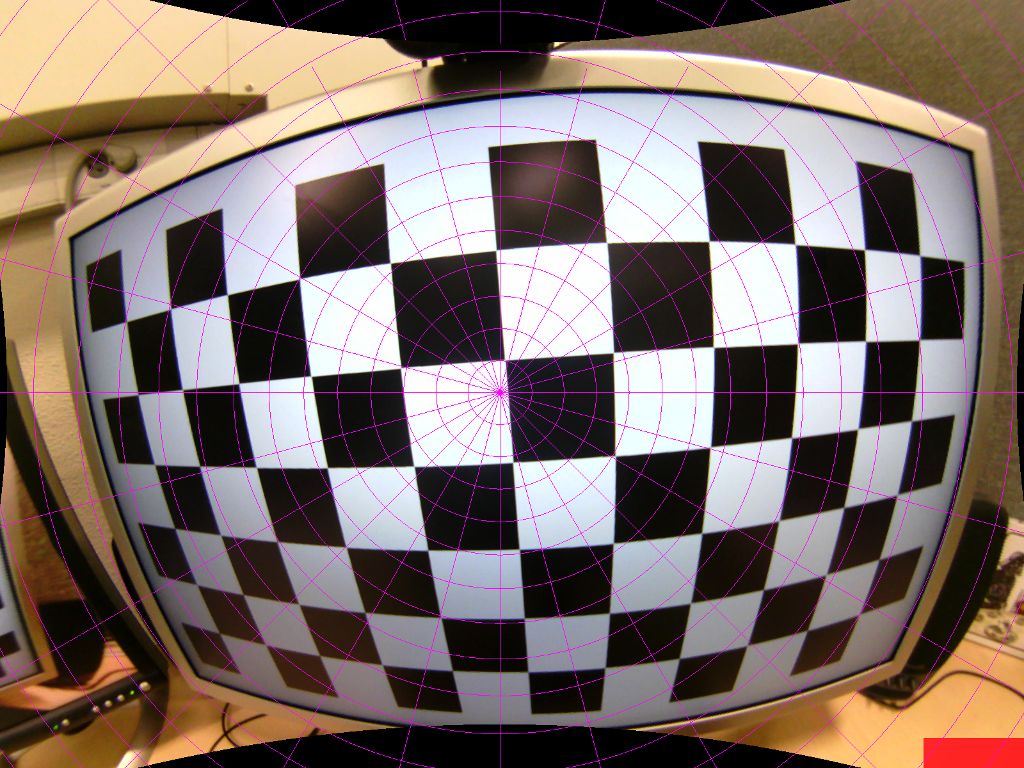

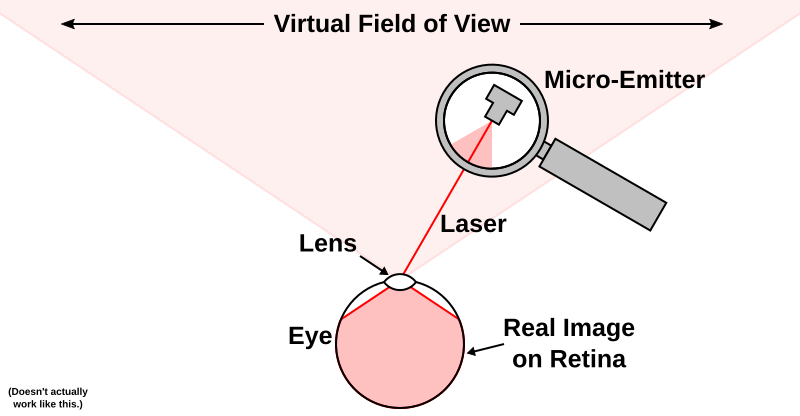

- Any server maintaining a virtual world potentially used by more than one person at the same time keeps a ring buffer of each connected user’s avatar state for the last, say, five minutes. That’s not overly demanding: sampling a head tracker and two hand trackers at, say, 30 Hz, over five minutes, results in approximately 750 kB of data in total per user.

- The client user interface of any shared virtual environment contains a button in some easily accessible standard place, say in SteamVR’s overlay, to file a harassment complaint.

- If a user (“Alice”) files a complaint, several things happen. Most importantly, the server immediately dumps the avatar state ring buffers of all connected (or recently connected) players to a file. Second, Alice is immediately charged a small fee, say $5, on the credit card associated with her Steam account. This is a micro-transaction, an existing Steam feature. The fee’s purpose is to discourage another form of harassment, namely filing frivolous complaints against innocent users.

- Files generated by complaints, with personally identifying information redacted, will be reviewed by a peer group of humans. This might be done by appointed moderators, or might even be crowd-sourced.

- If review determines that behavior contained in the 5-minute replay violates community standards, Alice will be refunded the fee she was charged, and offending user Bob’s Steam account will be temporarily suspended, say for one day on the first offense, starting either immediately or the next time Bob attempts to log in. And I mean Bob’s entire Steam account is suspended, not just his access to one particular server or shared VR application: Bob’s on time-out and can go read a book.

- If review determines that the complaint was without merit, nothing happens to accused user Bob, and Alice is not refunded her fee. If Alice disagrees, she can raise the stakes by re-filing the same complaint for another $5 fee, the total $10 then being refundable or not, etc.

- If review cannot reach agreement, or review does not happen within a reasonable time frame, Alice is refunded her fee.
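To make the technical side of the proposal a bit more concrete, here is a minimal Python sketch of the per-user ring buffer and the fee rules. It is only an illustration under stated assumptions: every name in it (`AvatarRingBuffer`, `buffer_size_bytes`, `settle`, the verdict strings) is hypothetical and not part of any Steam or SteamVR API, and the pose layout (a position plus an orientation quaternion, stored as seven 32-bit floats) is my assumption about what one tracker sample would contain.

```python
import struct
from collections import deque

SAMPLE_RATE_HZ = 30        # sampling rate suggested in the proposal
WINDOW_SECONDS = 5 * 60    # five-minute replay window
TRACKERS = 3               # head tracker plus two hand trackers
# Assumption: one pose = position (x, y, z) + orientation quaternion (x, y, z, w),
# packed as seven little-endian 32-bit floats (28 bytes).
POSE = struct.Struct("<7f")
FEE_CENTS = 500            # the $5 filing fee from the proposal


def buffer_size_bytes(rate_hz=SAMPLE_RATE_HZ, window_s=WINDOW_SECONDS):
    """Memory needed for one user's full replay window."""
    return TRACKERS * rate_hz * window_s * POSE.size


class AvatarRingBuffer:
    """Keeps only the most recent window of avatar poses for one user."""

    def __init__(self, rate_hz=SAMPLE_RATE_HZ, window_s=WINDOW_SECONDS):
        # A deque with maxlen silently drops the oldest frame when full.
        self.frames = deque(maxlen=rate_hz * window_s)

    def push(self, head, left_hand, right_hand):
        """Store one frame; each argument is a 7-tuple of floats."""
        frame = b"".join(POSE.pack(*pose) for pose in (head, left_hand, right_hand))
        self.frames.append(frame)

    def dump(self):
        """Return the buffered frames, oldest first, as bytes for a complaint file."""
        return b"".join(self.frames)


def settle(verdict, filings=1):
    """Apply the fee rules; returns (refund_cents, suspend_accused).

    The verdict strings are hypothetical labels for the three review outcomes.
    """
    paid = FEE_CENTS * filings
    if verdict == "violation":
        return paid, True       # fee refunded, offender suspended
    if verdict == "no_merit":
        return 0, False         # fee kept, nothing happens to the accused
    if verdict in ("undecided", "timeout"):
        return paid, False      # no agreement or no timely review: refund only
    raise ValueError(f"unknown verdict: {verdict!r}")
```

Under these assumptions, `buffer_size_bytes()` comes out at 3 × 30 × 300 × 28 = 756,000 bytes, which is in line with the rough 750 kB per user estimated above. A real server would also need to timestamp frames and keep buffers around briefly for recently disconnected players, which this sketch leaves out.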

Okay, so this is ridiculous, right? Not from a technical feasibility point of view, which I think I laid out above, but from an organizational and cultural point of view. One might say that it is a severe regulatory overreach, a violation of the freedom and the very fundamental principles of online gaming, and that the idea of community review is ludicrous on the face of it.

Well, I might have agreed — until recently, that is, when I stumbled across this. Holy Moly! What’s that? Multi-player game servers retaining state data of all players, which can be dumped to a permanent file as evidence for later peer review by a number of appointed or self-appointed judges, with crowd-sourced verdicts and suspensions or bans handed out to cheaters, and judges being rewarded or punished for good or bad judgment? And it works?

If cheating in Counter-Strike is a big enough deal to create a system like this, would it be so outrageous to apply the same basic idea to harassment in shared virtual reality, which, due to VR’s strong sense of immersion and presence, arguably has a larger negative impact on the harassed than losing a round of CS?

Discuss.
