Note: This is part 2 of a four-part series. [Part 1] [Part 3] [Part 4]

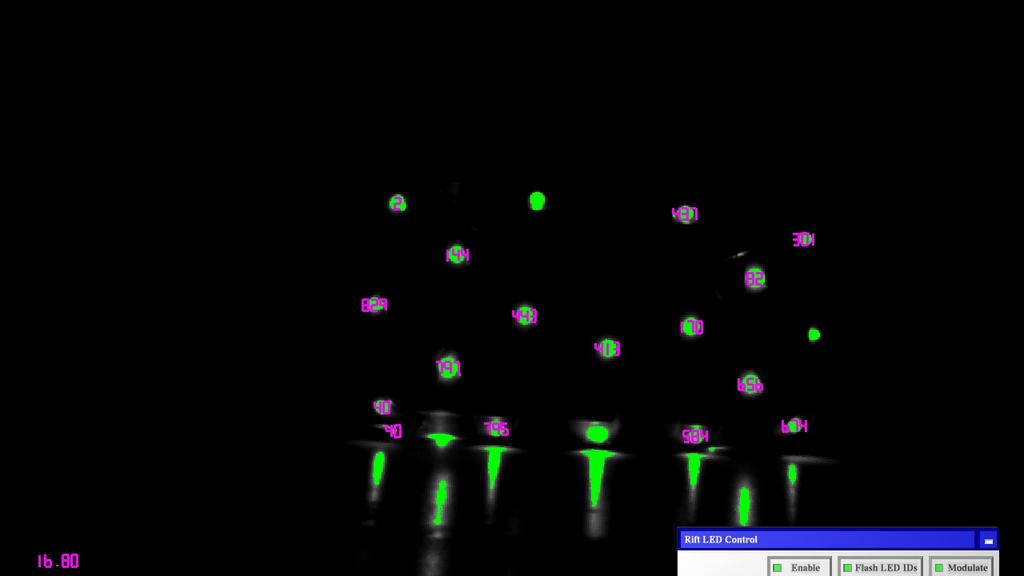

The final update/edit to my previous post was to report that I had managed to synchronize the DK2’s tracking LEDs to its camera’s video stream by following pH5’s ouvrt code, and that I was able to extract 5-bit IDs for each LED by observing changes in that LED’s brightness over time. Unfortunately I’ll have to start off right away by admitting that I made a bad mistake.

Understanding the DK2’s camera

Once I started looking more closely, I realized that the camera was only capturing 30 frames per second when locked to the DK2’s synchronization cable, instead of the expected 60. After downloading the data sheet for the camera’s imaging sensor, the Aptina MT9V034, and poring over the documentation, I realized that I had set a wrong vertical blanking interval. Instead of using a value of 5, as the official run-time and pH5’s code, I was using a value of 57, because that was the original value I found in the vertical blanking register before I started messing with the sensor. As it turns out, a camera — or at least this camera — captures video in the same way as a monitor displays it: padded with a horizontal and vertical blanking period. By leaving the vertical blanking period too large, I had extended the time it takes the camera to capture and send a frame across its host interface. Extended by how much? Well, the camera has a usable frame size of 752×480 pixels, a horizontal blanking interval of 94 pixels, and a (fixed) pixel clock of 26.66MHz. Using a vertical blanking interval of 5 lines, the total frame time is ((752+94)*(480+5)+4)/26.66MHz = 15.391ms (in case you’re wondering where the “+4” comes from, so am I. It’s part of the formula in the data sheet). Using 57 as vertical blanking interval, the total frame time becomes ((752+94)*(480+57)+4)/26.66MHz = 17.041ms. Notice something? 17.041ms is longer than the synchronization pulse interval of 16.666ms. Oops. The exposure trigger for an odd frame arrives at a time when the camera is still busy processing the preceding even frame, and is therefore ignored, resulting in the camera skipping every odd frame and capturing at 30Hz. Lesson learned.

Figure 1: First result from LED identification algorithm, showing wrong ID numbers due to the camera dropping video frames all over the place.