I am getting the feeling that Big Brother is watching me. When I released the initial version of the Vrui VR toolkit with native Oculus Rift support, it had magnetic yaw drift correction, which the official Oculus SDK didn’t have at that point. (Vrui doesn’t use the Oculus SDK at all to talk to the Rift; it has its own tracking driver that talks to the Rift’s inertial measurement unit directly via USB and does its own sensor fusion, and it also does its own projection setup and lens distortion correction.) A week or so later, Oculus released an updated SDK with magnetic drift correction.

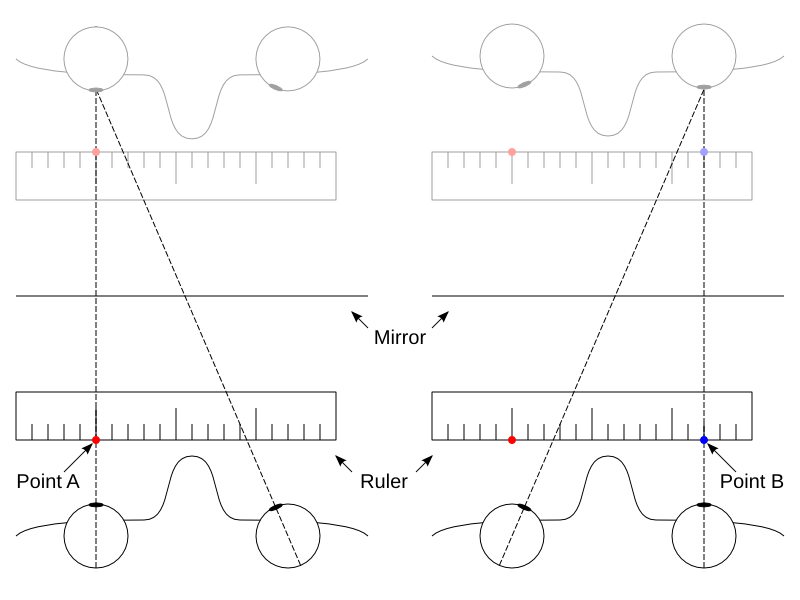

A little more than a month ago, I wrote a pair of articles investigating and explaining the internals of the Rift’s display, and how small deviations in calibration have a large effect on the perceived size of the virtual world and on the degree of “solidity” (for lack of a better word) of the virtual objects therein. In those posts, I pointed out that a single lens distortion correction formula doesn’t suffice, because lens distortion parameters depend on the position of the viewer’s eyes relative to the lenses, particularly the eye/lens distance, otherwise known as “eye relief.” And guess what: I just got an email via the Oculus developer mailing list announcing the (preview) release of SDK version 0.3.1, which lists eye relief-dependent lens correction as one of its major features.

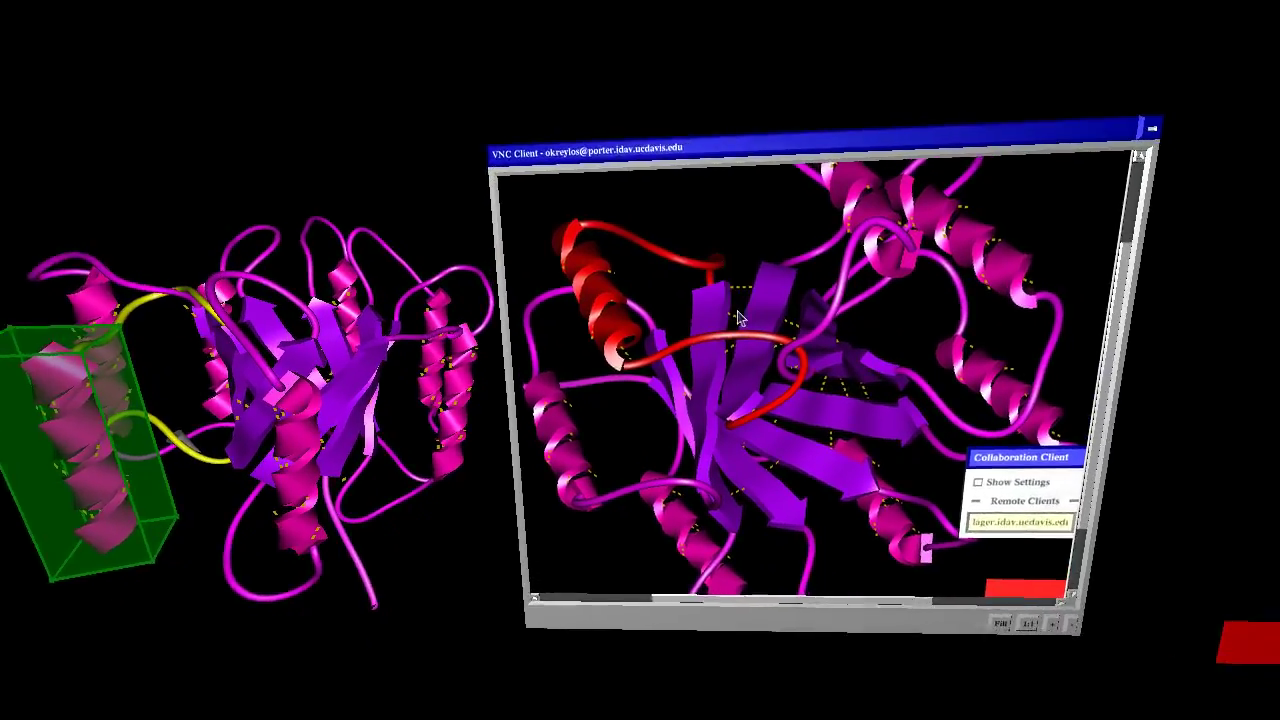

Maybe I should keep writing articles on the virtues of 3D pupil tracking, and the obvious benefits of adding an inertially/optically tracked 6-DOF input device to the consumer-level Rift’s basic package, and those things will happen as well. 🙂