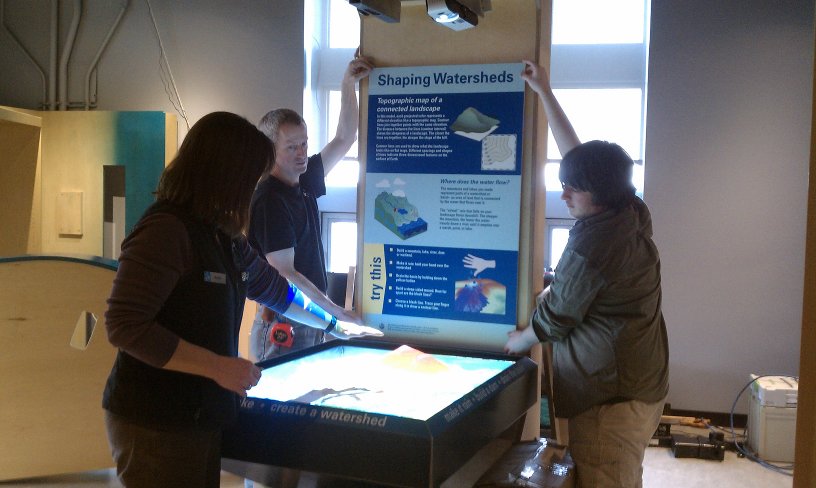

We have been collaborating with the UC Davis Tahoe Environmental Research Center (TERC) for a long time. Back in — I think — 2006, we helped them purchase a large-screen stereoscopic projection system for the Otellini 3-D Visualization Theater and installed a set of Vrui-based KeckCAVES visualization applications for guided virtual tours of Lake Tahoe and the entire Earth. We have since worked on joint projects, primarily related to informal science education. Currently, TERC is one of the collaborators in the 3D lake science informal science education grant that spawned the Augmented Reality Sandbox.

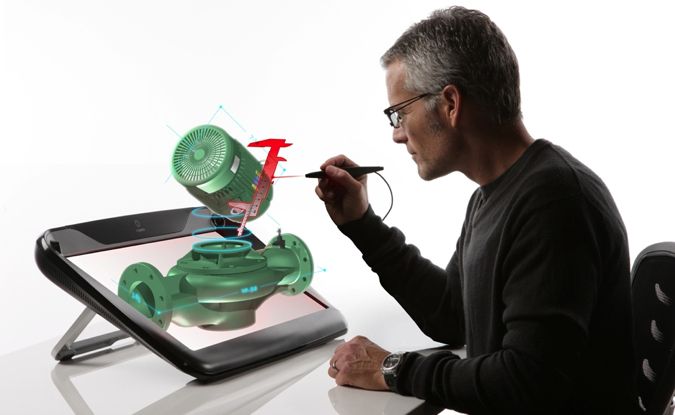

The original stereo projection system, driven by a 2006 Mac Pro, was getting long in the tooth, and in the process of upgrading to higher-resolution and brighter projectors, we finally convinced the powers-that-be to get a top-of-the line Linux PC instead of yet another Mac (for significant savings, one might add). While the Ubuntu OS and Vrui application set had already been pre-installed by KeckCAVES staff in the home office, I still had to go up to the lake to configure the operating system and Vrui to render to the new projectors, update all Vrui software, align the projectors, and train the local docents in using Linux and the new Vrui application versions.