About once a day I check out this blog’s access statistics, and specifically the search terms that brought viewers to it (that’s how I found out that I’m the authority on the Oculus Rift being garbage). It’s often surprising, and often leads to new (new to me, at least) discoveries. Following one such search term, today I learned that Apple was awarded a patent for interactive holographic display technology. Well, OK, strike that. Today I learned that, apparently, reading an article is not a necessary condition for reblogging it — Apple wasn’t awarded a patent, but a patent application that Apple filed 18 months ago was published recently, according to standard procedure.

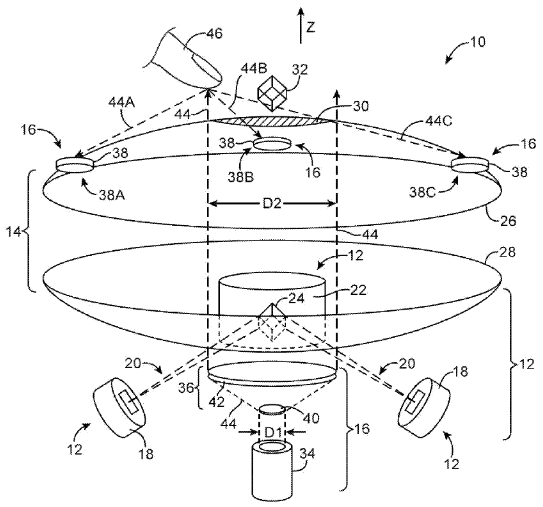

But that aside, what’s in the application? The main figure (see Figure 1) should already clue you in, if you read my pair of posts about the thankfully failed Holovision Kickstarter project. It’s a volumetric display of some unspecified sort (maybe a non-linear crystal? Or, if that fails, a rotating 2D display? Or “other 3D display technology?” Sure, why be specific! It’s only a patent! I suggest adding “holomatter” or “mass effect field” to the list, just to be sure), placed inside a double parabolic mirror to create a real image of the volumetric display floating in air above the display assembly. Or, in other words, Project Vermeer. Now, I’m not a patent lawyer, but how Apple continues to file patents on the patently trivial (rounded corners, anyone?), or on the exact thing that Microsoft showed in 2011, about a year before Apple’s application was filed, is beyond me.
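For anyone wondering why a double parabolic mirror produces a real image at all, here is a minimal 2D ray-tracing sketch. It assumes the classic "mirage toy" arrangement, where each mirror's focal point sits at the other mirror's vertex; the application does not spell out its geometry, so that arrangement, the function names `reflect` and `image_height`, and the focal length `f` are all my own assumptions for illustration. The source is treated as a single point at the bottom mirror's vertex.

```python
import math

def reflect(d, n):
    """Reflect direction d off a surface with (not necessarily unit) normal n."""
    norm = math.hypot(n[0], n[1])
    nx, ny = n[0] / norm, n[1] / norm
    dot = d[0] * nx + d[1] * ny
    return (d[0] - 2 * dot * nx, d[1] - 2 * dot * ny)

def image_height(theta_deg, f=1.0):
    """Trace one ray leaving the point source at (0, 0) at theta_deg from the
    vertical axis (0 < theta_deg < ~70) and return the height at which it
    crosses the axis again after bouncing off both mirrors."""
    theta = math.radians(theta_deg)
    d = (math.sin(theta), math.cos(theta))

    # Top mirror (assumed): y = f - x^2 / (4 f), with its focus at the origin.
    # In polar form around the focus, the hit distance is 2 f / (1 + cos(theta)).
    t = 2 * f / (1 + math.cos(theta))
    x1 = t * d[0]
    d = reflect(d, (x1 / (2 * f), 1.0))  # leaves parallel to the axis, heading down

    # Bottom mirror (assumed): y = x^2 / (4 f), with its focus at (0, f).
    # The incoming ray is vertical (up to rounding), so it lands at the same x.
    x2, y2 = x1, x1 * x1 / (4 * f)
    d = reflect(d, (-x2 / (2 * f), 1.0))

    # Follow the twice-reflected ray until it reaches the axis x = 0.
    s = -x2 / d[0]
    return y2 + s * d[1]

if __name__ == "__main__":
    for angle in (20, 40, 60):
        print(angle, image_height(angle))
```

Under these assumptions every traced ray re-crosses the axis at height `f`, which is where the opening in the top mirror sits. That convergence of actual light rays is what makes the floating image a real image rather than a virtual one. A full 3D source would need many such points traced, which this sketch does not attempt.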

Figure 1: Main image from Apple’s patent application, showing the unspecified 3D image source (24) located inside the double-parabolic mirror, and the real 3D image of same (32) floating above the mirror. There is also some unspecified optical sensor (16) that may or may not let the user interact with the real 3D image in some unspecified way.