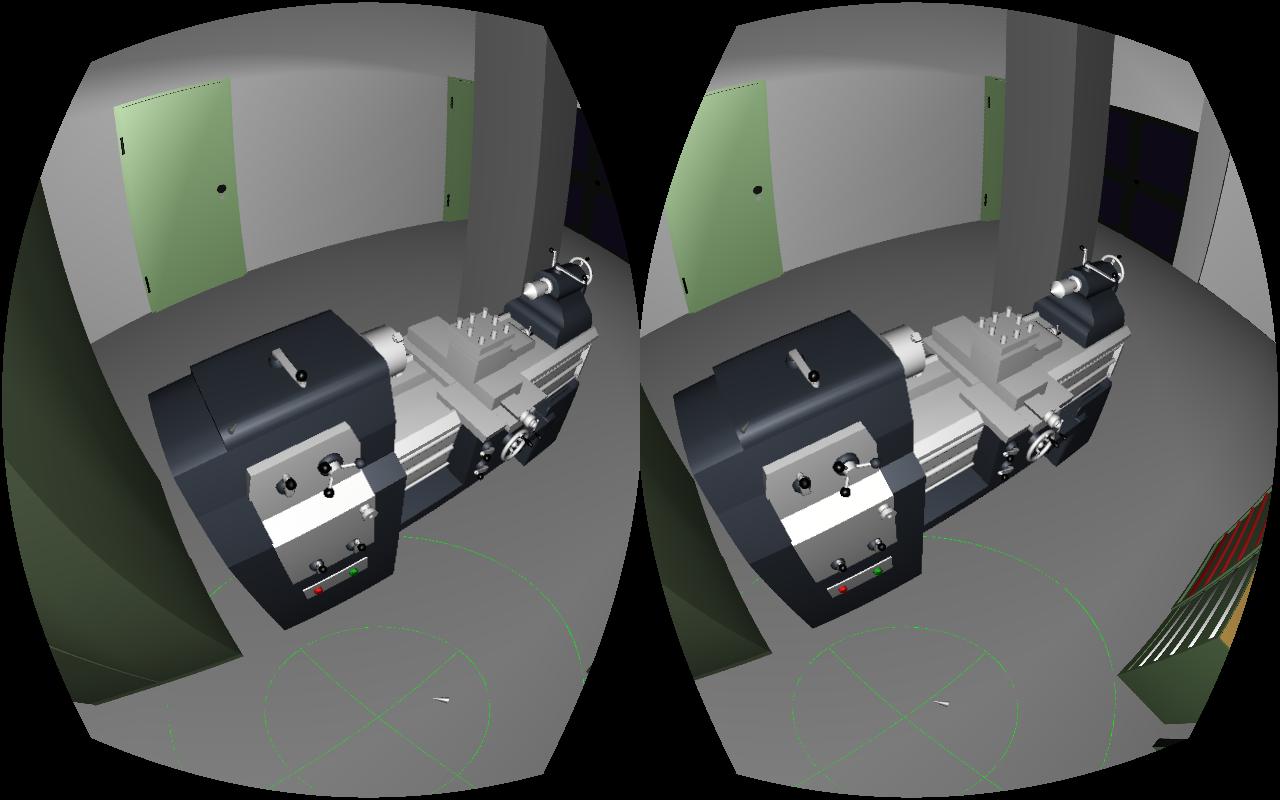

I just released version 3.0 of the Vrui VR toolkit. One of the major new features is native support for the Oculus Rift head-mounted display, including its low-latency inertial 3-DOF (orientation-only) tracker, and post-rendering lens distortion correction. So I thought it’s time for the first (really?) Vrui post in this venue.

What is Vrui, and why should I care?

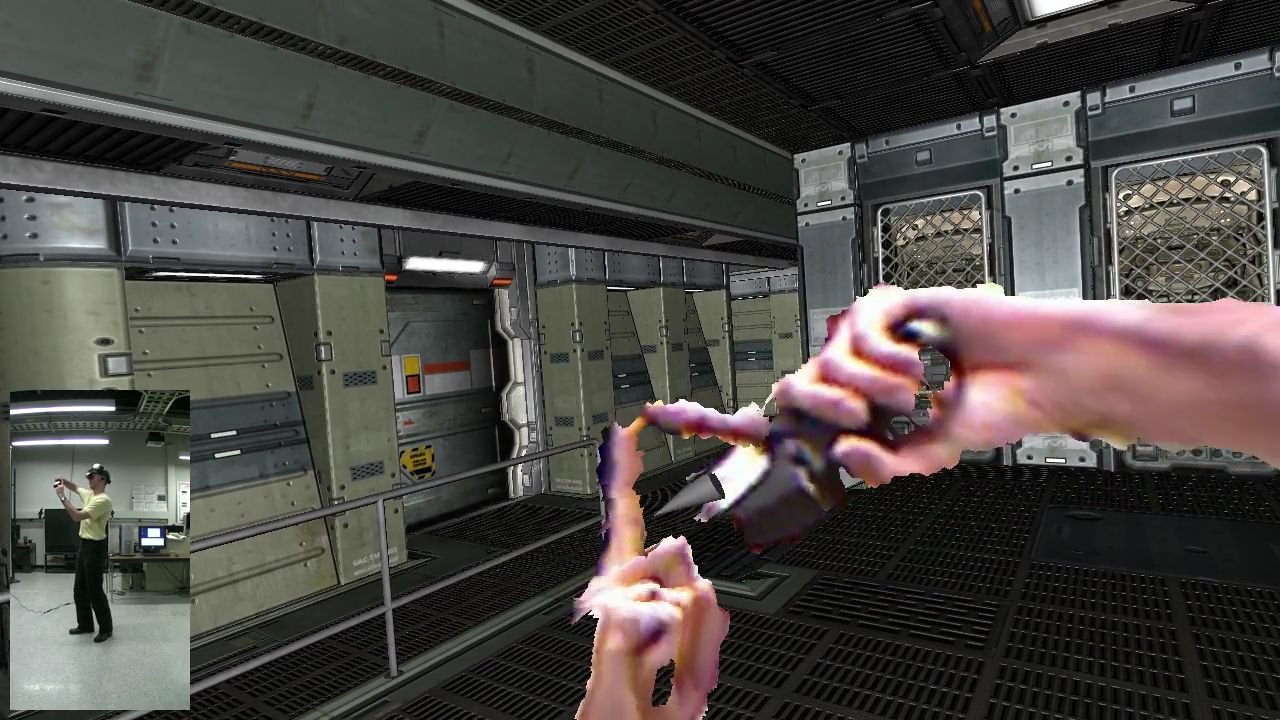

Glad you’re asking. In a nutshell, Vrui (pronounced to start with vroom, and rhyme with gooey) is a high-level toolkit to develop highly interactive applications aimed at holographic (or fully-immersive, or VR, or whatever you want to call them) display environments. A large selection of videos showing many Vrui applications running in a wide variety of environments can be found on my YouTube channel. To you as a developer, this means you write your application once, and users can run it in any kind of environment without you having to worry about it. If new input or output hardware comes along, it’s Vrui’s responsibility to support it, not yours.