I have talked many times about the importance of eye tracking for head-mounted displays, but so far, eye tracking has been limited to the very high end of the HMD spectrum. Not anymore. SensoMotoric Instruments, a company with around 20 years of experience in vision-based eye tracking hardware and software, unveiled a prototype integrating the camera-based eye tracker from their existing eye tracking glasses with an off-the-shelf Oculus Rift DK1 HMD (see Figure 1). Fortunately for me, SMI were showing their eye-tracked Rift at the 2014 Augmented World Expo, and offered to bring it up to my lab to let me have a look at it.

Tag Archives: 3D tracking

On the road for VR: Silicon Valley Virtual Reality Conference & Expo

I just got back from the Silicon Valley Virtual Reality Conference & Expo in the awesome Computer History Museum in Mountain View, just across the street from Google HQ. There were talks, there were round tables, there were panels (I was on a panel on non-game applications enabled by consumer VR, livestream archive here), but most importantly, there was an expo for consumer VR hardware and software. Without further ado, here are my early reports on what I saw and/or tried.

Game Engines and Positional Head Tracking

Oculus recently presented the “Crystal Cove,” a version of the Rift head-mounted display with built-in optical tracking, which is combined with the existing inertial tracker to provide a full 6-DOF (position and orientation) tracking solution at low latency. It is rumored that the Crystal Cove will be released as development kit mark 2 after this year’s Game Developers Conference.

This is great news. I’ve been saying for a long time that Oculus cannot afford to drop positional head tracking on developers at the last minute, because it will break several assumptions built into game engines and other VR software (but let’s talk about game engines here). I’m also happy because the Crystal Cove uses precisely the tracking technology that I predicted: active markers (LEDs) on the headset, and an external camera placed at a fixed position in the environment. I am also sad because I didn’t manage to finish my own after-market optical tracking add-on before Oculus demonstrated their new integrated technology, but that’s life.

So why does positional head tracking break existing games? Because for the first time, the virtual camera used to render a game world is no longer under complete control of the software. Let’s take a step back. In a standard desktop 3D game, the camera is entirely controlled by the software. The software sets it to some position and orientation determined by the game logic, the 3D engine renders the virtual world for that camera setup, and the result is the displayed image.
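To make the difference concrete, here is a minimal Python sketch. It is not taken from any real game engine; `Pose`, `compose`, `desktop_camera`, and `tracked_camera` are hypothetical names, and orientation is reduced to a single yaw angle to keep it short. On the desktop, the camera pose is whatever the game logic says. With positional head tracking, the game logic only places a reference frame in the world, and the tracker-reported head pose is layered on top of it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Position (x, y, z) plus heading (yaw in radians about the vertical y axis).

    Simplified stand-in for a full 6-DOF pose; a real engine would use a
    quaternion or rotation matrix for orientation.
    """
    x: float
    y: float
    z: float
    yaw: float


def compose(parent: Pose, child: Pose) -> Pose:
    """Express `child`, given in the parent's local frame, in the parent's outer frame."""
    c, s = math.cos(parent.yaw), math.sin(parent.yaw)
    # Rotate the child's offset about the y axis by the parent's yaw, then translate.
    return Pose(
        parent.x + c * child.x + s * child.z,
        parent.y + child.y,
        parent.z - s * child.x + c * child.z,
        parent.yaw + child.yaw,
    )


def desktop_camera(game_pose: Pose) -> Pose:
    """Desktop case: the camera is exactly where the game logic puts it."""
    return game_pose


def tracked_camera(game_pose: Pose, head_pose: Pose) -> Pose:
    """Head-tracked case: the game only positions a reference frame;
    the user's tracked head pose decides where the camera ends up inside it."""
    return compose(game_pose, head_pose)


if __name__ == "__main__":
    game = Pose(10.0, 0.0, 5.0, 0.0)
    head = Pose(0.2, 1.7, -0.1, 0.3)  # hypothetical tracker reading, in meters and radians
    print(desktop_camera(game))
    print(tracked_camera(game, head))
```

The point of the sketch is that `head_pose` is an input the software does not choose, so any game logic that assumes it can put the camera wherever it wants no longer holds.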

Elon Musk discovers AR/VR

Serial entrepreneur Elon Musk posted this double whammy of cryptic messages to his Twitter account on August 23rd:

@elonmusk: We figured out how to design rocket parts just w hand movements through the air (seriously). Now need a high frame rate holograph generator.

@elonmusk: Will post video next week of designing a rocket part with hand gestures & then immediately printing it in titanium

As there are no further details, and the video is now slightly delayed (per Twitter as of September 2nd: @elonmusk: Video was done last week, but needs more work. Aiming to publish link in 3 to 4 days.), it’s time to speculate! I was hoping to have seen the video by now, but oh well. Deadline is deadline.

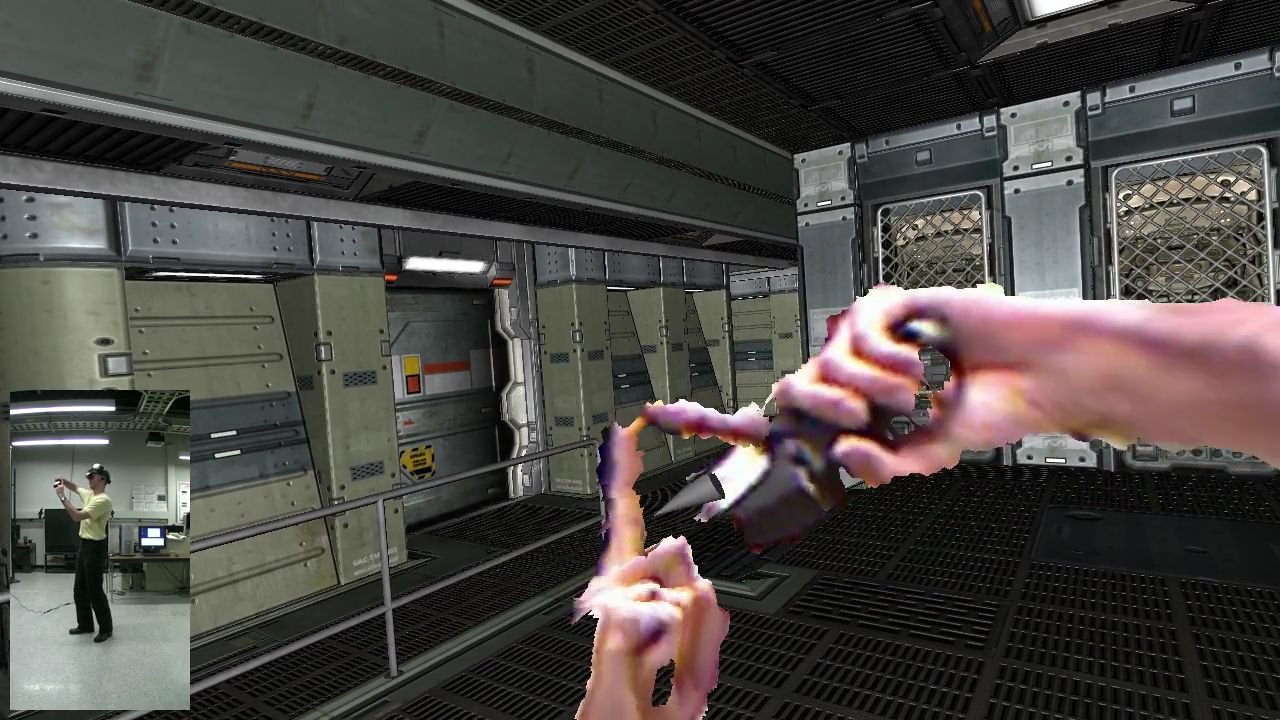

First of all: what’s he talking about? My best guess is a free-hand, direct-manipulation, 6-DOF user interface for a 3D computer-aided design (CAD) program. In other words, something roughly like this (just take away the hand-held devices and substitute NURBS surfaces and rocket parts for atoms and molecules, but leave the interaction method and everything else the same):

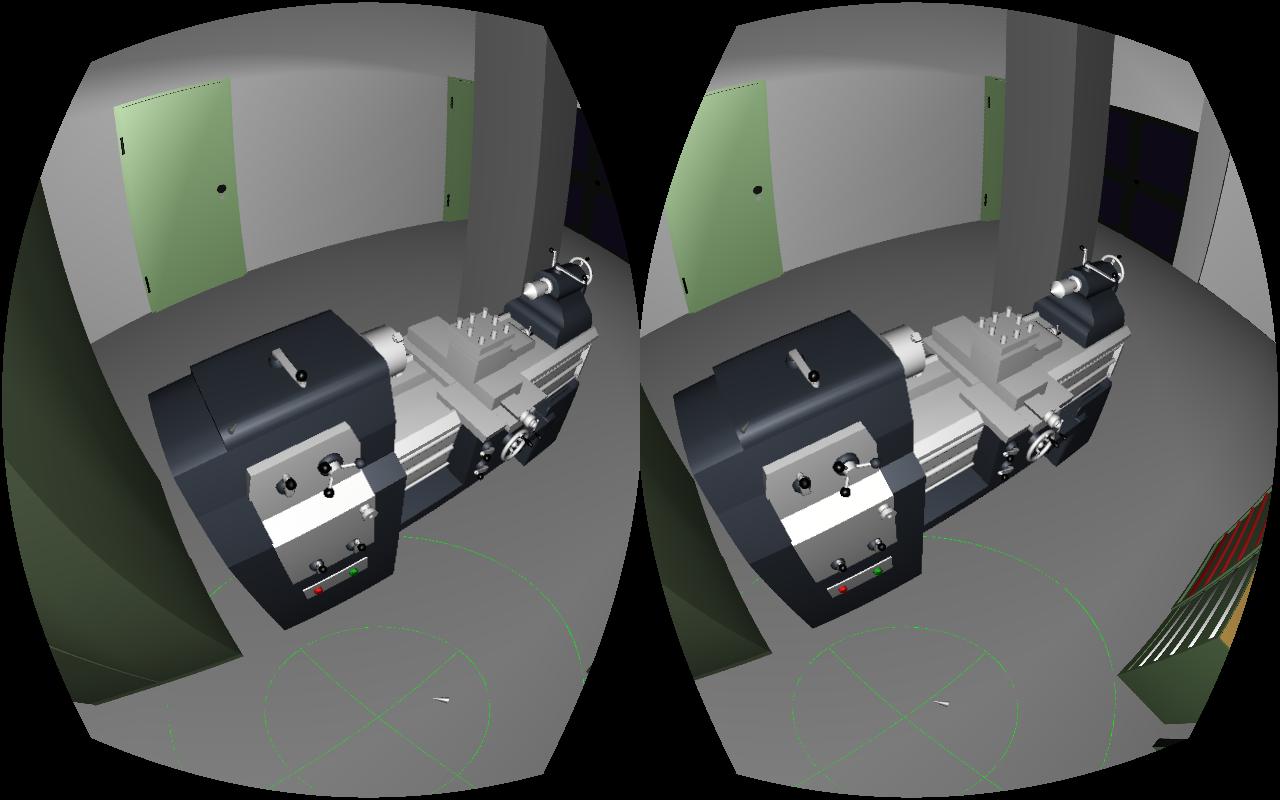

Vrui on (in?) Oculus Rift

I wrote about my first impressions of the Oculus Rift developer kit back in April, and since then I’ve been working (on and off) on getting it fully and natively supported in Vrui (see Figure 1 for proof that it works). Given that Vrui’s somewhat insane flexibility is a major point of pride for me, what was it that I actually had to create to support the Rift? Turns out, not all that much: a driver for the Rift’s built-in inertial tracking unit and a post-processing filter to correct for the Rift’s lens distortion were all it took (more on that later). So why did it take me this long? For one, I was mostly working on other things and only spent a few hours here and there, but more importantly, the Rift is not just a new head-mounted display (HMD), but a major shift in how HMDs are (or will be) used.
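For readers wondering what a lens distortion correction filter can look like, here is a minimal Python sketch of one common approach: a radial polynomial that rescales each image coordinate around the lens center. This is an illustration only, not Vrui's actual filter; the function names and the coefficient values below are hypothetical placeholders, not measured Rift parameters.

```python
def radial_scale(r_squared, k):
    """Evaluate k0 + k1*r^2 + k2*r^4 + k3*r^6 for a squared radius."""
    k0, k1, k2, k3 = k
    return k0 + r_squared * (k1 + r_squared * (k2 + r_squared * k3))


def warp(u, v, center, k):
    """Map an output image coordinate to the source coordinate to sample.

    Coordinates are assumed to be normalized so that the lens center is
    `center`; points farther from the center are pushed outward more,
    which counteracts the opposite distortion introduced by the lens.
    """
    dx, dy = u - center[0], v - center[1]
    s = radial_scale(dx * dx + dy * dy, k)
    return center[0] + dx * s, center[1] + dy * s


if __name__ == "__main__":
    K = (1.0, 0.2, 0.0, 0.0)  # placeholder coefficients, for illustration only
    print(warp(0.5, 0.0, (0.0, 0.0), K))
```

In a real renderer this lookup would run per pixel in a fragment shader over the already-rendered image, which is why it fits naturally as a post-processing step.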

On the road for VR: zSpace developers conference

I went to zCon 2013, the zSpace developers conference, held in the Computer History Museum in Mountain View yesterday and today. As I mentioned in my previous post about the zSpace holographic display, my interest in it is as an alternative to our current line of low-cost holographic displays, which require assembly and careful calibration by the end user before they can be used. The zSpace, on the other hand, is completely plug&play: its optical trackers (more on them below) are integrated into the display screen itself, so they can be calibrated at the factory and work out-of-the-box.

Figure 1: The zSpace holographic display and what it would really look like when seen from this point of view.

So I drove around the bay to get a close look at the zSpace, to determine its viability for my purpose. Bottom line, it will work (with some issues, more on that below). My primary concerns were threefold: head tracking precision and latency, stylus tracking precision and latency, and stereo quality (i.e., amount of crosstalk between the eyes).

First impressions from the Oculus Rift dev kit

My friend Serban got his Oculus Rift dev kit in the mail today, and he called me over to check it out. I will hold back a thorough evaluation until I get the Rift supported natively in my own VR software, so that I can run a direct head-to-head comparison with my other HMDs, and also my screen-based holographic display systems (the head-tracked 3D TVs, and of course the CAVE), using the same applications. Specifically, I will use the Quake III Arena viewer to test the level of “presence” provided by the Rift; as I mentioned in my previous post, there are some very specific physiological effects brought out by that old chestnut, and my other HMDs are severely lacking in that department, and I hope that the Rift will push it close to the level of the CAVE. But here are some early impressions.

The reality of head-mounted displays

So it appears the Oculus Rift is really happening. A buddy of mine went in early on the kickstarter, and his will supposedly be in the mail some time this week. In a way the Oculus Rift, or, more precisely, the most recent foray of VR into the mainstream that it embodies, was the reason why I started this blog in the first place. I’m very much looking forward to it (more on that below), but I’m also somewhat worried that the huge level of pre-release excitement in the gaming world might turn into a backlash against VR in general. So I made a video laying out my opinions (see Figure 1, or the embedded video below).

Intel’s “perceptual computing” initiative

I went to the Sacramento Hacker Lab last night, to see a presentation by Intel about their soon-to-be-released “perceptual computing” hardware and software. Basically, this is Intel’s answer to the Kinect: a combined color and depth camera with noise- and echo-cancelling microphones, and an integrated SDK giving access to derived head tracking, finger tracking, and voice recording data.

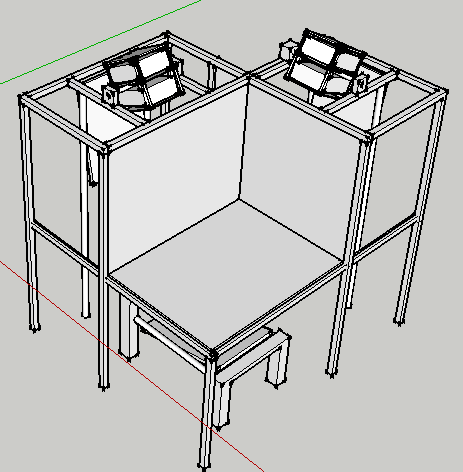

First VR environment in Estonia powered by Vrui

Now here’s some good news: I mentioned recently that reports of VR’s death are greatly exaggerated, and now I am happy to announce that researchers with the Institute of Cybernetics at Tallinn University of Technology have constructed the country’s first immersive display system, and I’m proud to say it’s powered by the Vrui toolkit. The three-screen, back-projected display was entirely designed and built in-house. Its main designers, PhD student Emiliano Pastorelli and his advisor Heiko Herrmann, kindly sent several diagrams and pictures, see Figures 1, 2, 3, and 4.