Someone whose opinion I value highly convinced me to sign up for a Twitter account. So I’m tweeting now. Oh lord, what’s next? A Facebook page?

Author Archives: okreylos

When the novelty is gone…

I just found this old photo on one of my cameras, and it’s too good not to share. It shows former master’s student Peter Gold (now in the PhD program at UT Austin) working with a high-resolution aerial LiDAR scan of the El Mayor-Cucapah fault rupture after the April 2010 earthquake (here is the full-resolution picture, for the curious).

How Milo met CAVE

I just read an interesting article, a behind-the-scenes look at the infamous “Milo” demo Peter Molyneux gave at E3 2009 to introduce Project Natal, i.e., Kinect.

This article is related to VR in two ways. First, the usual progression of overhyping the capabilities of some new technology and then falling flat on one’s face because not even one’s own developers know what the new technology’s capabilities actually are is something that should be very familiar to anyone working in the VR field.

But here’s the quote that really got my interest (emphasis is mine):

Others recall worrying about the presentation not being live, and thinking people might assume it was fake. Milo worked well, they say, but filming someone playing produced an optical illusion where it looked like Milo was staring at the audience rather than the player. So for the presentation, the team hired an actress to record a version of the sequence that would look normal on camera, then had her pretend to play along with the recording. … “We brought [Claire] in fairly late, probably in the last two or three weeks before E3, because we couldn’t get it to [look right]” says a Milo team member. “And we said, ‘We can’t do this. We’re gonna have to make a video.’ So she acted to a video. “Was that obvious to you?” Following Molyneux’s presentation, fans picked apart the video, noting that it looked fake in certain places.

Sound familiar? This is, of course, exactly the problem posed by filming a holographic display and a person inside interacting with it. In a holographic display, the images on the screens are generated for the precise point of view of the person using it, not for the camera. This means the display looks wrong when filmed straight up. If, on the other hand, it is set up so that it looks right on camera, then the person inside will have a very hard time using it properly. Catch-22.
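To make the viewpoint dependence concrete, here is a minimal sketch (an illustration under simplifying assumptions, not Vrui’s actual code) of how a head-tracked display decides where to draw things. It assumes a single flat screen lying in the plane z = 0 with the viewer in front of it (z > 0); the names `Screen`, `off_axis_frustum`, and `screen_point` are hypothetical. The frustum is off-axis because it is anchored to the physical screen edges, and the same virtual point has to be drawn at different screen positions for the user’s eye and for a camera standing somewhere else.

```python
from dataclasses import dataclass


@dataclass
class Screen:
    """Flat screen in the plane z = 0 (meters). Simplification: real CAVE
    walls are arbitrary rectangles in space, not one axis-aligned plane."""
    left: float
    right: float
    bottom: float
    top: float


def off_axis_frustum(eye, screen, near):
    """Return (left, right, bottom, top, near) for an eye at (x, y, z), z > 0,
    i.e. the first five glFrustum arguments. The view transform must also
    translate the scene by -eye for this frustum to be correct."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    scale = near / ez  # similar triangles: near plane vs. screen plane
    return ((screen.left - ex) * scale, (screen.right - ex) * scale,
            (screen.bottom - ey) * scale, (screen.top - ey) * scale, near)


def screen_point(eye, point):
    """Where a virtual point must be drawn on the z = 0 screen so that it
    appears at its true 3D position from `eye` (ray-plane intersection)."""
    ex, ey, ez = eye
    px, py, pz = point
    t = ez / (ez - pz)  # the ray eye + t * (point - eye) crosses z = 0 here
    return (ex + t * (px - ex), ey + t * (py - ey))


screen = Screen(-1.5, 1.5, 0.0, 2.5)
ball = (0.0, 1.0, -1.0)  # virtual object 1 m "behind" the screen
user_eye, camera = (0.0, 1.7, 1.5), (1.0, 1.2, 3.0)
print(off_axis_frustum(user_eye, screen, 0.1))  # symmetric in x, not in y
print(screen_point(user_eye, ball), screen_point(camera, ball))
```

With these made-up numbers, the ball has to be drawn at about (0, 1.28) on the screen for the user but at about (0.25, 1.05) for the camera, so the image can only be right for one of them at a time.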

With the “Milo” demo, the problem was similar. Because the game was set up to interact with whoever was watching it, it ended up interacting with the camera, so to speak, instead of with the player. Now, if the Milo software had been built with the flexibility of proper VR software, it would have been easy to adapt the character’s gaze direction etc. to a filming setting. But since game software has never had to deal with this kind of non-rigid environment, it typically ends up fully vertically integrated, and making this tiny change would probably have taken months of work (that’s kind of what I meant when I said “not even one’s own developers know what the new technology’s capabilities actually are” above). Am I saying that Milo failed because of the demo video? No. But I don’t think it helped, either.
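As a sketch of the kind of flexibility meant here (hypothetical code, not how Milo or any Kinect SDK actually worked): if a character’s gaze target is an explicit 3D point in physical room coordinates, supplied by a pluggable source, then retargeting the gaze is a one-line change instead of a rewrite. The coordinate convention (screen at the origin, x right, y up, z pointing out of the screen into the room) and the names `gaze_angles` and `Character` are assumptions.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def gaze_angles(head: Vec3, target: Vec3) -> Tuple[float, float]:
    """Yaw and pitch (degrees) that turn a head at `head` toward `target`.

    Assumed convention: x right, y up, z out of the screen into the room;
    yaw 0 / pitch 0 means looking straight out of the screen (+z).
    """
    dx, dy, dz = (t - h for t, h in zip(target, head))
    yaw = math.degrees(math.atan2(dx, dz))  # positive = toward +x
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))  # positive = up
    return yaw, pitch


class Character:
    """Hypothetical on-screen character whose gaze target is pluggable
    instead of being hard-wired to one assumed viewer position."""

    def __init__(self, head: Vec3, gaze_source: Callable[[], Vec3]):
        self.head = head
        self.gaze_source = gaze_source  # returns a 3D point in room coordinates

    def update_gaze(self) -> Tuple[float, float]:
        return gaze_angles(self.head, self.gaze_source())


# Usage sketch; all positions are made up (meters, room coordinates).
STRAIGHT_AHEAD = (0.0, 1.0, 10.0)  # "whoever stands in front of the TV"
player_head = (0.5, 1.6, 2.0)      # e.g., reported by a body tracker
milo = Character(head=(0.0, 1.0, 0.0), gaze_source=lambda: STRAIGHT_AHEAD)
print(milo.update_gaze())  # (0.0, 0.0): straight out of the screen
milo.gaze_source = lambda: player_head  # the "tiny change"
print(milo.update_gaze())  # now turned right and up, toward the player
```

The design point is only the decoupling: where the character looks is data, not an assumption baked into the rendering pipeline.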

The take-home message here is that mainstream games are slowly converging towards approaches that have been embodied in proper VR software for a long time now, without really noticing it, and are repeating old mistakes. The Oculus Rift will really bring that out front and center. And I am really hoping it won’t fall flat on its face simply because software developers didn’t do their homework.

Intel’s “perceptual computing” initiative

I went to the Sacramento Hacker Lab last night, to see a presentation by Intel about their soon-to-be-released “perceptual computing” hardware and software. Basically, this is Intel’s answer to the Kinect: a combined color and depth camera with noise- and echo-cancelling microphones, and an integrated SDK giving access to derived head tracking, finger tracking, and voice recording data.

Virtual clay modeling with 3D input devices

It’s funny: suddenly the idea of virtual sculpting or virtual clay modeling using 3D input devices is popping up everywhere. The developers behind the Leap Motion cited it as their inspiration for developing the device in the first place, and I recently saw a demo video; Sony has recently been showing it off as a demo for the upcoming PlayStation 4; and I’ve just returned from an event at the Sacramento Hacker Lab, where Intel was trying to get developers excited about their version of the Kinect, or what they call “perceptual computing.” One of the demos they showed was (guess what?) virtual sculpting. Another was 3D video of a person embedded into a virtual office; now where have I seen that before?

So a few days ago I decided to dust off an old toy application (I last showed it in my 2007 Wiimote hacking video), a volumetric virtual “clay” modeler with real-time isosurface extraction for visualization, and run it with a Razer Hydra controller, which supports bi-manual 6-DOF interaction, a pretty ideal setup for this sort of thing.
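For readers curious how a volumetric clay modeler works in principle, here is a minimal sketch (my illustration under stated assumptions, not the code of the actual application): clay is stored as density values on a regular voxel grid, each hand’s 6-DOF device acts as a spherical brush that adds or removes material, and the visible surface is the isosurface where density equals 0.5. Full isosurface extraction (for example, marching cubes) is left out; `active_cells` only finds the grid cells the surface passes through, which is where marching cubes would generate triangles. The names `ClayVolume`, `apply_brush`, and `active_cells`, the grid size, and the brush falloff are all hypothetical.

```python
import math


class ClayVolume:
    """Hypothetical voxel 'clay': densities in [0, 1] on an n^3 grid.
    The clay surface is the isosurface where density == iso."""

    def __init__(self, n, iso=0.5):
        self.n = n
        self.iso = iso
        self.d = [[[0.0] * n for _ in range(n)] for _ in range(n)]

    def _span(self, c, radius):
        # Grid indices that can lie within radius + 0.5 of coordinate c.
        return range(max(0, math.floor(c - radius - 1)),
                     min(self.n, math.ceil(c + radius + 1) + 1))

    def apply_brush(self, center, radius, add=True):
        """Add (add=True) or carve away clay inside a sphere, e.g. centered
        on a tracked controller tip. The brush weight falls off linearly from
        1 to 0 across a one-voxel shell, crossing 0.5 exactly at `radius`."""
        cx, cy, cz = center
        for x in self._span(cx, radius):
            for y in self._span(cy, radius):
                for z in self._span(cz, radius):
                    dist = math.sqrt((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2)
                    w = min(1.0, max(0.0, radius - dist + 0.5))
                    if w > 0.0:
                        v = self.d[x][y][z]
                        self.d[x][y][z] = max(v, w) if add else min(v, 1.0 - w)

    def active_cells(self):
        """Count cells whose 8 corners straddle the iso value; marching
        cubes would emit triangles only in these cells."""
        d, iso, count = self.d, self.iso, 0
        for x in range(self.n - 1):
            for y in range(self.n - 1):
                for z in range(self.n - 1):
                    above = sum(d[x + i][y + j][z + k] >= iso
                                for i in (0, 1) for j in (0, 1) for k in (0, 1))
                    if 0 < above < 8:
                        count += 1
        return count


vol = ClayVolume(16)
vol.apply_brush((8, 8, 8), 4)              # one hand adds a ball of clay
vol.apply_brush((8, 8, 12), 2, add=False)  # the other hand carves a dent
print(vol.active_cells())
```

To stay interactive, a real modeler would re-extract the surface only in the cells touched by the brush each frame, the same reason `apply_brush` limits itself to the brush’s bounding box; this sketch still rescans the whole grid in `active_cells` for simplicity.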

A celebration of sorts

The odometer on my YouTube channel just rolled over 6,666,666 total views. I tried to catch it exactly, but due to YouTube’s view count buffering, that didn’t happen. Anyway, here’s screenshot proof. Yay!

Oh, the places you’ll go!

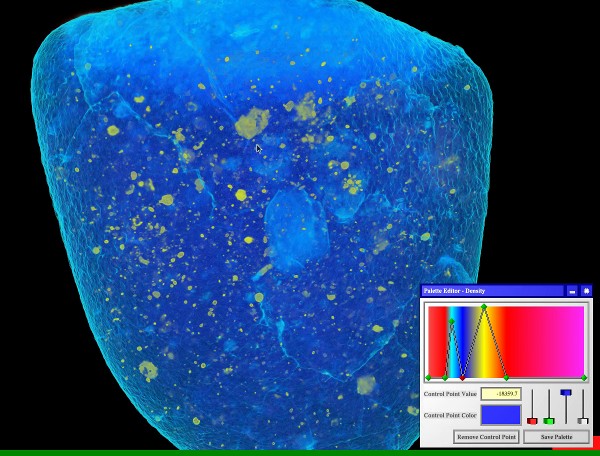

Hey look! A still frame of an animated visualization I created of a CAT scan of a fragment of the meteorite that landed close to Sutter’s Mill in Northern California almost a year ago made the cover of Microscopy Today. Here’s a link to the original post I wrote back in December 2012, and because it’s really pretty, and all grown up and alone out there in the world now, here is the picture in question again:

To quickly recap from my original post, the CAT scan of this meteorite fragment was taken at the UC Davis Center for Molecular and Genomic Imaging, and then handed to me for visualization by Prof. Qing-zhu Yin of the UC Davis Department of Geology. The movies I made were to go along with the publication of Qing-zhu’s and his co-authors’ paper in Science.

I thought I did a really good job with the color map, given that that’s not normally my forte. The icy blue to dark blue gradient nicely brings out the fractures in the crust, and the heavy-element inclusions stand out prominently in gold (Blue and gold? UC Davis? Get it?). You can watch the full video on YouTube. I’d link to Qing-zhu’s own copy of the video, but it has cooties, I mean ads on it, eww.
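A color map of this kind is a piecewise-linear transfer function from normalized density to color. The sketch below is a hypothetical reconstruction: the control-point positions and RGB values in `CONTROL_POINTS` are illustrative guesses, not the ones used for the actual movie, and a real volume renderer would also map density to opacity. It blends icy blue into dark blue across the lower density range and then switches sharply to gold for the densest material.

```python
from bisect import bisect_right

# Hypothetical control points: (normalized density, (r, g, b)), all in [0, 1].
# Illustrative values only, not the ones used for the meteorite movie.
CONTROL_POINTS = [
    (0.00, (0.80, 0.92, 1.00)),  # icy blue
    (0.70, (0.05, 0.10, 0.45)),  # dark blue
    (0.85, (0.05, 0.10, 0.45)),  # hold dark blue up to the heavy-element range
    (0.90, (1.00, 0.78, 0.20)),  # sharp switch to gold
    (1.00, (1.00, 0.78, 0.20)),
]
KEYS = [k for k, _ in CONTROL_POINTS]


def color_map(v):
    """Linearly interpolate between the two control points bracketing v."""
    v = min(max(v, 0.0), 1.0)  # clamp out-of-range densities
    i = bisect_right(KEYS, v) - 1
    if i >= len(CONTROL_POINTS) - 1:
        return CONTROL_POINTS[-1][1]
    (k0, c0), (k1, c1) = CONTROL_POINTS[i], CONTROL_POINTS[i + 1]
    t = (v - k0) / (k1 - k0)
    return tuple(a + t * (b - a) for a, b in zip(c0, c1))


for density in (0.0, 0.35, 0.7, 0.875, 0.95):
    print(density, color_map(density))
```

Putting the gold transition in a narrow band near the top of the density range is what makes rare, dense inclusions pop out against the blue bulk.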

And as can be seen in a full-page ad on page 31 of the same issue of Microscopy Today, my picture (no doubt by virtue of the 3D meteorite fragment scan shown in it) was apparently one of the winners of a “coolest thing you’ve never seen” contest held by the company that made the X-ray CT scanner. My little picture is Miss September 2013. Hooray, I guess?

Low-cost VR for materials science

In my ongoing series on VR’s stubborn refusal to just get on with it and croak already, here’s an update from the materials science front. Lilian Dávila, a former UC Davis grad student and now a professor at UC Merced, was recently featured in a three-part series about cutting-edge digital research at UC Merced, produced by the PR arm of the University of California. One part of the series is a 10-minute short focusing on her use of low-cost holographic displays for interactive design and analysis of nanostructures.

KeckCAVES on Mars, pt. 4

I mentioned before that we had a professional film crew in the CAVE a while back to produce promotional videos for the University of California’s “Onward California” PR program. The finished videos have finally been posted on the Office of the President’s official YouTube channel. Unlike my own recent CAVE videos, these have excellent audio.

Figure 1: Dawn Sumner, member of the NASA Curiosity Mars rover mission’s science team, interacting with a life-size 3D model of the rover in the UC Davis KeckCAVES holographic display environment. Still image taken from “The surface of Mars.”

These short videos focus on Dawn Sumner, a professor in the UC Davis Department of Geology and a KeckCAVES core member. This time, Dawn is wearing her hat as a planetary explorer and talking about NASA’s Curiosity Mars rover mission and her role in it.

Of CAVEs and Curiosity: Imaging and Imagination in Collaborative Research

On Monday, 03/04/2013, Dawn Sumner, one of KeckCAVES’ core members, gave a talk in UC Berkeley’s Art, Technology, and Culture lecture series, together with Meredith Tromble of the San Francisco Art Institute. The talk’s title was “Of CAVEs and Curiosity: Imaging and Imagination in Collaborative Research,” and it can be viewed online (1:12:55 total length; roughly 50 minutes of talk and 25 minutes of lively discussion).

While the talk is primarily about the “Dream Vortex,” an evolving virtual reality art project led by Dawn and Meredith involving KeckCAVES hardware (CAVE and low-cost VR systems) and software, Dawn also touches on several of her past and present scientific (and art!) projects with KeckCAVES, including her work on ancient microbialites, exploration of live stromatolites in ice-covered lakes in Antarctica, our previous collaboration with performing artists, and, most recently, her leadership role with NASA’s Curiosity Mars rover mission.

The most interesting aspect of this talk, for me, was that the art project and all the software development for it are done by the “other” part of the KeckCAVES project: the more mathematics- and complex-systems-oriented cluster around Jim Crutchfield of UC Davis’ Complexity Sciences Center, his post-docs, and his graduate students. In practice, this meant that I saw some of the software for the first time, and also heard about some problems the developers ran into that I was completely unaware of. This is interesting because it means that the Vrui VR toolkit, on which all this software is based, is maturing from a private pet project into something that’s actually being used by parties who are not directly collaborating with me.