I just found an article about my 3D Video Capture with Three Kinects video on Discovery News (which is great!), but then I found Figure 1 in the “Related Gallery.” Oh, and they also had a link to another article titled “Virtual Reality Sex Game Set To Stimulate” right in the middle of my article, but you learn to take that kind of thing in stride.

Figure 1: Image in the “related gallery” on Discovery News. Original caption: “Apple has filed a patent for a holographic phone, a concept that sounds absolutely cool. We can’t wait. But what would it look like? A video created by animator Mike Ko, who has made animations for Google, Nike, Toyota, and NASCAR, gives us an idea. Check it out here”

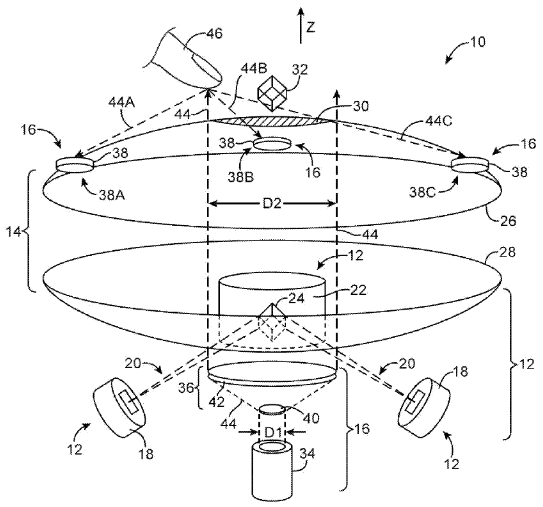

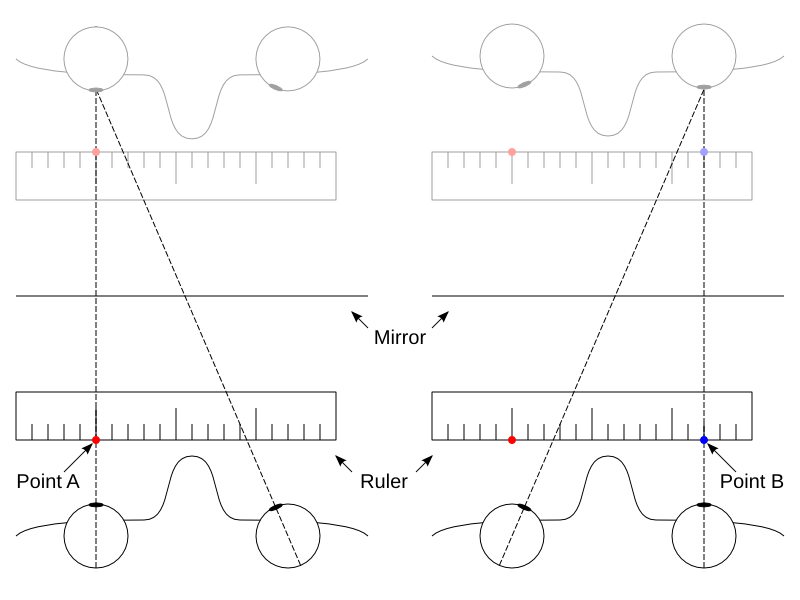

Nope. Nope nope nope no. Where do I start? No, Apple has not filed a patent for a holographic phone. And even if Apple had, this is not what it would look like. I don’t want to rag on Mike Ko, the animator who created the concept video (watch it here, it’s beautiful). It’s just that this is not how holograms work. See Figure 2 for a very crude Photoshop (well, Gimp) job on what this would look like if such holographic screens really existed, and Figure 4 for an even cruder job of what the thing Apple actually patented would look like, if they were audacious enough to put it into an iPhone.